User:MitchJ/Application

Contents

Rule-based finite-state disambiguation[edit]

Name: Mitchell JEFFREY

Institution: Monash University, Melbourne, Australia (UTC+10)

IRC: mitch-j on #apertium

Introduction[edit]

Machine learning facilitates international communication, and is especially useful in supporting the continued usage of minority languages for which human translators are in short supply. Apertium represents both an academic exercise and a useful software development project whose goal it is to provide an accurate, universal automated translation engine and accompanying language-specific datasets.

My bilingual experience has taught me that each language provides an insightful, valuable and most importantly, unique way of interpreting and conceptualising the world around us. The subtle tools of expression encapsulated within a language are extremely valuable both in terms of cultural heritage and linguistic history. A sad fact of the modern world is that less frequently spoken minority languages are slowly dying off in response to the convenience and necessity required by increasingly globalised communication. Language extinction is occurring all around the world, including my native Australia which once boasted almost 1000 indigenous languages.

Machine translation is one tool of many which could help slow the decline of minority languages not only as a stand-in replacement for human translators, but also as a educational tool. Being an open source project, Apertium is in a position to support minority languages which aren’t financially viable to maintain in the commercial sense.

On a personal level, machine translation projects such as this represent a unique opportunity to combine my interests in computer science, natural language and mathematics/logic. I am interested in how humans communicate and convey meaning; investigating how machines could process human language (for instance with a translation package such as Apertium) would not only be an insightful venture in itself, but would also inform my understanding of the condition of human communication.

Synopsis[edit]

Disambiguation is an essential part of the MT process, the aim of which is to correctly identify the meaning and function of each word of input text. Apertium currently uses a bigram/trigram part-of-speech tagger, where any given word is disambiguated entirely on the basis of how the two or three words surrounding it are categorised. This project seeks to implement a complementary disambiguation framework suited to broader constraints, where rules can span entire sentences rather than just adjacent words. So-called constraint grammar (CG) rules would be processed before input text is passed on to the existing bigram/trigram apertium-tagger. In broad terms, the CG parser would be implemented as a language-independent finite state transducer (FST), which uses a language-specific constraint grammar to process text.

Benefits to Apertium[edit]

Apertium currently offers CG disambiguation with vislcg3, which does not operate on a FST model and suffers from poor performance as a result. Introducing the option of efficient constraint grammar disambiguation benefits the entire Apertium community as it is language-independent (although language-specific grammars will need to be written to take advantage of it). CG disambiguation would allow the addition of new language pairs which are currently unsuitable for parsing with the current bigram disambiguation methods. CG disambiguation also provides additional flexibility to current language pairs by allowing for rules with have broader scope - as long as an entire sentence. Ultimately the availability of a CG parser would benefit the accuracy of translations in most languages.

Details[edit]

The aim of the CG parser is to take natural language input text and functionally identify (disambiguate) each word of input. CG parsing is introduced at stage four of the overall translation process:

- Preprocessing - case conversion, sentence delimitation (handled by lttoolbox)

- Lexicon updating - identification of unknown words

- Morphological analysis - attaching a list of possible morphological readings to each wordform (also handled by lttoolbox)

- Local morphological disambiguation - some readings may be immediately discarded with a simple inspection. Here we introduce the CG parser, which applies constraint rules in three simultaneous phases: (1) application of disambiguation constraints; (2) assignment of clause boundaries; (3) assignment of grammatical labels such as ‘finite main verb’ or ‘subject’. Clause boundaries are identified and iterated over until there are no more changes to be made by rules in the grammar.

- Final processing by the existing bigram tagger, which is guaranteed to leave only one reading.

- Postprocessing (eg, reintroduction of formatting) with lttoolbox

There are several design considerations and goals to take into account when planning to implement a CG parser. Consideration will need to be given to how the parser design affects end-user functionality for users as well as grammar writers, and should include facilities for testing, debugging and optimisation of constraint grammar definitions.

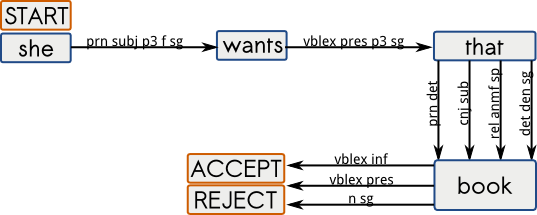

One possible way of representing an ambiguously tagged sentence is in the form of a directed graph where words are represented as nodes; a word’s tags then become edges originating from that node. The particular manner (to be decided) in which rules are applied to sentences would determine what kind of finite state machine the graph should become. Grammar rules could similarly be represented in finite state notation, with sentential components as edges between tags as nodes. This is similar to the finite-state intersection grammar approach introduced by authors such as Koskenniemi, Tapanainen and Voutilainen. Although much more thorough consideration would be given to the sentence-grammar architecture, the diagram below illustrates how the sentence “she wants that book” could be represented in graph form:

Deliverables and Schedule[edit]

The primary focus of this project will be to design, implement, integrate and document a language-independent CG parser. This will be a python prototype with anticipated future porting to C++ for performance. The XML grammar standard which will be accepted will also need to be specified.

- 4 week community Bonding: Reading around the subject area and acquiring specific skills such as parser design, language processing and computational linguistics. Research into other CG implementations will be necessary. Independent FST libraries exist for C and python [1], [2], and will provide much of the required FST behaviour.

- 12 week coding period with goals and deliverables:

- [1 week] Represent a tagged sentence as an appropriate state graph;

- [2 weeks] Standardise specification of CG rules (with reference to prior art in vislcg3/CG-2);

- [6-9 weeks] Implement essential rules (SELECT, REMOVE, LIST, ADD, MAP, SUBSTITUTE, STAR(*), BARRIER, CAREFUL(C)) in the FST model;

- [remainder] Merge or incorporate functionality with vislcg3, time permitting

- [1 week sprint] Final polish, debugging and documentation effort

The three-month coding period would suggest that an agile approach be best, with short (perhaps weekly) cycles of reading, research, planning, implementation, testing and documentation - in approximately that order. I will have part-time coursework commitments at university for the first half of development, but can reasonably expect that time requirements will be minimal and that I will be able to commit to this project on a full-time (30-40 hours per week) basis throughout.

Bio[edit]

I am based in Melbourne, Australia and currently completing course requirements for a B.Sc degree on a part-time basis with majors in mathematics and physics, as well as a minor in computer science. Current language skills include Java, Python, Javascript, HTML/CSS and LaTeX. Previous project experience has seen me learn and use new languages, tools and APIs as required (namely the creation of a data analysis suite for a research physics laboratory built with Enthought Python).

One of the most interesting courses in my degree has been one on the theory of computation, which covered topics such as finite state machines, formal grammar, parsing and compiler design. It would be an absolute delight to deepen and broaden my knowledge along these lines by producing a practical codebase for processing natural language.

Recently I’ve been working on an online chessboard (jQuery/node.js; http://cosmicpostalchess.nodester.com), meeting with my Japanese-English language exchange partner and playing sports such as Ultimate and Rogaining.