Difference between revisions of "Concordancer"

Revision as of 00:45, 28 November 2013

A concordancer is a tool that shows the occurrences of a word together with their surrounding context.

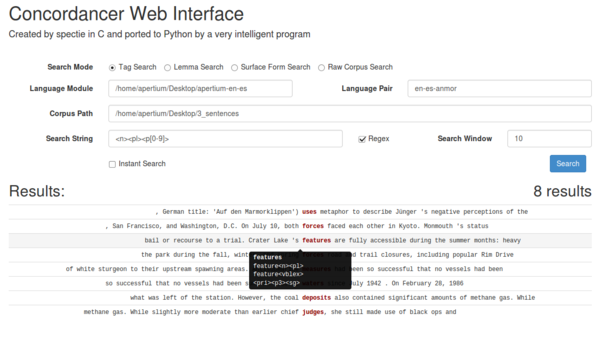

Web Concordancer

A concordancer web interface is available in the SVN at /trunk/apertium-tools/webconcordancer. Run python3 server.py to serve the interface at localhost:8080/concordancer.html. The web interface requires four primary inputs to set up:

- Corpus path: an absolute path to the corpus (e.g. /home/apertium/Desktop/corpus.txt)

- Language module: an absolute path to the language module to use for analysis (e.g. /home/apertium/Desktop/apertium-en-es)

- Language Pair: the language pair to pass to Apertium for morphological analysis (e.g. en-es-anmor)

- Search Window: the size of the context shown around each located token, measured in characters or in tokens (e.g. 15)
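To illustrate what the search window controls, here is a minimal sketch of keyword-in-context output with the window counted in tokens. The helper name `kwic` and the whitespace tokenisation are assumptions made for this example; this is not the web concordancer's actual code.

```python
import re

def kwic(text, pattern, window=3):
    """Return (left, match, right) for every token that fully matches pattern.

    Hypothetical helper: tokens are split on whitespace, and the window is
    the number of tokens kept on each side of the match.
    """
    tokens = text.split()
    regex = re.compile(pattern)
    hits = []
    for i, token in enumerate(tokens):
        if regex.fullmatch(token):
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits

# Print each hit with the left context right-aligned, as concordances usually are.
for left, match, right in kwic("the cat sat on the mat near the door", "the", window=2):
    print(f"{left:>12} [{match}] {right}")
```

A window counted in characters would slice the raw string around the match offsets instead of slicing the token list.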

The interface also supports four distinct search modes, each of which has support for regular expressions (enabled via a checkbox):

- Tag Search: this mode will output all tokens in the corpus with all of the specified search tags and supports regex inside individual tags

  - e.g. `<n>` will show all noun tokens

  - e.g. `<n><sg>` will show all singular noun tokens

  - e.g. `<p[1-2]>` will show all first person and second person tokens

- Lemma Search: this mode will output all tokens in the corpus which contain lemmas that match the given search string and supports regex within the search string (omit the regex '$' token)

  - e.g. `be` will show all the tokens that are forms of the word 'be'

  - e.g. `t.*` will show all the tokens that have lemmas beginning with 't'

- Surface Form Search: this mode will output all tokens in the corpus which have a surface form that matches the search string and supports regex within the search string (omit the regex '$' token)

  - e.g. `the` will show all the instances of 'the' in the corpus

  - e.g. `[0-9]+` will show all the tokens composed entirely of Arabic numerals

- Raw Corpus Search: this mode will find all matches to the search string in the corpus and supports regex within the search string

  - e.g. `previous` will find all the instances of the letter sequence 'previous' in the corpus

  - e.g. `\.$` will find all the instances of a period character ending a line in the corpus
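The four search modes above can be sketched over text in Apertium stream format (`^surface/lemma<tag>...$`). This is only an illustration under stated assumptions: the function names are hypothetical, escaped characters in the stream are ignored, the sample analysis is hand-written rather than real Apertium output, and `fullmatch` is used so that lemma and surface patterns are anchored without a trailing '$'.

```python
import re

# One lexical unit in Apertium stream format: ^surface/reading1/reading2$
UNIT = re.compile(r"\^([^/$]*)/([^$]*)\$")
TAG = re.compile(r"<([^>]*)>")

def parse(stream):
    """Return [(surface, [(lemma, [tag, ...]), ...]), ...] for analysed text."""
    units = []
    for surface, analyses in UNIT.findall(stream):
        readings = [(a.split("<", 1)[0], TAG.findall(a)) for a in analyses.split("/")]
        units.append((surface, readings))
    return units

def tag_search(units, query):
    """Surface forms with a reading that carries every tag in query (regex per tag)."""
    wanted = [re.compile(t) for t in TAG.findall(query)]
    return [surface for surface, readings in units
            if any(all(any(w.fullmatch(t) for t in tags) for w in wanted)
                   for _, tags in readings)]

def lemma_search(units, pattern):
    """Surface forms with at least one reading whose lemma matches pattern."""
    regex = re.compile(pattern)
    return [surface for surface, readings in units
            if any(regex.fullmatch(lemma) for lemma, _ in readings)]

def surface_search(units, pattern):
    """Surface forms that match pattern as a whole."""
    regex = re.compile(pattern)
    return [surface for surface, _ in units if regex.fullmatch(surface)]

def raw_search(text, pattern):
    """Start offsets of every match in the unanalysed text."""
    # MULTILINE lets '$' match at the end of every line, not only at the end of the text.
    return [m.start() for m in re.finditer(pattern, text, re.MULTILINE)]

# Hand-written sample analysis, not real Apertium output.
units = parse("^The/the<det><def><sp>$ ^cat/cat<n><sg>$ "
              "^is/be<vbser><pri><p3><sg>$ ^there/there<adv>$")
print(tag_search(units, "<n><sg>"))
print(tag_search(units, "<p[1-3]>"))
print(lemma_search(units, "be"))
print(lemma_search(units, "t.*"))
print(raw_search("A previous line.\nNo stop here\nEnd.", r"\.$"))
```

A token is reported once even when several of its readings match, which keeps the result list aligned with positions in the corpus.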

Current Interface

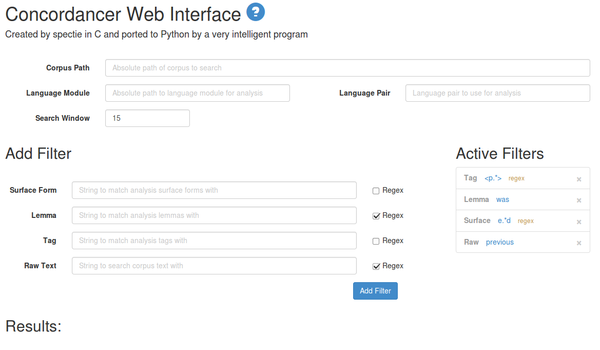

Proposed Changes

- Apply an unlimited number of sets of filters simultaneously.

- To appear in the output, a line of the corpus must have tokens which fulfill all the sets of filters specified.

- Filters can be added and deleted one at a time.
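One possible reading of the proposal is that each set of filters is a predicate over a single token, and a line is shown only if every set is satisfied by at least one token on that line. The sketch below follows that reading; `make_filter`, `line_passes` and the token dictionaries are hypothetical names and data chosen for illustration.

```python
import re

def make_filter(lemma=None, surface=None, tags=()):
    """Build one filter set: every criterion given must hold for the same token."""
    lemma_re = re.compile(lemma) if lemma else None
    surface_re = re.compile(surface) if surface else None
    tag_res = [re.compile(t) for t in tags]

    def matches(token):
        if lemma_re and not lemma_re.fullmatch(token["lemma"]):
            return False
        if surface_re and not surface_re.fullmatch(token["surface"]):
            return False
        # Each requested tag pattern must match some tag of the token.
        return all(any(t.fullmatch(tag) for tag in token["tags"]) for t in tag_res)

    return matches

def line_passes(tokens, filters):
    """A line passes if each filter set is fulfilled by at least one of its tokens."""
    return all(any(f(tok) for tok in tokens) for f in filters)

# Hand-written analysed lines, for illustration only.
line_a = [
    {"surface": "The", "lemma": "the", "tags": ["det", "def", "sp"]},
    {"surface": "cats", "lemma": "cat", "tags": ["n", "pl"]},
    {"surface": "are", "lemma": "be", "tags": ["vbser", "pres"]},
]
line_b = [
    {"surface": "Cats", "lemma": "cat", "tags": ["n", "pl"]},
    {"surface": "sleep", "lemma": "sleep", "tags": ["vblex", "pres"]},
]

# Two filter sets: a plural noun, and any form of 'be'.
filters = [make_filter(tags=["n", "pl"]), make_filter(lemma="be")]
print([line_passes(line, filters) for line in (line_a, line_b)])
```

Because the filters live in a plain list, adding or deleting a filter in the interface would correspond to appending to or removing from that list.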

Proposed Interface (draft)

TODO

- Efficiency: make it scale up to corpora of millions of words. This might involve (a) pre-analysing the corpus, so that the program reads from a pre-analysed corpus instead of reading and analysing the raw text on every run; and (b) indexing with SQLite or something similar.
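As a rough sketch of the indexing idea, a pre-analysed corpus could be loaded into an SQLite table with one row per token and an index on the lemma column. The schema, the function names and the sample data below are assumptions for illustration; nothing like this exists in the tool yet.

```python
import sqlite3

def build_index(analysed_lines):
    """Store one row per token and index the lemma column.

    analysed_lines is assumed to be a list of lines, each a list of
    (surface, lemma, tags) tuples produced by an earlier analysis pass.
    """
    conn = sqlite3.connect(":memory:")  # a file path would make the index persistent
    conn.execute(
        "CREATE TABLE token (line_no INTEGER, position INTEGER, "
        "surface TEXT, lemma TEXT, tags TEXT)"
    )
    conn.execute("CREATE INDEX token_lemma ON token (lemma)")
    rows = [
        (line_no, pos, surface, lemma, tags)
        for line_no, line in enumerate(analysed_lines)
        for pos, (surface, lemma, tags) in enumerate(line)
    ]
    conn.executemany("INSERT INTO token VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()
    return conn

def lines_with_lemma(conn, lemma):
    """Line numbers containing at least one token with exactly this lemma."""
    cur = conn.execute(
        "SELECT DISTINCT line_no FROM token WHERE lemma = ? ORDER BY line_no",
        (lemma,),
    )
    return [row[0] for row in cur]

# Hand-written sample data, not real Apertium output.
corpus = [
    [("The", "the", "<det><def><sp>"), ("cat", "cat", "<n><sg>"), ("is", "be", "<vbser><pri><p3><sg>")],
    [("Cats", "cat", "<n><pl>"), ("sleep", "sleep", "<vblex><pres>")],
    [("We", "prpers", "<prn><subj><p1><mf><pl>"), ("were", "be", "<vbser><past>")],
]
conn = build_index(corpus)
print(lines_with_lemma(conn, "be"))
```

An index of this kind helps exact lemma lookups; regular-expression queries would still need a scan over the candidate rows or a different strategy.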