Difference between revisions of "User:Pankajksharma/Application"

| (31 intermediate revisions by 2 users not shown) | |||

| Line 5: | Line 5: | ||

'''Name:''' Pankaj Kumar Sharma |

'''Name:''' Pankaj Kumar Sharma |

||

'''E-mail address:''' sharmapankaj1992 |

'''E-mail address:''' sharmapankaj1992 (at) gmail (dot) com |

||

'''Other information that may be useful to contact:''' |

'''Other information that may be useful to contact:''' |

||

My alternative email: pankaj |

My alternative email: pankaj (at) pankajksharma (dot) com |

||

IRC: pankajksharma |

|||

== Interest in MT and Apertium == |

== Interest in MT and Apertium == |

||

| Line 15: | Line 17: | ||

'''Why is it you are interested in machine translation?''' |

'''Why is it you are interested in machine translation?''' |

||

I am interested in Machine Translation (MT) because of two reasons. The first one is little Philosophical one, i.e., the ideology of making all the digital information present openly available to everyone regardless of the language in which it's written or |

I am interested in Machine Translation (MT) because of two reasons. The first one is little Philosophical one, i.e., the ideology of making all the digital information present, openly available to everyone regardless of the language in which it's written or the language used by it's recipients. Also advancement in MT would cause in reducing the language barrier in the exchange process of ideas. |

||

Second, I did my minor in Text Classification and since then become interested in Machine Learning and took me closer to NLP ( |

Second, I did my minor in Text Classification and since then become interested in Machine Learning and took me closer to NLP (a part of MT), this made me interested in MT. To be honest and I had only used MT only as an end-user until recently when I became aware of Apertium. |

||

'''Why is it that they are interested in the Apertium project?''' |

'''Why is it that they are interested in the Apertium project?''' |

||

| Line 24: | Line 26: | ||

* It's open source. |

* It's open source. |

||

* |

* It's extremely helping community (experienced this from my interaction during project discussion). |

||

* All the technique used in Apertium are provided as research papers (so anyone could learn from them). |

* All the technique used in Apertium are provided as research papers (so anyone could learn from them). |

||

* Apertium works Offline as well (:P). |

* Apertium works Offline as well (:P). |

||

== Proposal == |

== Proposal == |

||

* [[Ideas_for_Google_Summer_of_Code/Command-line_translation_memory_fuzzy-match_repair]] |

|||

=== Title === |

=== Title === |

||

| Line 34: | Line 40: | ||

Fuzzy-match repair from Translation Memory |

Fuzzy-match repair from Translation Memory |

||

=== |

=== Description === |

||

For a given sentence S in a source language and it's translation T in another language, the idea is to find the translation (T') of another sentence S'. The condition that S and S' must hold is that S and S' must have high Fuzzy-match score (or Low Edit Distance) between them. Depending upon what changes from S to S', we employ a set of repair operations (t, t') on T to get our T'. Here T' is a possible translation of S' and the pairs (t, t') (also, called repair operations here) holds the condition that t is a sub-segment of T and t' is the possible change which leads us to T'. |

For a given sentence S in a source language and it's translation T in another language, the idea is to find the translation (T') of another sentence S'. The condition that S and S' must hold is that S and S' must have high Fuzzy-match score (or Low Edit Distance) between them. Depending upon what changes from S to S', we employ a set of repair operations (t, t') on T to get our T'. Here T' is a possible translation of S' and the pairs (t, t') (also, called repair operations here) holds the condition that t is a sub-segment of T and t' is the possible change which leads us to T'. |

||

In the second phase of the project we'd preprocess an existing translation memory corresponding to the source and target language pair and store validated (s,t) pairs (s is a sub-segment of S, t is a sub- |

In the second phase of the project we'd preprocess an existing translation memory corresponding to the source and target language pair and store validated (s,t) pairs (here s is a sub-segment of S, t is a sub-segment of T and s translates to t). These pairs could be used for generating better and verified (s', t') pairs (here s' is a sub-sequence of S' and s' translates to t'). |

||

For details please see [[#Project_Details|Project Details]] section. |

For details please see [[#Project_Details|Project Details]] section. |

||

| Line 46: | Line 52: | ||

=== Project Details === |

=== Project Details === |

||

These details are developed after discussions with [[User:mlforcada]] and may have slight variations during the implementation phase. |

These details are developed after discussions with [[User:mlforcada]] and other community members and may have slight variations during the implementation phase. |

||

We will use following example throughout this section: |

We will use following example throughout this section: |

||

| Line 62: | Line 68: | ||

==== Finding fuzzy match score ==== |

==== Finding fuzzy match score ==== |

||

To find |

To find how much the given input source sentences (S and S') are similar to each other, we'd use the fuzzy match score of S and S'. |

||

We would use the following method for finding the the fuzzy match score (FMS) between S and S': |

We would use the following method for finding the the fuzzy match score (FMS) between S and S': |

||

| Line 68: | Line 74: | ||

FMS(S, S') = 1 - ED(S, S') / max(|S|, |S'|) |

FMS(S, S') = 1 - ED(S, S') / max(|S|, |S'|) |

||

ED(S, S') is the edit distance between S and S'. We would employ Levenshtein Distance for sentence for calculating the edit distance. |

Here ED(S, S') is the edit distance between S and S'. We would employ Levenshtein Distance for sentence for calculating the edit distance. |

||

If only the value of FMS > min-fms(specified by user, default 80%), the program will proceed. |

If only the value of FMS(S, S') >= min-fms(specified by user, default 80%), the program will proceed. |

||

'''In our example''': |

'''In our example''': |

||

| Line 82: | Line 88: | ||

Since the fms is large enough we'll proceed further. |

Since the fms is large enough we'll proceed further. |

||

Please check the [[#Coding_Challenge|coding challenge]], to check in detail |

Please check the [[#Coding_Challenge|coding challenge]], to check in detail, how ED is calculated. |

||

==== Finding what changed from S to S' ==== |

==== Finding what changed from S to S' ==== |

||

To find out the changes between S and S', we would employ the phrase-extraction algorithm |

To find out the changes between S and S', we would employ the '''phrase-extraction algorithm''' with slight modification to obtain pairs (s, s') where s and s' are sub-segments of S and S' respectively and there is some non-alignment them. We'd call the covering set as set '''A'''. |

||

The modification would be made only to consider those |

The modification would be made only to consider those pairs which have one or more mis-match (or non-alignment) and satisfy following condition: |

||

min-len <= |s|,|s'| <= max-len, (min-len, max-len being specified by the user). |

min-len <= |s|,|s'| <= max-len, (min-len, max-len being specified by the user). |

||

| Line 96: | Line 102: | ||

Pairs [(1, 1), (2, 2), (3, 3), (5, 5)] are same (or aligned) in S and S', |

Pairs [(1, 1), (2, 2), (3, 3), (5, 5)] are same (or aligned) in S and S', |

||

i.e., their longest common sequence will contain words present at index i of S and index j of S' for each pair (i,j). |

|||

Though the default phrase-extraction algorithm [implemented in the [[#Coding_Challenge|coding challenge]]] will give more pairs, we'll only consider those pairs which satisfy |

Though the default phrase-extraction algorithm [implemented in the [[#Coding_Challenge|coding challenge]]] will give more pairs, we'll only consider those pairs which satisfy the conditions given above. |

||

Say, if min-len=2 and max-len=3, then our set '''A''' will be: |

|||

[("changed his", "changed his number"), |

[("changed his", "changed his number"), |

||

| Line 116: | Line 124: | ||

==== Translating what changed from S to S' ==== |

==== Translating what changed from S to S' ==== |

||

To translate the changes from source to target language, we'd be using the clippings that we created in above section, as in (s, s') pairs. |

|||

To consider the context of translation, we'd be using double validation, i.e., would be considering those pairs (s, t) which have following properties: |

To consider the context of translation, we'd be using double validation, i.e., would be considering those pairs (s, t) which have following properties: |

||

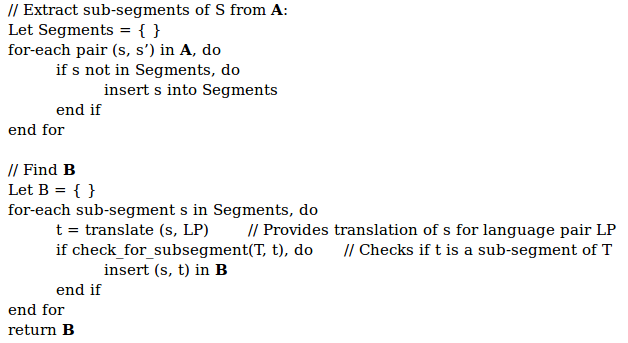

s is a sub-segment of S, s contains some mismatch in S and S', t is a sub-segment of T and s translates to t. We'd call covering set as set '''B'''. |

s is a sub-segment of S, s contains some mismatch in S and S', t is a sub-segment of T and s translates to t. We'd call covering set as set '''B'''. |

||

In addition to the pair (s,t), we'd also have to store the location to which t belong, because t may have multiple appearances in T, which could be problematic in upcoming sections. |

|||

This we'd be doing using following '''Algorithm''': |

This we'd be doing using following '''Algorithm''': |

||

| Line 129: | Line 139: | ||

The set '''B''' would be: |

The set '''B''' would be: |

||

[("changed his address", "canviar la seva adreça"), |

[("changed his address", "canviar la seva adreça", [2,5]), |

||

("his address", "la seva adreça"), |

("his address", "la seva adreça", [3,5]), |

||

("his address recently", "la seva adreça recentment"), |

("his address recently", "la seva adreça recentment", [3,6]), |

||

("address recently", "adreça recentment")] |

("address recently", "adreça recentment", [5,6])] |

||

The 3rd indices mark the positions of t in T. |

|||

| ⚫ | |||

| ⚫ | We would have an "-r" option [see [[#Coding_Challenge|coding challenge]] for details] that could be used to find more pairs. This would be done by extracting sub-segments (t) from T and finding their translations (s), they would be added to above pairs, as (s, t) if the translation (s) will be a sub-segment in S as well. |

||

==== Translating changes in S' ==== |

==== Translating changes in S' ==== |

||

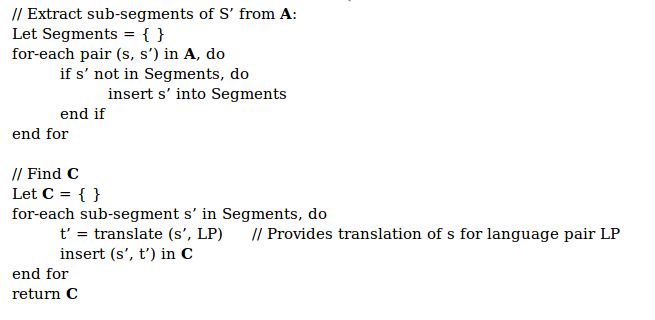

We'd use Apertium python API (developed in the [[#Coding_Challenge|Coding challenge]] to obtain pairs (s', t'). These pairs would have following properties: s' is a sub-segment of S', s' carries some variation (between S and S') and s' translates t'. We'd call the covering set as set '''C'''. |

We'd use Apertium python API (developed in the [[#Coding_Challenge|Coding challenge]]) to obtain pairs (s', t'). These pairs would have following properties: s' is a sub-segment of S', s' carries some variation (between S and S') and s' translates t'. We'd call the covering set as set '''C'''. |

||

A better option of doing this could be processing of Translation memory for given language pair, which we'd employ in the second phase of this project. Please see [[#Preprocessing|preprocessing]] for detail. |

|||

We'd use following '''Algorithm''' to find '''C''': |

We'd use following '''Algorithm''' to find '''C''': |

||

| Line 163: | Line 175: | ||

("number recently", "número recentment")] |

("number recently", "número recentment")] |

||

As stated |

As stated above we can use "-r" option to increase chances to getting more pairs. |

||

==== Obtaining repair pairs ==== |

==== Obtaining repair pairs ==== |

||

| Line 189: | Line 201: | ||

As the number of of such pairs could be large so we'd employ some post processing technique to decrease their numbers (like removing subsets, removing single words, etc.). These pairs would be our repair operations, using which we'd try to obtain T'. |

As the number of of such pairs could be large so we'd employ some post processing technique to decrease their numbers (like removing subsets, removing single words, etc.). These pairs would be our repair operations, using which we'd try to obtain T'. |

||

==== |

==== Alternative Method ==== |

||

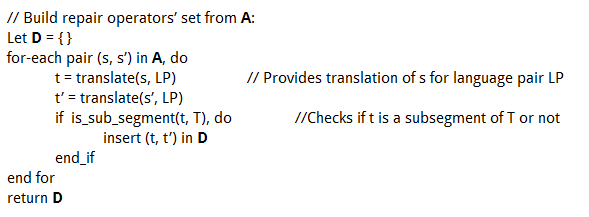

Instead of finding '''B''', '''C''', '''D''' independently, we can combine these algorithms to obtain our repair repairs. This method is likely to be more efficient than calculating those sets independently. |

|||

This combined algorithm would look like this: |

|||

| ⚫ | |||

[[File:AlgoCombined.png]] |

|||

As the number of of such pairs would be very large (much more than those obtained in above section), so we'd employ post processing techniques to remove the unwanted pairs. |

|||

For this we could employ a number of techniques like removing subsets, removing single words, etc. |

|||

'''Please Note''': The independent algorithms given in above sections are mostly for explanation purposes and most probably we'd use a more robust version of this algorithm during implementation. |

|||

==== Patching and Obtaining T' ==== |

|||

| ⚫ | |||

Now the point worth noting is that even after the post processing '''D''' may still have multiple repair pair. By choosing or leaving any such pair, we could have 2^n ways to patch for "n" candidate repair operators. Our aim is cover all mismatches, and cover them in the best way possible (or abort if there isn't any). |

|||

To cover this, we'd employ a strategy, outlined as follow: |

|||

(A) Keep track of where the path is to inserted and which parts would it cover. |

|||

(B) Apply 2 or more patches on same T if the values of ts in them either have no overlap or a permissible overlap. |

|||

(C) Try to keep possible T' candidates (ie., |T*| ) minimum. |

|||

After applying these patches, we'll receive a set of candidate T's. |

|||

We have represented these candidates independently as T'(i) and '''T*''' represents set of all these candidates. |

|||

'''In our Example''': |

'''In our Example''': |

||

| Line 202: | Line 241: | ||

Luckily, in this example we only received a single translation after applying all the repair operations. Though, this will not be the case in most of the other examples. |

Luckily, in this example we only received a single translation after applying all the repair operations. Though, this will not be the case in most of the other examples. |

||

| ⚫ | |||

Let's assume that we have set of all such T'(i) by T*. |

|||

| ⚫ | |||

To generalize this, we'd have to develop a repair policy, which based on parameters like context coverage, repair lengths, and other parameters could provide us the best translation value T'. |

To generalize this, we'd have to develop a repair policy, which based on parameters like context coverage, repair lengths, and other parameters could provide us the best translation value T'. |

||

We could do this by making use of machine learning by learning from an example set (in which we'd have T' given) by calculating values of FMS(T'', T'), where T'' is an element of T* and chose best repair pairs and then to generalize the same for other unknown sentences S'. |

We could do this by making use of machine learning by learning from an example set (in which we'd have T' given) by calculating values of FMS(T'', T'), where T'' is an element of '''T*''' and chose best repair pairs and then to generalize the same for other unknown sentences S'. |

||

==== Preprocessing ==== |

==== Preprocessing ==== |

||

| Line 235: | Line 272: | ||

P.S.: Feedback are always welcome. |

P.S.: Feedback are always welcome. |

||

== Final API |

== Final API == |

||

As the project |

As the project allows you to use any scripting language I'll be using Python. |

||

The main program would have nearly following API (might change slightly during implementation): |

The main program would have nearly following API (might change slightly during implementation): |

||

'''apertium-repair.py''' S S' T LP [--min-fms (default 80%)] [--min-len (default 3)] [--max-len (default 3)] [--min-context (default 20%)] |

'''apertium-repair.py''' S S' T LP [--min-fms (default 80%)] [--min-len (default 3)] [--max-len (default 3)] [--min-context (default 20%)] |

||

[-r] [-s] [-h] [-d Directory] |

[-r] [-s] [-h] [-d Directory] [-m memory.tmx] |

||

positional arguments: |

positional arguments: |

||

| Line 259: | Line 296: | ||

-d D Specify the language-pair installation directory |

-d D Specify the language-pair installation directory |

||

-m mem.tmx use translation memory (mem.tmx) |

|||

-r Check for pairs reversibly as well |

-r Check for pairs reversibly as well |

||

| Line 266: | Line 305: | ||

--min-fms Minimum Fuzzy match score required to process (default value: 80%) |

--min-fms Minimum Fuzzy match score required to process (default value: 80%) |

||

--min-len Minimum length of the sub-segments (default value: |

--min-len Minimum length of the sub-segments (default value: 2) |

||

--max-len Maximum length of the sub-segments (default value: |

--max-len Maximum length of the sub-segments (default value: max(|S|,|S'|) ) |

||

--min-context Minimum context the sub-segments must have (default value: 0.2 (or 20%)) |

--min-context Minimum context the sub-segments must have (default value: 0.2 (or 20%)) |

||

--allow-plugs Allows user to patch the sentence without context as well. |

|||

--safe-patches Safe patches only (having context on both sides). |

|||

== Time line of the Project == |

== Time line of the Project == |

||

| Line 287: | Line 330: | ||

* Week 1: Improving Phrase extraction Algorithm |

* Week 1: Improving Phrase extraction Algorithm |

||

| ⚫ | |||

::: describe the improvements in more detail. I assume you mean pairs (s,s') --[[User:Mlforcada|Mlforcada]] ([[User talk:Mlforcada|talk]]) 19:09, 27 April 2014 (CEST) |

|||

* Week 3: Developing Repair operations generator |

|||

| ⚫ | |||

* Week 3: Candidate T' generator |

|||

::: Currently the approach uses a series of loops to find relevant string pairs, but I think they could be interleaved as in the algorithm I sent. This could be a nice subject for discussion before week 1 --[[User:Mlforcada|Mlforcada]] ([[User talk:Mlforcada|talk]]) 19:09, 27 April 2014 (CEST) |

|||

* Week 4: Testing and Code clean up |

* Week 4: Testing and Code clean up |

||

* Deliverable #1: Repair Operations generator |

* Deliverable #1: Repair Operations generator |

||

| Line 295: | Line 344: | ||

* Week 8: Testing and Code clean up |

* Week 8: Testing and Code clean up |

||

Deliverable #2: Fuzzy match repairer |

Deliverable #2: Fuzzy match repairer |

||

* Week 9: Preprocessing (How to store, etc). |

* Week 9: [[#Preprocessing|Preprocessing]] (How to store, etc). |

||

* Week 10: Using preprocessing in the repairer. |

* Week 10: Using preprocessing in the repairer. |

||

* Week 11: Testing with some existing Translation Memory and pending work (or code clean-up if none). |

* Week 11: Testing with some existing Translation Memory and pending work (or code clean-up if none). |

||

| Line 321: | Line 370: | ||

No, I don't have any other engagement for the Summer and would be more than happy to devote 30+ hours every week for this project. |

No, I don't have any other engagement for the Summer and would be more than happy to devote 30+ hours every week for this project. |

||

[[Category:GSoC 2014 Student proposals|Pankajksharma]] |

|||

Latest revision as of 08:31, 2 June 2016

Personal Information[edit]

Name: Pankaj Kumar Sharma

E-mail address: sharmapankaj1992 (at) gmail (dot) com

Other information that may be useful to contact:

My alternative email: pankaj (at) pankajksharma (dot) com

IRC: pankajksharma

Interest in MT and Apertium[edit]

Why is it you are interested in machine translation?

I am interested in Machine Translation (MT) because of two reasons. The first one is little Philosophical one, i.e., the ideology of making all the digital information present, openly available to everyone regardless of the language in which it's written or the language used by it's recipients. Also advancement in MT would cause in reducing the language barrier in the exchange process of ideas.

Second, I did my minor in Text Classification and since then become interested in Machine Learning and took me closer to NLP (a part of MT), this made me interested in MT. To be honest and I had only used MT only as an end-user until recently when I became aware of Apertium.

Why is it that they are interested in the Apertium project?

I am interested in Apertium because:

- It's open source.

- It's extremely helping community (experienced this from my interaction during project discussion).

- All the technique used in Apertium are provided as research papers (so anyone could learn from them).

- Apertium works Offline as well (:P).

Proposal[edit]

Title[edit]

Fuzzy-match repair from Translation Memory

Description[edit]

For a given sentence S in a source language and it's translation T in another language, the idea is to find the translation (T') of another sentence S'. The condition that S and S' must hold is that S and S' must have high Fuzzy-match score (or Low Edit Distance) between them. Depending upon what changes from S to S', we employ a set of repair operations (t, t') on T to get our T'. Here T' is a possible translation of S' and the pairs (t, t') (also, called repair operations here) holds the condition that t is a sub-segment of T and t' is the possible change which leads us to T'.

In the second phase of the project we'd preprocess an existing translation memory corresponding to the source and target language pair and store validated (s,t) pairs (here s is a sub-segment of S, t is a sub-segment of T and s translates to t). These pairs could be used for generating better and verified (s', t') pairs (here s' is a sub-sequence of S' and s' translates to t').

For details please see Project Details section.

This idea was originally given by User:mlforcada.

Project Details[edit]

These details are developed after discussions with User:mlforcada and other community members and may have slight variations during the implementation phase.

We will use following example throughout this section:

S "he changed his address recently"

T "Va canviar la seva adreça recentment"

S' "he changed his number recently"

LP "en-ca" (English-Catalan)

The project has been further divided into following phases (sub-tasks):

Finding fuzzy match score[edit]

To find how much the given input source sentences (S and S') are similar to each other, we'd use the fuzzy match score of S and S'.

We would use the following method for finding the the fuzzy match score (FMS) between S and S':

FMS(S, S') = 1 - ED(S, S') / max(|S|, |S'|)

Here ED(S, S') is the edit distance between S and S'. We would employ Levenshtein Distance for sentence for calculating the edit distance.

If only the value of FMS(S, S') >= min-fms(specified by user, default 80%), the program will proceed.

In our example:

ED(S,S') = 1 (since only "address" and "number" differ).

max(|S|, |S'|) = 5

Hence, FMS(S,S') = 0.80 (or 80%).

Since the fms is large enough we'll proceed further.

Please check the coding challenge, to check in detail, how ED is calculated.

Finding what changed from S to S'[edit]

To find out the changes between S and S', we would employ the phrase-extraction algorithm with slight modification to obtain pairs (s, s') where s and s' are sub-segments of S and S' respectively and there is some non-alignment them. We'd call the covering set as set A.

The modification would be made only to consider those pairs which have one or more mis-match (or non-alignment) and satisfy following condition:

min-len <= |s|,|s'| <= max-len, (min-len, max-len being specified by the user).

In our example:

Pairs [(1, 1), (2, 2), (3, 3), (5, 5)] are same (or aligned) in S and S',

i.e., their longest common sequence will contain words present at index i of S and index j of S' for each pair (i,j).

Though the default phrase-extraction algorithm [implemented in the coding challenge] will give more pairs, we'll only consider those pairs which satisfy the conditions given above.

Say, if min-len=2 and max-len=3, then our set A will be:

[("changed his", "changed his number"),

("changed his address", "changed his"),

("changed his address", "changed his number"),

("his address", "his number"),

("his address recently", "his number recently"),

("address recently", "recently"),

("address recently", "number recently"), ...]

Translating what changed from S to S'[edit]

To translate the changes from source to target language, we'd be using the clippings that we created in above section, as in (s, s') pairs.

To consider the context of translation, we'd be using double validation, i.e., would be considering those pairs (s, t) which have following properties: s is a sub-segment of S, s contains some mismatch in S and S', t is a sub-segment of T and s translates to t. We'd call covering set as set B.

In addition to the pair (s,t), we'd also have to store the location to which t belong, because t may have multiple appearances in T, which could be problematic in upcoming sections.

This we'd be doing using following Algorithm:

In our example:

The set B would be:

[("changed his address", "canviar la seva adreça", [2,5]),

("his address", "la seva adreça", [3,5]),

("his address recently", "la seva adreça recentment", [3,6]),

("address recently", "adreça recentment", [5,6])]

The 3rd indices mark the positions of t in T.

We would have an "-r" option [see coding challenge for details] that could be used to find more pairs. This would be done by extracting sub-segments (t) from T and finding their translations (s), they would be added to above pairs, as (s, t) if the translation (s) will be a sub-segment in S as well.

Translating changes in S'[edit]

We'd use Apertium python API (developed in the Coding challenge) to obtain pairs (s', t'). These pairs would have following properties: s' is a sub-segment of S', s' carries some variation (between S and S') and s' translates t'. We'd call the covering set as set C.

A better option of doing this could be processing of Translation memory for given language pair, which we'd employ in the second phase of this project. Please see preprocessing for detail.

We'd use following Algorithm to find C:

In our Example:

The set C would be:

[("changed his number", "canviar el seu número"),

("changed his", "canviar el seu"),

("his number", "el seu número"),

("his number recently", "el seu número recentment"),

("number recently", "número recentment")]

As stated above we can use "-r" option to increase chances to getting more pairs.

Obtaining repair pairs[edit]

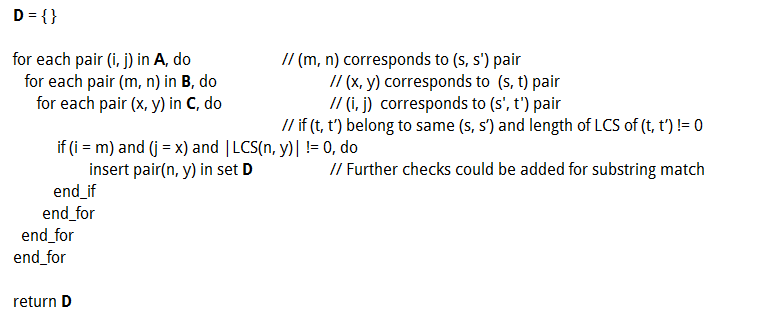

Using sets A, B and C, we'd find pairs (t, t'). Let's call the covering set of such element be D.

To find the covering sets all such pairs, we'd use following Algorithm:

In our Example:

The set D would be:

[("canviar la seva adreça", "canviar el seu número"),

("la seva adreça", "el seu número"),

("la seva adreça recentment", "el seu número recentment"),

("adreça recentment", "número recentment"), ...]

Please note that above algorithm is very naive, we'll improve this by using HashMap data-structures and other improvements.

As the number of of such pairs could be large so we'd employ some post processing technique to decrease their numbers (like removing subsets, removing single words, etc.). These pairs would be our repair operations, using which we'd try to obtain T'.

Alternative Method[edit]

Instead of finding B, C, D independently, we can combine these algorithms to obtain our repair repairs. This method is likely to be more efficient than calculating those sets independently.

This combined algorithm would look like this:

As the number of of such pairs would be very large (much more than those obtained in above section), so we'd employ post processing techniques to remove the unwanted pairs.

For this we could employ a number of techniques like removing subsets, removing single words, etc.

Please Note: The independent algorithms given in above sections are mostly for explanation purposes and most probably we'd use a more robust version of this algorithm during implementation.

Patching and Obtaining T'[edit]

After we have obtained our set D, we can use the repair operations (t, t') on T (or path T) to obtain possible T'.

Now the point worth noting is that even after the post processing D may still have multiple repair pair. By choosing or leaving any such pair, we could have 2^n ways to patch for "n" candidate repair operators. Our aim is cover all mismatches, and cover them in the best way possible (or abort if there isn't any).

To cover this, we'd employ a strategy, outlined as follow:

(A) Keep track of where the path is to inserted and which parts would it cover.

(B) Apply 2 or more patches on same T if the values of ts in them either have no overlap or a permissible overlap.

(C) Try to keep possible T' candidates (ie., |T*| ) minimum.

After applying these patches, we'll receive a set of candidate T's.

We have represented these candidates independently as T'(i) and T* represents set of all these candidates.

In our Example:

T'(1) = "Va canviar el seu número recentment" T'(2) = "Va canviar el seu número recentment" T'(3) = "Va canviar el seu número recentment" T'(4) = "Va canviar la seva número recentment"

Luckily, in this example we only received a single translation after applying all the repair operations. Though, this will not be the case in most of the other examples.

Out of all the values of T*, we must choose the value of T'.

To generalize this, we'd have to develop a repair policy, which based on parameters like context coverage, repair lengths, and other parameters could provide us the best translation value T'. We could do this by making use of machine learning by learning from an example set (in which we'd have T' given) by calculating values of FMS(T, T'), where T is an element of T* and chose best repair pairs and then to generalize the same for other unknown sentences S'.

Preprocessing[edit]

After the basic framework is being prepared, we could preprocess an existing translation memory (i.e., large set of (S, T)) using coding challenge work to get and index a large set of (s,t) pairs that are "doubly validated": on the one hand, t is the MT of s (or s is the MT of t), but on the other hand, they'd have been observed in your translation memory. In the future, they could be used as "higher quality" (s',t') pairs used to build "better" patches for new sentences.

Coding Challenge[edit]

As suggested by User:mlforcada, I did my coding challenge work and it was really helpful in my understanding of Apertium and also in understanding this whole project. You can find the code at https://github.com/pankajksharma/py-apertium

Currently it has following main programs:

apertium.py

This was the objective of the challenge and it takes source sentence S, target sentence T, language pair LP and provides set of pairs (s,t) such that s is a sub-segment of S, t is sub-segment of T and s translates to t.

fms.py

It takes two sentences S and S' and calculates the fuzzy match score of those sentences (in percentage)

pairs.py

It takes two sentences S and S' and using phase extraction algorithm, provides pairs (s, s') with consideration to the LCS of two sentences.

For more about options and other parameters, please check above github link.

P.S.: Feedback are always welcome.

Final API[edit]

As the project allows you to use any scripting language I'll be using Python.

The main program would have nearly following API (might change slightly during implementation):

apertium-repair.py S S' T LP [--min-fms (default 80%)] [--min-len (default 3)] [--max-len (default 3)] [--min-context (default 20%)] [-r] [-s] [-h] [-d Directory] [-m memory.tmx]

positional arguments:

S Source Language Sentence

T Target Language Sentence

S' Second Source Language Sentence

LP Language Pair (for example 'en-eo')

optional arguments:

-h, --help shows help message and exit

-d D Specify the language-pair installation directory

-m mem.tmx use translation memory (mem.tmx)

-r Check for pairs reversibly as well

-s Ignore single words

--min-fms Minimum Fuzzy match score required to process (default value: 80%)

--min-len Minimum length of the sub-segments (default value: 2)

--max-len Maximum length of the sub-segments (default value: max(|S|,|S'|) )

--min-context Minimum context the sub-segments must have (default value: 0.2 (or 20%))

--allow-plugs Allows user to patch the sentence without context as well.

--safe-patches Safe patches only (having context on both sides).

Time line of the Project[edit]

We'd use following schedule for executing this process:

Community Interaction Period: I would employ this interval for interacting with Apertium community and project mentors. Apart from this I'd be reading all the existing work that has been done and required algorithms.

What's been done til now ?

- FMS calculator

- Source-Target sub-segments generator

- Phrase extraction Algorithm [basic, need changes]

Remaining Plan:

- Week 1: Improving Phrase extraction Algorithm

- Week 2: Developing Repair operations generator

- Week 3: Candidate T' generator

- Week 4: Testing and Code clean up

- Deliverable #1: Repair Operations generator

- Week 5-6: Leaning from examples to develop an heuristic based repairing algorithm

- Week 7: Testing above algorithm

- Week 8: Testing and Code clean up

Deliverable #2: Fuzzy match repairer

- Week 9: Preprocessing (How to store, etc).

- Week 10: Using preprocessing in the repairer.

- Week 11: Testing with some existing Translation Memory and pending work (or code clean-up if none).

- Week 12: Code clean up and Documentation (most of that would be along the coding phase).

Project completed

My skills and qualifications[edit]

Currently I'm a final year graduate student of Computer Science from Jamia Millia Islamia.

I did my Minor project (of 14 credits in our course work)under the supervision of Prof. M M S Beg on the topic of Text Mining. Code for the same could be found here.

I co-authored a paper titled "Frequent Sequential Patterns based Probabilistic Model for Effective Classification of Web Documents" (currently under review) on the same project.

I'm quite proficient in Python and have done several projects in it. A list of projects and scripts have I've written in Python could be found here.

I've limited experience of programming with open source communities. Some of that are:

I've submitted some patches for PTS project of Debain (for example this).

I've also written some plugins for wordpress (could be found here).

Apart from this, I'm a core member of our college's Linux User Group and we promote Linux and open source software via various meetups held time-to-time.

Non-Summer-of-Code plans[edit]

No, I don't have any other engagement for the Summer and would be more than happy to devote 30+ hours every week for this project.