Difference between revisions of "User:Wei2912"

Revision as of 08:41, 2 December 2014

My name is Wei En and I'm currently a GCI student. My blog is at http://wei2912.github.io.

I decided to help out at Apertium because I find the work here quite interesting and I believe Apertium will benefit many.

The following are projects related to Apertium.

Wiktionary Crawler

https://github.com/wei2912/WiktionaryCrawler is a crawler for Wiktionary which aims to extract data from pages. It was created for a GCI task which you can read about at Task ideas for Google Code-in/Scrape inflection information from Wiktionary.

The crawler crawls a starting category (usually Category:XXX language) for subcategories, then crawls these subcategories for pages. It then passes each page to language-specific parsers, which turn it into the Speling format.
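The traversal described above can be sketched roughly as follows. This is not the code from the WiktionaryCrawler repository: `crawl_category`, `list_subcategories` and `list_pages` are hypothetical names, and the two callbacks stand in for whatever actually queries Wiktionary. The sketch also collects pages from the starting category itself, which is an assumption.

```python
from collections import deque


def crawl_category(start, list_subcategories, list_pages):
    """Collect page titles from a category and all of its subcategories.

    list_subcategories and list_pages are hypothetical callbacks that
    return the subcategory names / page titles of a given category.
    """
    seen = {start}
    queue = deque([start])
    pages = []
    while queue:
        category = queue.popleft()
        for title in list_pages(category):
            if title not in pages:
                pages.append(title)
        for sub in list_subcategories(category):
            if sub not in seen:  # categories may reference each other
                seen.add(sub)
                queue.append(sub)
    return pages


# Toy in-memory data standing in for Wiktionary (made-up page titles).
SUBCATS = {"Category:Lao language": ["Category:Lao nouns"], "Category:Lao nouns": []}
PAGES = {"Category:Lao language": [], "Category:Lao nouns": ["page1", "page2"]}

titles = crawl_category(
    "Category:Lao language",
    lambda c: SUBCATS.get(c, []),
    lambda c: PAGES.get(c, []),
)
```

Each collected title would then be handed to the parser for its language.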

The current languages supported are Chinese (zh), Thai (th) and Lao (lo). You are welcome to contribute to this project.

Spaceless Segmentation

Spaceless Segmentation has been merged into Apertium under https://svn.code.sf.net/p/apertium/svn/branches/tokenisation. It serves to tokenize languages without any whitespace. More information can be found under Task ideas for Google Code-in/Tokenisation for spaceless orthographies.

The tokeniser looks for possible tokenisations in the corpus text and selects the tokenisation whose tokens appear most often in the corpus.
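A minimal sketch of this idea is shown below. It is not the code in the tokenisation branch: the function names are hypothetical, and scoring a candidate by the average corpus count of its tokens is an assumption, since the exact scoring is not described here.

```python
def tokenisations(text, counts):
    """Return every way to split text into tokens that occur in counts."""
    if not text:
        return [[]]
    results = []
    for end in range(1, len(text) + 1):
        head = text[:end]
        if head in counts:
            for rest in tokenisations(text[end:], counts):
                results.append([head] + rest)
    return results


def best_tokenisation(text, counts):
    """Pick the candidate whose tokens are, on average, most frequent."""
    if not text:
        return []
    candidates = tokenisations(text, counts)
    if not candidates:
        return [text]  # nothing matched: leave the text unsplit
    # Assumed score: mean corpus count per token.
    return max(candidates, key=lambda toks: sum(counts[t] for t in toks) / len(toks))


# Made-up token counts, as if gathered from a corpus.
counts = {"ab": 5, "a": 1, "b": 1, "c": 3}
best = best_tokenisation("abc", counts)
```

Enumerating every split is exponential in the worst case, so this form is only suitable for short inputs.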

A report comparing the above method, LRLM and RLLM (longest left to right matching and longest right to left matching respectively) is available at https://www.dropbox.com/sh/57wtof3gbcbsl7c/AABI-Mcw2E-c942BXxsMbEAja
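For reference, the two baseline methods can be sketched as greedy longest-match loops. The function names and the single-character fallback for unknown text are assumptions made for this illustration, not details taken from the report.

```python
def lrlm(text, vocab):
    """Longest match, scanning left to right."""
    tokens = []
    start = 0
    while start < len(text):
        # Try the longest candidate first; fall back to one character.
        for end in range(len(text), start, -1):
            if text[start:end] in vocab or end == start + 1:
                tokens.append(text[start:end])
                start = end
                break
    return tokens


def rllm(text, vocab):
    """Longest match, scanning right to left."""
    tokens = []
    end = len(text)
    while end > 0:
        # Try the longest candidate first; fall back to one character.
        for start in range(0, end):
            if text[start:end] in vocab or start == end - 1:
                tokens.append(text[start:end])
                end = start
                break
    tokens.reverse()
    return tokens


# Made-up vocabulary on which the two directions disagree.
vocab = {"ab", "abc", "cd", "d"}
left = lrlm("abcd", vocab)
right = rllm("abcd", vocab)
```

With this vocabulary, `left` is `["abc", "d"]` and `right` is `["ab", "cd"]`, which shows how the scanning direction alone can change the result.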

Conversion of PDF dictionary to lttoolbox format

NOTE: This document is a draft.

In this example we're converting the following PDF file: http://home.uchicago.edu/straughn/sakhadic.pdf

We copy the text directly from the PDF file, as PDF to text converters are currently unable to convert the text properly (thanks to the PDF format).

Here's a small sample:

аа exc. Oh! See!
ааҕыс= v. to reckon with
аайы a. each, every; күн аайы every day
аак cf аах n. document, paper; аах= v. to read
аал n. ship, barge, float, buoy

As we can see, words on the same line are separated by "; ". Hence, we can replace "; " with "\n" to get a list of words separated by newlines.
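In Python, for instance, this replacement is a one-liner. The function name `split_entries` is made up for this illustration; the input line comes from the sample above.

```python
def split_entries(text):
    """Put every "; "-separated item on its own line."""
    return text.replace("; ", "\n")


line = "аайы a. each, every; күн аайы every day"
result = split_entries(line)
```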

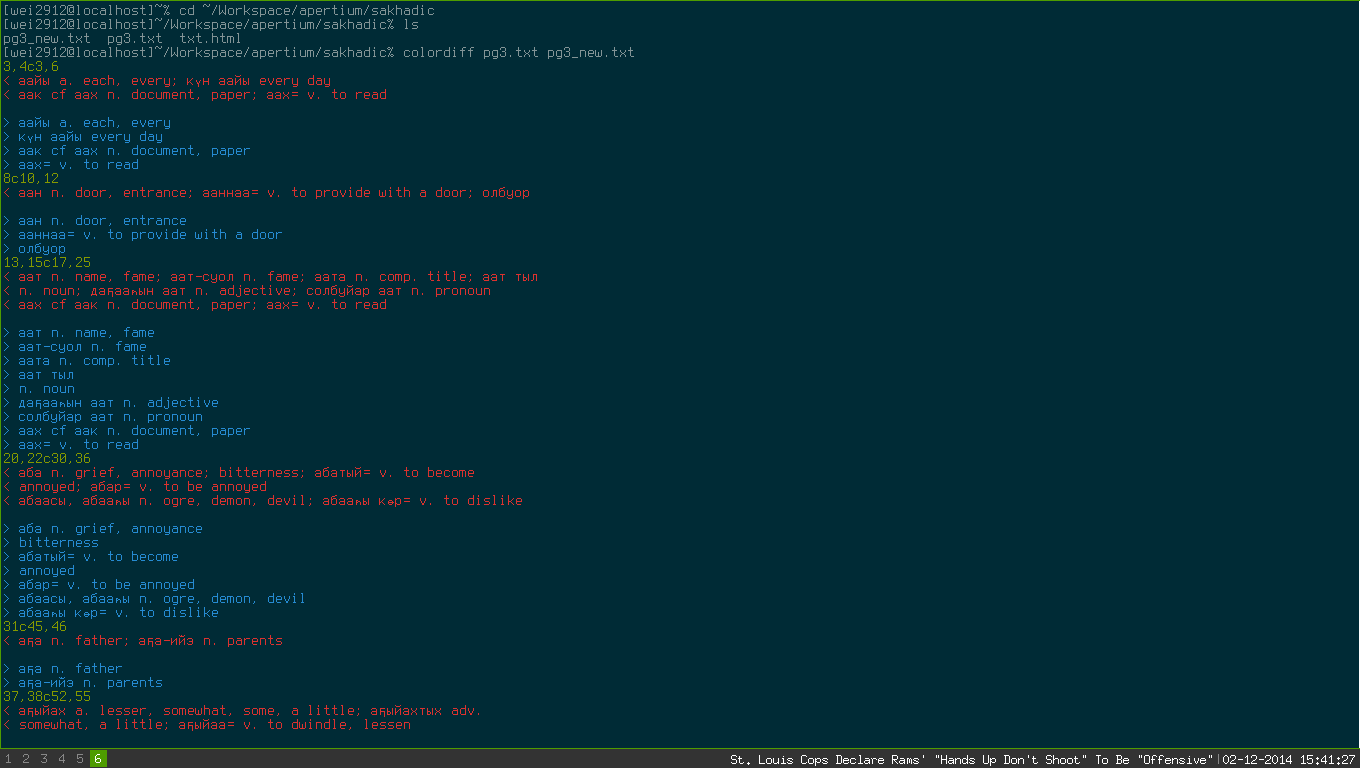

A diff reveals the following image:

Unfortunately for us, definitions may be separated by "; " too. Hence, we'll need to merge these lines back together and replace the original semicolons with commas. Some definitions also spill over onto the next line, which we need to fix as well. At the same time, we can remove the equals signs (they appear to indicate the end of verbs, but this notation is not required in lttoolbox format).
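One possible heuristic for the merging step is sketched below. It assumes that a piece starts a new entry only if one of its first two tokens is a known abbreviation; the `ABBREVS` set (limited to the abbreviations seen in the sample), the function names and the example line are all made up for this illustration. Definitions that wrap onto the next line are not handled here.

```python
ABBREVS = {"a.", "n.", "v.", "exc."}  # assumption: only those seen in the sample


def starts_entry(piece):
    """Guess whether a piece begins a new entry rather than a definition."""
    return any(token in ABBREVS for token in piece.split()[:2])


def merge_definitions(pieces):
    """Re-attach stray definitions to the entry before them, using commas."""
    merged = []
    for piece in pieces:
        if merged and not starts_entry(piece):
            merged[-1] += ", " + piece
        else:
            merged.append(piece)
    return merged


def strip_equals(entry):
    """Drop the equals signs, which lttoolbox does not need."""
    return entry.replace("=", "")


# Made-up line: two definitions separated by "; ", then a verb entry.
pieces = "foo n. first sense; second sense; bar= v. to do".split("; ")
entries = [strip_equals(e) for e in merge_definitions(pieces)]
```

Phrases without an abbreviation, such as "күн аайы every day" in the sample, would also be merged into the preceding entry by this heuristic, so the output still needs a manual check.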

Also, some words belong to more than one part of speech. To handle this, we copy the original word over to create a new entry. This:

албас a. cunning; n. trick, ruse

becomes

албас a. cunning
албас n. trick, ruse

The good part about this is that they're also separated by "; " and will be placed on a new line, so it's easy to spot the lines where we need to handle this.
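Since such lines start with an abbreviation instead of a word, copying the word over can be automated. The sketch below assumes a fixed `ABBREVS` set (only the abbreviations seen in the sample) and a hypothetical function name `copy_headwords`.

```python
ABBREVS = {"a.", "n.", "v.", "exc."}  # assumption: only those seen in the sample


def copy_headwords(lines):
    """Prefix lines that start with an abbreviation with the previous word."""
    result = []
    headword = None
    for line in lines:
        first = line.split(" ", 1)[0]
        if first in ABBREVS and headword is not None:
            line = headword + " " + line
        else:
            headword = first  # remember the word for following lines
        result.append(line)
    return result


lines = "албас a. cunning; n. trick, ruse".split("; ")
fixed = copy_headwords(lines)
```

Here `fixed` holds the two entries shown above, one per part of speech.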

Some words may also share the same translation. In that case, we still create two entries, one for each word.

In the process, we also remove any "cf" tags, as they are not required. Finally, we remove anything within square brackets, which are just translations to other languages, as well as anything within round brackets, which are just annotations.
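These removals can be sketched with regular expressions. The sketch assumes that a "cf" tag is the bare token "cf" (optionally followed by a full stop) and that brackets are never nested; `clean_entry` and the example entry are made up for this illustration.

```python
import re


def clean_entry(entry):
    """Strip bracketed text and "cf" tags from one entry."""
    entry = re.sub(r"\[[^\]]*\]", "", entry)  # [translations to other languages]
    entry = re.sub(r"\([^)]*\)", "", entry)   # (annotations)
    entry = re.sub(r"\bcf\b\.?", "", entry)   # assumed form of a "cf" tag
    entry = re.sub(r"\s+,", ",", entry)       # no space left before a comma
    return re.sub(r"\s{2,}", " ", entry).strip()


# Made-up entry containing both kinds of brackets.
cleaned = clean_entry("word n. thing [Russ. вещь] (colloq.), item")
```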

Each entry should look like this after the processing:

word abbrv1. abbrv2. definition1, definition2, definition3, definition4

Note that abbreviations end with a full stop and the definitions are separated by commas.
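Given this layout, an entry can be taken apart again with a few lines of Python. This is only a sketch: `parse_entry` is a hypothetical helper, it expects a non-empty entry, and it would misread a definition whose first word ends with a full stop. Generating the lttoolbox XML from the parsed parts is not shown.

```python
def parse_entry(entry):
    """Split a processed entry into (word, abbreviations, definitions)."""
    tokens = entry.split()
    word, rest = tokens[0], tokens[1:]
    abbreviations = []
    # Leading tokens that end with a full stop are abbreviations.
    while rest and rest[0].endswith("."):
        abbreviations.append(rest.pop(0))
    definitions = [d.strip() for d in " ".join(rest).split(",") if d.strip()]
    return word, abbreviations, definitions


parsed = parse_entry("аал n. ship, barge, float, buoy")
```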