User:Mihirrege/GSOC 2013 Application - Interface for creating tagged corpora


Revision as of 11:47, 3 May 2013

Contents

- 1 Name

- 2 Contact information

- 3 Why are you interested in machine translation?

- 4 Why are you interested in the Apertium project?

- 5 Why Google and Apertium should sponsor it?

- 6 How and who it will benefit in society?

- 7 Which of the published tasks are you interested in? What do you plan to do?

- 8 Work plan

- 9 List your skills and give evidence of your qualifications

- 10 My non-Summer-of-Code plans for the Summer

- 11 Links

Name

Mihir Rege

Contact information

E-mail: mihirrege@gmail.com

IRC: mihirrege, geremih

SourceForge account: mihirrege

Why are you interested in machine translation?

Machine translation is one of the main applications of Natural Language Processing, a field of Computer Science that I find particularly fascinating. Coming from a country with rich linguistic diversity, I believe that machine translation can play an important role in reducing language barriers so that every language can have its place in the world.

Why are you interested in the Apertium project?

Coming from a country with rich linguistic diversity, I admire the emphasis that Apertium puts on marginalized languages and languages with fewer speakers. Also, this is the first time I have come across shallow-transfer machine translation, which I find very interesting. Hence, I am interested in the Apertium project and look forward to contributing to it.

Why Google and Apertium should sponsor it?

Statistical tagging methods have improved the performance of taggers. A major difficulty with this approach is acquiring previously tagged corpora for the statistical training. To obtain correctly tagged corpora, hand tagging is necessary, either to tag each token directly or to disambiguate the tags produced by processing the corpus with an existing morphological analyser. Manually assigning tags is a demanding task and requires hours of dedicated effort from linguistically skilled people. Also, freely available tagged corpora, especially in Apertium format, are very hard to find.

How and who it will benefit in society?

Manually assigning tags to every token of a corpus with several million words is a highly demanding task in terms of resources, since it requires the continued effort of many linguistically skilled people over a very long period of time. By accelerating this task, the limited resources at hand can be put to better use, leading to faster development of language pairs and better-quality taggers.

Which of the published tasks are you interested in? What do you plan to do?

I am interested in building a tool that eases the process of hand tagging, especially for large corpora, and that aids related tasks such as improving tagger definition files, incorporating constraint grammar rules and evaluating the performance of taggers.

The proposed tool consists of three major interfaces:

- Manual disambiguator

- .prob evaluator

- .tsx file editor

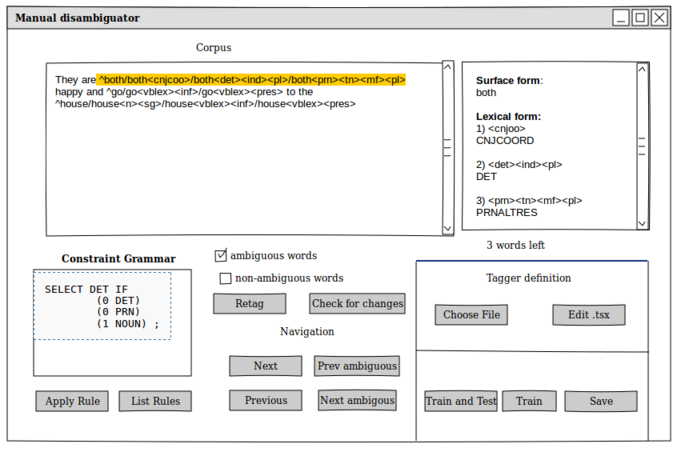

Manual disambiguator

Summary

This interface allows a corpus to be disambiguated with minimal effort: ambiguous words are highlighted, and information about the chosen lexical form is shown in a parallel window. Constraint grammar rules can be compiled and applied directly to the corpus, and are saved for further reference. If a tagger definition (.tsx) file is provided, it can be edited, and information such as coarse tags and forbid definitions can be displayed. As tagging a large corpus is a discontinuous process, resume support is provided by saving the current configuration in a save state. Changes made to the morphological analyser in the meantime may leave the corpus misaligned with it. Therefore, changes in the morphological analyser are reported, and parts of the corpus can be selectively reanalysed, which also accounts for newly added multiwords.
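The resume support and the reporting of analyser changes could be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions: the project description file is assumed to be JSON, the morphological analyser is assumed to be a single compiled file whose SHA-256 digest is stored in the save state, and the names `file_digest`, `save_state` and `load_state` are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def save_state(state_path, corpus_path, analyser_path, tsx_path, position):
    """Write a hypothetical project description file (JSON) for resuming later."""
    state = {
        "corpus": str(corpus_path),
        "analyser": str(analyser_path),
        # Fingerprint of the analyser, used to detect later changes.
        "analyser_sha256": file_digest(analyser_path),
        "tsx": str(tsx_path) if tsx_path else None,
        "position": position,  # index of the lexical unit the user was on
    }
    Path(state_path).write_text(json.dumps(state, indent=2), encoding="utf-8")


def load_state(state_path):
    """Load the project file and report whether the analyser has changed."""
    state = json.loads(Path(state_path).read_text(encoding="utf-8"))
    changed = file_digest(state["analyser"]) != state["analyser_sha256"]
    return state, changed
```

On resume, a `True` change flag would be the cue to offer selective reanalysis of the affected parts of the corpus.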

Mockup

Functions:

- Jump to the next ambiguous lexical unit or an adjacent lexical unit using the keyboard or mouse.

- A quick view, bound to a key, that hides the tags and shows the raw text

- If the .tsx file is provided, information such as the applicable coarse tags and forbid and enforce rules can also be displayed.

- Show statistics of disambiguation

- Compile and apply constraint grammar rules to the buffer

- List the applied constraint grammar rules

- Train and test the tagger (a prompt will ask which part of the corpus to use as testing data).

- Train the tagger and export the .prob file

- Save progress (this will save the corpus and also create a project description file that keeps track of the morphological analyser and .tsx files used, so that it is easier to resume tagging)

- The interface will be keyboard-centric, though it will be equally functional with a mouse.

- Default keymaps will be provided and the bindings can be changed to suit the user

For example:

[P] - <previous-ambiguous>

[N] - <next-ambiguous>

[F] - <forward-word>

[B] - <back-word>

[1], [2], [3], [4] for choosing the correct lexical form.
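As an illustration of the navigation and the default keymap, here is a hypothetical Python sketch, not the tool's actual design. It assumes the corpus is held in the Apertium stream format (`^surface/analysis1/analysis2$`), ignores escaped characters and superblanks, and invents the names `LexicalUnit`, `parse_stream`, `next_ambiguous`, `previous_ambiguous` and `handle_key`.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# One lexical unit in the stream: ^surface/analysis1/analysis2$
# (escaped characters and superblanks are ignored in this sketch)
LU_RE = re.compile(r"\^(.*?)\$")


@dataclass
class LexicalUnit:
    surface: str
    analyses: List[str]
    chosen: Optional[int] = None  # index of the analysis picked by the user

    @property
    def ambiguous(self) -> bool:
        return len(self.analyses) > 1


def parse_stream(text: str) -> List[LexicalUnit]:
    """Split analyser output into lexical units."""
    units = []
    for match in LU_RE.finditer(text):
        surface, *analyses = match.group(1).split("/")
        units.append(LexicalUnit(surface, analyses))
    return units


def next_ambiguous(units, pos):
    """Index of the next ambiguous unit after pos, or pos if there is none."""
    for i in range(pos + 1, len(units)):
        if units[i].ambiguous:
            return i
    return pos


def previous_ambiguous(units, pos):
    """Index of the previous ambiguous unit before pos, or pos if there is none."""
    for i in range(pos - 1, -1, -1):
        if units[i].ambiguous:
            return i
    return pos


# User-editable mapping from keys to command names.
DEFAULT_KEYMAP = {
    "N": "next-ambiguous",
    "P": "previous-ambiguous",
    "F": "forward-word",
    "B": "back-word",
}


def handle_key(key, units, pos, keymap=DEFAULT_KEYMAP):
    """Dispatch a key press and return the new cursor position.

    Digit keys choose an analysis (1-based) of the current unit.
    """
    command = keymap.get(key)
    if command == "next-ambiguous":
        return next_ambiguous(units, pos)
    if command == "previous-ambiguous":
        return previous_ambiguous(units, pos)
    if command == "forward-word":
        return min(pos + 1, len(units) - 1)
    if command == "back-word":
        return max(pos - 1, 0)
    if key.isdigit():
        choice = int(key) - 1
        if 0 <= choice < len(units[pos].analyses):
            units[pos].chosen = choice
    return pos
```

Keeping the keymap as a plain dictionary from keys to command names is what would let users rebind keys without touching the dispatch logic.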

Evaluating the tagger

Functions

- The trained tagger can be evaluated immediately, with an option to set aside x% of the corpus as testing data.

- Alternatively, it can be evaluated with the .prob evaluator on an unrelated corpus.
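Setting aside x% of the corpus could be as simple as the sketch below, which holds out the final contiguous portion so that the testing data is running text the tagger has not been trained on. The function name `split_corpus` is hypothetical, and splitting on sentences rather than tokens is an assumption.

```python
def split_corpus(sentences, test_percent):
    """Return (training, testing), holding out the last test_percent% of sentences."""
    if not 0 <= test_percent <= 100:
        raise ValueError("test_percent must be between 0 and 100")
    # Number of sentences kept for training.
    cut = len(sentences) - round(len(sentences) * test_percent / 100)
    return sentences[:cut], sentences[cut:]
```

A random sample would also work, but a contiguous block avoids testing on sentences whose immediate neighbours were seen during training.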

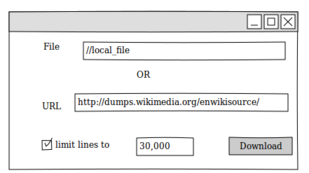

Loading the corpus

The available options are:

- Load a raw-text file, morphological analyser and .tsx file (optional)

- Continue on an existing project

- Pull a wiki-dump and use it as the corpus

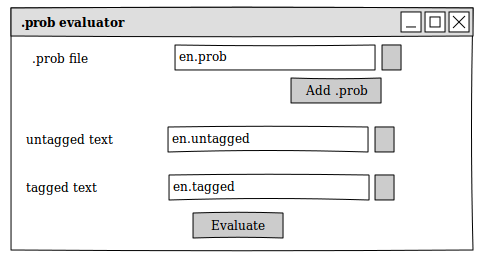

.prob evaluator

Summary: Assists the evaluation and comparison of taggers

Mockup:

Functions:

- Input the .prob file, the manually disambiguated corpus, and either the morphologically analysed corpus or the morphological analyser for the language.

- Evaluate the .prob file and display statistics about tagger accuracy

- Multiple .prob files can be provided to generate a comparison between the taggers.

- Generate a log file containing the diff between the provided tagged corpus and the corpus disambiguated by the tagger. This makes it easier to write new sentences to add to the corpus, giving the tagger more context.
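The accuracy statistics, the comparison and the log could be computed along the lines of this minimal sketch. It assumes the hand-tagged corpus and the tagger output have already been aligned token by token (one chosen analysis per token), and that the analyser output tells us which tokens were ambiguous. The names `evaluate`, `compare` and `write_log` and the tab-separated log layout are hypothetical; running the tagger with a given .prob file is not shown.

```python
def evaluate(gold, predicted, ambiguous):
    """Compare hand-tagged analyses with tagger output, token by token.

    gold, predicted: lists of chosen analyses, aligned by token.
    ambiguous: list of bools, True where the analyser gave more than one analysis.
    Returns (overall accuracy, accuracy on ambiguous tokens, mismatches).
    """
    if not len(gold) == len(predicted) == len(ambiguous):
        raise ValueError("gold, predicted and ambiguous must be aligned")
    errors = [(i, g, p) for i, (g, p) in enumerate(zip(gold, predicted)) if g != p]
    n_ambiguous = sum(ambiguous)
    ambiguous_errors = sum(1 for i, _, _ in errors if ambiguous[i])
    overall = (1 - len(errors) / len(gold)) if gold else 0.0
    on_ambiguous = (1 - ambiguous_errors / n_ambiguous) if n_ambiguous else 0.0
    return overall, on_ambiguous, errors


def compare(gold, outputs, ambiguous):
    """Rank several taggers' outputs ({name: analyses}) by ambiguous-word accuracy."""
    scores = {name: evaluate(gold, pred, ambiguous)[1] for name, pred in outputs.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def write_log(errors, path):
    """Write one line per mismatch: token index, gold analysis, tagger analysis."""
    with open(path, "w", encoding="utf-8") as f:
        for i, g, p in errors:
            f.write(f"{i}\t- {g}\t+ {p}\n")
```

Accuracy on ambiguous tokens is reported separately because unambiguous tokens are always tagged correctly and would otherwise inflate the overall figure.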

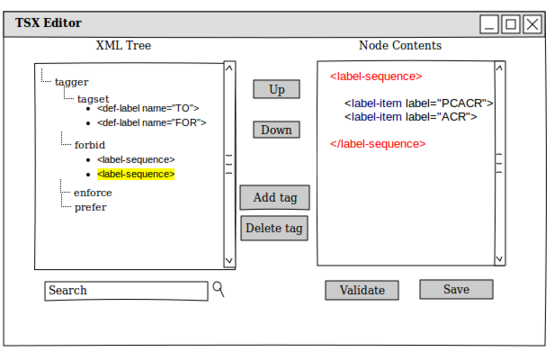

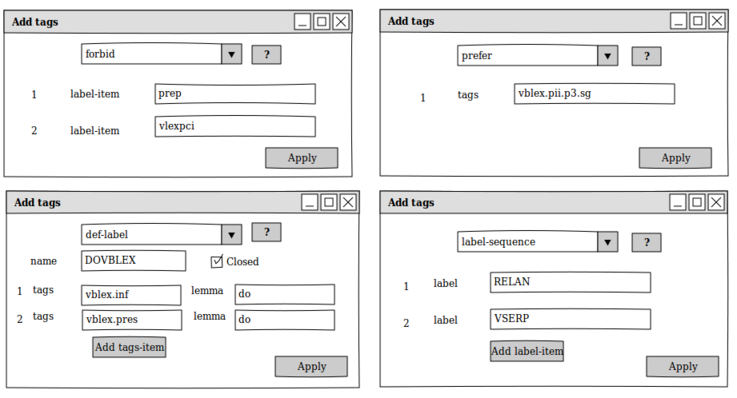

.tsx file editor

Summary: The tagger definition file specifies how to group fine-grained tags into more general coarse tags, and also specifies the restrictions and preference rules to be applied. The major points taken into consideration are adding new tags and editing existing ones (aided by templates), reordering the tags (as more specific categories must be defined before more general ones), editing the XML manually and validating the tagger definition.
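The ordering rule could be checked mechanically before saving. The sketch below is a hypothetical helper, not part of any existing Apertium tool: it reads `def-label`/`tags-item` elements in document order and flags an item when an earlier item with no lemma (or the same lemma) already matches its tag pattern. It treats `*` like a shell wildcard via `fnmatch`, which is a simplification of how the tagger matches tags, and it ignores `def-mult`.

```python
import fnmatch
import xml.etree.ElementTree as ET


def tag_patterns(tsx_text):
    """Collect (label, tags, lemma) for every tags-item, in document order."""
    root = ET.fromstring(tsx_text)
    items = []
    for label in root.iter("def-label"):
        for item in label.iter("tags-item"):
            items.append((label.get("name"), item.get("tags", ""), item.get("lemma")))
    return items


def shadowed_items(items):
    """Return (label, tags, earlier_label, earlier_tags) for items that an
    earlier, more general item already covers (a likely ordering mistake)."""
    warnings = []
    for j, (label_j, tags_j, lemma_j) in enumerate(items):
        for label_i, tags_i, lemma_i in items[:j]:
            lemma_covers = lemma_i is None or lemma_i == lemma_j
            if lemma_covers and fnmatch.fnmatchcase(tags_j, tags_i):
                warnings.append((label_j, tags_j, label_i, tags_i))
                break
    return warnings
```

In the editor, each warning could point the user at the two nodes so that the more specific one can be dragged above the general one.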

Mockup:

TSX Viewer

Templates

Functions:

- Add new tags

- categories

- multi-categories

- forbid

- enforce

- prefer


- Templates for adding new tags

- Each tag in the template has associated help text giving more information about that tag

- Change the order of the tags (as more specific categories must be defined before more general ones) within the same parent tag. The nodes in the XML viewer can also be made draggable within the same parent node to make it easier to change the order.

- Search within tags for faster navigation.

- Validate the tagger definition

- Editor features such as syntax highlighting, auto-indentation and tag completion in the Node Contents text view, for complex in-place manual editing.
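Validation could start with simple consistency checks. This hypothetical `validate_tsx` sketch checks that the XML is well-formed, that every `def-label` and `def-mult` has a unique name, and that every `label-item` and `enforce-after` refers to a defined label. The element names follow the tagger definition format as I understand it and should be checked against the official DTD; a full implementation would also validate against the DTD itself.

```python
import xml.etree.ElementTree as ET


def validate_tsx(tsx_text):
    """Return a list of human-readable problems found in a tagger definition."""
    try:
        root = ET.fromstring(tsx_text)
    except ET.ParseError as err:
        return [f"XML is not well-formed: {err}"]
    problems = []
    defined = set()
    # First pass: collect label names and report missing or duplicate ones.
    for elem in root.iter():
        if elem.tag in ("def-label", "def-mult"):
            name = elem.get("name")
            if not name:
                problems.append(f"<{elem.tag}> without a name")
            elif name in defined:
                problems.append(f"label {name!r} defined more than once")
            else:
                defined.add(name)
    # Second pass: every reference must point at a defined label.
    for elem in root.iter():
        if elem.tag in ("label-item", "enforce-after"):
            ref = elem.get("label")
            if ref not in defined:
                problems.append(f"<{elem.tag}> refers to undefined label {ref!r}")
    return problems
```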

Basis of the interface

- A keyboard-centric design that can be extended with custom keybindings.

- The user drives the interface, not the reverse: every operation is undoable, and prompts to the user are kept to a minimum.

- Do not lose data: the tagged corpus buffer will be backed up so that no work is lost in case of any failure.
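The undo and backup principles could be sketched as follows. A hypothetical `UndoStack` records each tag choice so that it can be undone and redone, and a hypothetical `backup` writes the buffer to a temporary file before replacing the previous backup with `os.replace`, which is atomic on POSIX systems, so a crash mid-write leaves the old backup intact. Storing the choices as a JSON list is an assumption.

```python
import json
import os


class UndoStack:
    """Minimal undo/redo for tag choices stored in a list (hypothetical design)."""

    def __init__(self):
        self._undo = []  # (index, old value, new value)
        self._redo = []

    def choose(self, choices, index, value):
        """Set choices[index] = value, remembering the previous value."""
        self._undo.append((index, choices[index], value))
        choices[index] = value
        self._redo.clear()  # a new action invalidates the redo history

    def undo(self, choices):
        if not self._undo:
            return False
        index, old, new = self._undo.pop()
        choices[index] = old
        self._redo.append((index, old, new))
        return True

    def redo(self, choices):
        if not self._redo:
            return False
        index, old, new = self._redo.pop()
        choices[index] = new
        self._undo.append((index, old, new))
        return True


def backup(choices, path):
    """Write the buffer to a temporary file, then replace the backup in one step."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        json.dump(choices, f)
        f.flush()
        os.fsync(f.fileno())  # make sure the data is on disk before renaming
    os.replace(tmp, path)
```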

Work plan

Coding challenge

I have completed the coding challenge using en-ca as my language pair. To tag the corpus manually, I wrote a small elisp script [1]. I have pushed the manually tagged corpus and the outputs generated while training the taggers to a GitHub repository [2].

Week Plan

I will be working about 30-40 hours per week. The code will be documented along the way.

| Week | Interface | Tasks |
|---|---|---|
| Week 1-2 | Manual Disambiguator | Input corpus, tokenization, implement navigation, manual tagging |
| Week 3-5 | Manual Disambiguator | Accounting for changes in dictionary, applying constraint grammar rules |
| Deliverable #1 | | |
| Week 6-7 | Manual Disambiguator | Buffer undo, saving states, customizable keymaps |
| Week 8-9 | .tsx editor | Build XML tree, implement draggable nodes, add templates, implement editor |
| Deliverable #2 | | |
| Week 10-11 | .prob evaluator | Input corpus, evaluate accuracy, generate logs |
| Deliverable #3 | | |
| Week 12 | | Suggested "pencils down" date: scrub code, write documentation, etc. |

List your skills and give evidence of your qualifications

I'm currently a second-year student pursuing a Bachelor's degree in Computer Science and Engineering at the Indian Institute of Technology, Kharagpur. I have intermediate proficiency in Python. I am self-taught: I have completed MIT's online lectures on Python and read a few books and online documentation. One of my first Python projects involved NLP: the problem was to identify users based on their IRC chat logs [3]. I also did a fun hack at a hackathon in February: a Chrome extension that cleans up bad spelling, excessive punctuation and bad grammar on webpages [4]. I have made some toy apps using GTK+ and am confident that I can learn any more advanced topics required on my own. I have completed the Machine Learning course (by Andrew Ng) on Coursera and am currently following the Natural Language Processing course (by Dan Jurafsky and Christopher Manning).

Other languages known:

C, Java, C++, Lisp (Emacs Lisp and Scheme) in order of proficiency.

Although most of the software I have developed has been free software, my experience with community-driven development has been minimal. However, as a user of open-source software, I have read extensively about open-source development and would like to become a contributor.

My non-Summer-of-Code plans for the Summer

I will be staying with my brother in the United States from June 13 to July 9. I still expect to have a minimum of 25-30 hours per week for GSoC during that period.