Difference between revisions of "User:Gang Chen/GSoC 2013 Progress"

(23 intermediate revisions by 2 users not shown)


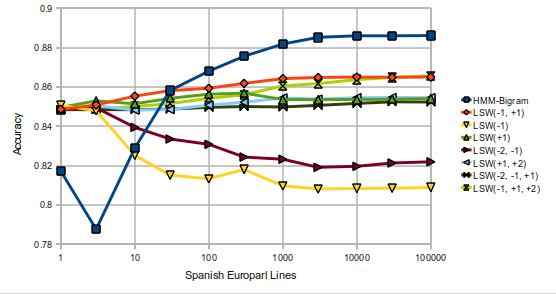

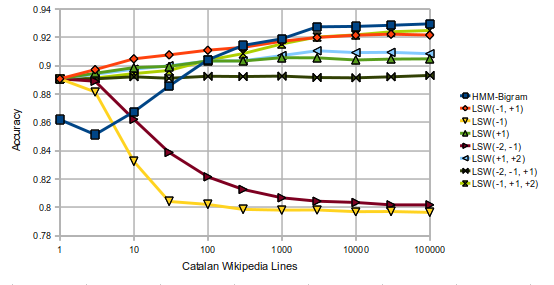

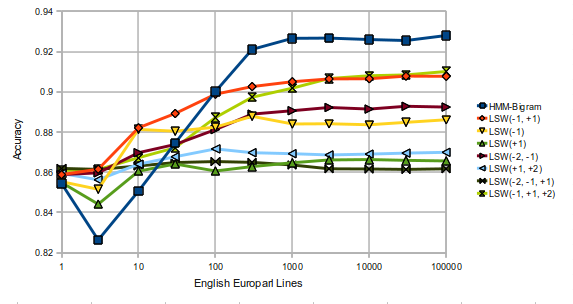

==== 3. Experimental Results ====

===== 3.2 System Precision =====

| es-europarl-lines | HMM(order 1) | LSW(-1, +1) | LSW(-1, +1, +2) | LSW(+1) | LSW(+1, +2) | LSW(-2, -1, +1) | LSW(-2, -1) | LSW(-1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.8194 | 0.8508 | 0.848465443901 | 0.849608928326 | 0.848465443901 | 0.8507 | 0.848556922655 | 0.850615194621 |
| 10 | 0.8311 | 0.8576 | 0.850066322097 | 0.85171293967 | 0.848419704524 | 0.8507 | 0.839454786626 | 0.82522984037 |
| 30 | 0.8605 | 0.8596 | 0.851438503408 | 0.854960435439 | 0.849060055802 | 0.8509 | 0.833097013219 | 0.815395874308 |
| 100 | 0.8703 | 0.8615 | 0.854182866029 | 0.857293143667 | 0.851575721539 | 0.8517 | 0.830261171843 | 0.813383341719 |
| 300 | 0.8778 | 0.8652 | 0.856286877373 | 0.857842016192 | 0.852719205964 | 0.8512 | 0.82445227096 | 0.818231715684 |
| 1000 | 0.8841 | 0.8677 | 0.859900288158 | 0.854777477931 | 0.854960435439 | 0.8516 | 0.823720440928 | 0.809998627819 |
| 3000 | 0.8875 | 0.8685 | 0.861638384485 | 0.854685999177 | 0.854548781046 | 0.8525 | 0.81937520011 | 0.808397749623 |
| 10000 | 0.8883 | 0.8688 | 0.864245528976 | 0.854823217308 | 0.855280611078 | 0.8516 | 0.819969812011 | 0.808306270869 |
| 30000 | 0.8883 | 0.8686 | 0.864565704615 | 0.854960435439 | 0.855280611078 | 0.8524 | 0.82152495083 | 0.808763664639 |
| 100000 | 0.8884 | 0.8687 | 0.865754928418 | 0.855372089832 | 0.855189132324 | 0.8543 | 0.821204775191 | 0.80890088277 |
| 300000 | 0.8659 | | | | | | | |
| 1000000 | 0.8667 | | | | | | | |

| ca-wiki-lines | HMM | LSW(-1, +1) | LSW(-1, +1, +2) | LSW(+1, +2) | LSW(+1) | LSW(-2, -1, +1) | LSW(-2, -1) | LSW(-1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.82379762896 | 0.852186669839 | 0.852821061814 | 0.852821061814 | 0.852186669839 | 0.852186669839 | 0.852186669839 | 0.852186669839 |
| 10 | 0.830101899211 | 0.866539788272 | 0.856548114666 | 0.859680425043 | 0.861662899964 | 0.853574402284 | 0.823757979462 | 0.794139804131 |
| 30 | 0.848419967487 | 0.870108243131 | 0.85872883708 | 0.86201974545 | 0.863486776892 | 0.852583164823 | 0.803695333254 | 0.772848023473 |
| 100 | 0.865627849808 | 0.873993893977 | 0.865627849808 | 0.865429602316 | 0.866777685262 | 0.853693350779 | 0.78708219341 | 0.770350105071 |
| 300 | 0.875778121407 | 0.876333214385 | 0.869711748146 | 0.865271004322 | 0.867412077237 | 0.852265968835 | 0.780540026169 | 0.768565877642 |
| 1000 | 0.880417112724 | 0.881685896673 | 0.87569882241 | 0.869077356171 | 0.869553150153 | 0.852226319337 | 0.775623488363 | 0.768565877642 |
| 3000 | 0.888703857896 | 0.884738908053 | 0.881447999683 | 0.872447563538 | 0.869434201657 | 0.85190912335 | 0.773442765949 | 0.768684826137 |
| 10000 | 0.889258950874 | 0.886364537489 | 0.883470124103 | 0.871218429087 | 0.867887871218 | 0.850679988898 | 0.772887672971 | 0.767614289679 |
| 30000 | 0.889734744855 | 0.887316125451 | 0.886126640498 | 0.871377027081 | 0.868561912692 | 0.85139367987 | 0.771420641529 | 0.767733238175 |
| 100000 | 0.890963879307 | 0.886681733476 | 0.887038578962 | 0.870425439118 | 0.868720510686 | 0.851552277864 | 0.771301693034 | 0.7670988462 |
| 300000 | 0.8881 | | | | | | | |
| 1000000 | 0.8876 | | | | | | | |

| en-europarl-lines | HMM(order 1) | LSW(-1, +1) | LSW(-1, +1, +2) | LSW(+1) | LSW(+1, +2) | LSW(-2, -1, +1) | LSW(-2, -1) | LSW(-1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.8258 | 0.8268 | 0.8279 | 0.8306 | 0.828 | 0.8277 | 0.829 | 0.8293 |
| 10 | 0.8294 | 0.852 | 0.8353 | 0.8333 | 0.8324 | 0.8298 | 0.8399 | 0.861 |
| 30 | 0.8494 | 0.8622 | 0.8389 | 0.8379 | 0.8364 | 0.8297 | 0.8542 | 0.8566 |
| 100 | 0.8771 | 0.8742 | 0.856 | 0.8505 | 0.8427 | 0.83 | 0.8637 | 0.8648 |
| 300 | 0.8886 | 0.8807 | 0.8701 | 0.8488 | 0.8479 | 0.8304 | 0.8723 | 0.8708 |
| 1000 | 0.8948 | 0.8843 | 0.8791 | 0.8473 | 0.8512 | 0.8322 | 0.876 | 0.8722 |
| 3000 | 0.8942 | 0.8867 | 0.8849 | 0.8492 | 0.8525 | 0.8325 | 0.8784 | 0.8728 |
| 10000 | 0.8934 | 0.8874 | 0.8885 | 0.8493 | 0.8529 | 0.8319 | 0.8787 | 0.8764 |
| 30000 | 0.8929 | 0.8886 | 0.8893 | 0.8499 | 0.8538 | 0.8325 | 0.8791 | 0.8747 |
| 100000 | 0.8945 | 0.8887 | 0.8902 | 0.8496 | 0.8541 | 0.8329 | 0.8795 | 0.8749 |
| 300000 | 0.8906 | | | | | | | |
| 1000000 | 0.891 | | | | | | | |

| − | ===== 3.3 Graph ===== |

||

[[File:LSW_tagger_lines_vs_system-precision_Spanish.png]] |

[[File:LSW_tagger_lines_vs_system-precision_Spanish.png]] |

||

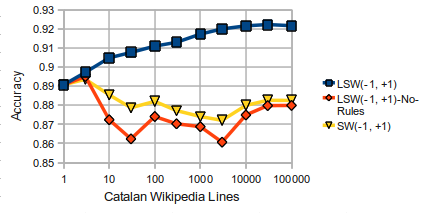

[[File:LSW_tagger_lines_vs_system-precision_Catalan.png]] |

[[File:LSW_tagger_lines_vs_system-precision_Catalan.png]] |

||

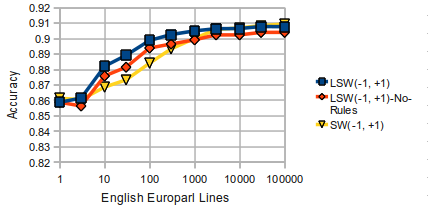

[[File:LSW_tagger_lines_vs_system-precision_English.png]] |

[[File:LSW_tagger_lines_vs_system-precision_English.png]] |

||

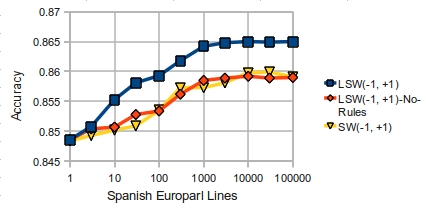

| − | ==== 4. |

+ | ==== 4. Rules and CG ==== |

| − | ===== 4.1 data ===== |

||

| − | '''es: HMM & LSW''' |

||

| − | <pre> |

||

| − | Lines HMM(order 1) HMM_No-Rules HMM_CG HMM_CG-Train | LSW(-1, +1) LSW(-1, +1)_No-Rules LSW(-1, +1)_CG LSW(-1, +1)_CG-Train |

||

| − | 1 0.8194 0.7462 0.8473 0.8173 | 0.8508 0.8486 0.8458 0.8486 |

||

| − | 10 0.8311 0.8243 0.8526 0.8425 | 0.8576 0.8507 0.8496 0.856 |

||

| − | 30 0.8605 0.8342 0.8587 0.8548 | 0.8596 0.8528 0.8523 0.8589 |

||

| − | 100 0.8703 0.8525 0.8631 0.8634 | 0.8615 0.8534 0.8549 0.8623 |

||

| − | 300 0.8778 0.8559 0.8659 0.871 | 0.8652 0.8562 0.8587 0.8671 |

||

| − | 1000 0.8841 0.8568 0.8687 0.8753 | 0.8677 0.8585 0.8608 0.8702 |

||

| − | 3000 0.8875 0.8606 0.8711 0.879 | 0.8685 0.8589 0.862 0.8717 |

||

| − | 10000 0.8883 0.8612 0.8712 0.8786 | 0.8688 0.8592 0.862 0.8721 |

||

| − | 30000 0.8883 0.862 0.8712 0.8783 | 0.8686 0.8589 0.8622 0.8728 |

||

| − | 100000 0.8884 0.8623 0.8712 0.8779 | 0.8687 0.859 0.8622 0.8728 |

||

| + | ===== 4.1 With or without Rules ===== |

||

| − | </pre> |

||

| + | [[File:Spanish_SW_LSW_LSW-noRules.png]] |

||

| + | [[File:Catalan_SW_LSW_LSW-noRules.png]] |

||

| + | [[File:English_SW_LSW_LSW-noRules.png]] |

||

| + | ===== 4.2 With or without CG ===== |

||

| − | '''ca: HMM & LSW''' |

||

| + | |||

| − | <pre> |

||

| + | * '''cgTrain''' means using CG for processing the training corpus (to reduce ambiguity). |

||

| − | Lines HMM HMM_No-Rules HMM_CG HMM_CG-Train | LSW(-1, +1) LSW(-1, +1)_No-Rules LSW(-1, +1)_CG LSW(-1, +1)_CG-Train |

||

| + | |||

| − | 1 0.8237 0.7954 0.863 0.8234 | 0.8521 0.8528 0.8492 0.8528 |

||

| + | * '''cgTag''' means using CG before the PoS tagger. |

||

| − | 10 0.8301 0.823 0.8519 0.832 | 0.8665 0.8345 0.8642 0.8675 |

||

| + | |||

| − | 30 0.8484 0.8303 0.8694 0.8568 | 0.8701 0.8245 0.8685 0.872 |

||

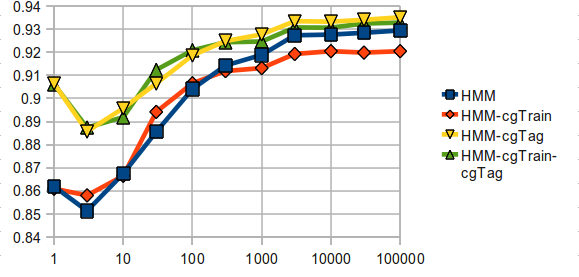

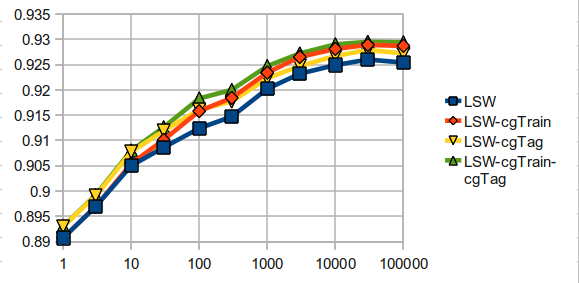

| + | ====== 4.2.1 Spanish ====== |

||

| − | 100 0.8656 0.8421 0.8775 0.8689 | 0.8739 0.8362 0.8743 0.8778 |

||

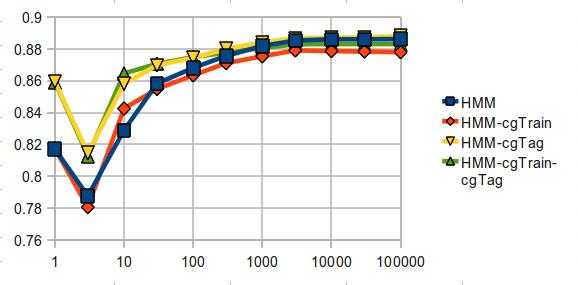

| + | [[File:ES-HMM-CG.png]] |

||

| − | 300 0.8757 0.8567 0.8808 0.8741 | 0.8763 0.8327 0.8762 0.8806 |

||

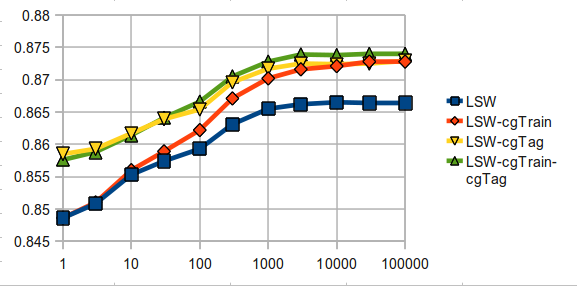

| + | [[File:ES-LSW-CG.png]] |

||

| − | 1000 0.8804 0.8642 0.8811 0.8753 | 0.8816 0.8312 0.8808 0.8855 |

||

| − | 3000 0.8887 0.8747 0.8868 0.8812 | 0.8847 0.823 0.8832 0.8885 |

||

| − | 10000 0.8892 0.8752 0.8869 0.8825 | 0.8863 0.8373 0.885 0.8903 |

||

| − | 30000 0.8897 0.8771 0.8887 0.8819 | 0.8873 0.8422 0.8855 0.8908 |

||

| − | 100000 0.8909 0.8785 0.8893 0.8827 | 0.8866 0.8422 0.8853 0.8906 |

||

| − | </pre> |

||

| − | ===== 4.2 |

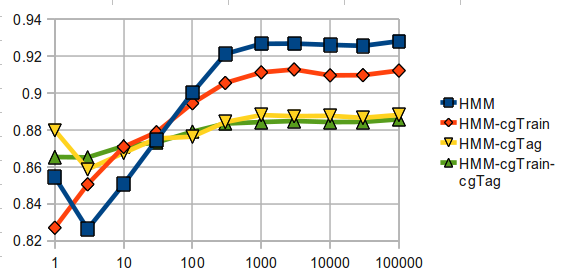

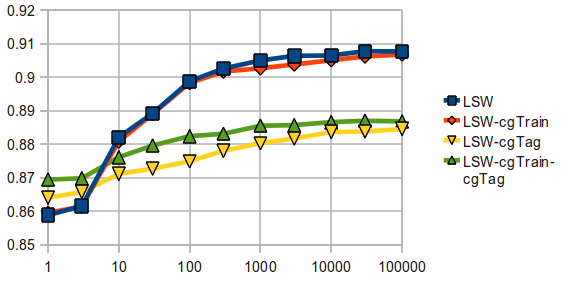

+ | ====== 4.2.2 Catalan ====== |

| − | [[File: |

+ | [[File:CA-HMM-CG.png]] |

| − | [[File: |

+ | [[File:CA-LSW-CG.png]] |

| + | ====== 4.2.3 English ====== |

||

| − | [[File:Ca_HMM_Rules_and_CG.png]] |

||

| − | [[File: |

+ | [[File:EN-HMM-CG.png]] |

| + | [[File:EN-LSW-CG.png]] |

||


Latest revision as of 08:43, 7 October 2013


GSOC 2013

I worked with Apertium during GSoC 2013 (2013.06-2013.09) on the project "Sliding Window Part of Speech Tagger for Apertium".

My proposal is here: Proposal (http://wiki.apertium.org/wiki/User:Gang_Chen/GSoC_2013_Application:_%22Sliding_Window_PoS_Tagger%22)

SVN repo

1. The tagger: https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium

2. en-es language pair (for experiments): https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es

3. es-ca language pair (for experiments): https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-es-ca

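Usage

Command line

HMM tagger usage:

    apertium-tagger -t 8 es.dic es.crp apertium-en-es.es.tsx es-en.prob.new
    apertium-tagger -g es-en.prob.new

LSW tagger usage:

    apertium-tagger -w -t 8 es.dic es.crp apertium-en-es.es.tsx es-en.prob.new
    apertium-tagger -w -g es-en.prob.new

Here -t 8 runs 8 iterations of unsupervised training on the corpus es.crp and writes the parameters to es-en.prob.new. Then -g tags input text with those parameters. Adding -w selects the LSW tagger instead of the default HMM tagger.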

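Tagging Experiments

1. Run the whole training and evaluation pipeline

To run the whole training and evaluation procedure, see these two scripts:

apertium-en-es/es_step1_preprocess.sh (https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es/es_step1_preprocess.sh)

apertium-en-es/es_step2_train_tag_eval.sh (https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es/es_step2_train_tag_eval.sh)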

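2. Evaluation scripts

The apertium-swpost/apertium-xx-yy package (e.g. apertium-en-es) contains two small scripts that do the evaluation. They are called by es_step2_train_tag_eval.sh:

apertium-en-es/es-tagger-data/extract_word_pos.py (https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es/es-tagger-data/extract_word_pos.py)

apertium-en-es/es-tagger-data/eval.py (https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es/es-tagger-data/eval.py)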
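The contents of extract_word_pos.py are not reproduced on this page. As an illustration only, the Python sketch below shows one way to pull (surface form, tags) pairs out of tagged Apertium stream output such as ^casa/casa<n><f><sg>$. It assumes unknown words are marked with a leading *, and it ignores escaped characters and superblanks. The function name mirrors the script's name, but the function is hypothetical and does not describe the real script.

```python
import re

# One lexical unit ^...$ of the Apertium stream format (simplified: no
# handling of escaped characters or [superblanks]).
LU_RE = re.compile(r"\^([^$]*)\$")
TAG_RE = re.compile(r"<[^>]+>")


def extract_word_pos(line):
    """Return (surface, tags) pairs for every lexical unit in `line`.

    `tags` is the tag string of the first analysis, e.g. "<n><f><sg>",
    or "*" for an unknown word.
    """
    pairs = []
    for lu in LU_RE.findall(line):
        if "/" in lu:
            surface, analyses = lu.split("/", 1)
        else:  # output without the surface form, e.g. ^casa<n><f><sg>$
            surface, analyses = "", lu
        first = analyses.split("/")[0]  # tagged output normally has one analysis
        if first.startswith("*"):
            pairs.append((surface, "*"))
        else:
            pairs.append((surface, "".join(TAG_RE.findall(first))))
    return pairs


if __name__ == "__main__":
    print(extract_word_pos("^La/el<det><def><f><sg>$ ^casa/casa<n><f><sg>$ ^xyz/*xyz$"))
```

Running it prints [('La', '<det><def><f><sg>'), ('casa', '<n><f><sg>'), ('xyz', '*')]. Aligned pairs like these are what a precision/recall comparison against the hand-tagged test set needs.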

3. Experimental Results

3.1 Language Pairs

| language | SVN | training | test set |
|---|---|---|---|
| es | apertium-swpost/apertium-en-es | Europarl Spanish | 1000+ lines, hand-tagged |
| ca | apertium-swpost/apertium-es-ca | Catalan Wikipedia | 1000+ lines, hand-tagged |
| en | apertium-swpost/apertium-en-es | Europarl English | 1000+ lines, 80% automatically mapped from the TnT tagger, 20% hand-tagged |

3.2 System Precision

4. Rules and CG

4.1 With or without Rules

4.2 With or without CG

- cgTrain: CG (Constraint Grammar) is applied to the training corpus before training, to reduce its ambiguity.
- cgTag: CG is applied to the input text before the PoS tagger.
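Running CG first reduces ambiguity, and a toy Python sketch can show how. It applies a single REMOVE-style constraint to per-token ambiguity classes. This is not vislcg3 syntax or Apertium's actual CG integration, and the tag names and the rule itself are hypothetical. With cgTag the tagger would see the reduced classes at tagging time; with cgTrain the same kind of reduction would be applied to the training corpus.

```python
def remove_verb_after_det(sentence):
    """Toy REMOVE-style constraint (hypothetical rule, not real CG syntax).

    `sentence` is a list of ambiguity classes, one list of candidate tags per
    token. A 'vblex' reading is removed when the previous token is
    unambiguously 'det', unless it is the token's only reading.
    """
    out = [list(readings) for readings in sentence]  # copy; do not mutate input
    for i in range(1, len(out)):
        if out[i - 1] == ["det"] and "vblex" in out[i] and len(out[i]) > 1:
            out[i].remove("vblex")
    return out


def ambiguity(sentence):
    """Average number of candidate tags per token."""
    return sum(len(r) for r in sentence) / len(sentence)


if __name__ == "__main__":
    before = [["det"], ["n", "vblex"], ["vblex", "n"]]
    after = remove_verb_after_det(before)
    print(after, ambiguity(before), ambiguity(after))
```

In the example, the second token loses its vblex reading, so the average number of candidate tags per token drops from 5/3 to 4/3 before any statistical tagging happens.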

4.2.1 Spanish

4.2.2 Catalan

4.2.3 English

Week Plan and Progress

| week | date | plans | progress |
|---|---|---|---|
| Week -2 | 06.01-06.08 | Community Bonding | (1) Evaluation scripts (1st version) working for recall, precision and F1-score. (2) SW tagger (1st version) working. |
| Week -1 | 06.09-06.16 | Community Bonding | (1) LSW tagger (1st version) working, without rules. |
| Week 01 | 06.17-06.23 | Implement the unsupervised version of the algorithm. | (1) LSW tagger working, without rules. (2) Check for and fix bugs. |
| Week 02 | 06.24-06.30 | Implement the unsupervised version of the algorithm. | (1) LSW tagger working, with rules. (2) Update evaluation scripts for unknown words. |
| Week 03 | 07.01-07.07 | Store the probability data in a clever way, so that it can be read and edited using linguistic knowledge. Test using linguistic editing. | (1) Restructure tagger-data using inheritance. (2) Experiment on "How does the number of iterations affect tagger performance?" (Conclusion: usually fewer than 8.) (3) Extract a large corpus from Wikipedia for Spanish and Catalan for text-amount experiments. |
| Week 04 | 07.08-07.14 | Make tests, check for bugs, and documentation. | (1) Refine LSW tagger code for efficiency. (2) Experiment on "How does the amount of text affect tagger performance?" (Conclusion: usually 1000+ lines are enough.) (3) Make stability tests. |
| Deliverable #1 | A SWPoST that works with probabilities. | | |
| Week 05 | 07.15-07.21 | Implement FORBID restrictions using LSW, with the same options and TSX file as the HMM tagger. | (1) Refine LSW tagger code for efficiency. (2) Implement the LSW tagger with different window sizes for Catalan and Spanish, and experiment with them using different amounts of text. (Conclusion: window -1,+1 works best.) |
| Week 06 | 07.22-07.28 | Implement FORBID restrictions using LSW, with the same options and TSX file as the HMM tagger. | (1) Study the TnT tagger to prepare English tagged text. (2) Map tags between the TnT tagger and the LSW tagger: 6000 of the 8000 ambiguous words were mapped automatically. |
| Week 07 | 07.29-08.04 | Implement ENFORCE restrictions using LSW, with the same options and TSX file as the HMM tagger. | (1) Hand-tag the 2000 ambiguous words that were not mapped automatically. |
| Week 08 | 08.05-08.11 | Make tests, check for bugs, and documentation. | (1) Experiment with different window settings and text amounts on English. (Conclusion: performance increases in all settings, unlike Spanish and Catalan, where it decreases in some settings.) (2) Experiment with randomized training text for Spanish. (Conclusion: results are very similar.) (3) Further study window -1,+1,+2. (Very little improvement, at the cost of many more parameters and higher memory usage.) |
| Deliverable #2 | A SWPoST that works with FORBID and ENFORCE restrictions. | | |
| Week 09 | 08.12-08.18 | Implement the minimized FST version. | (1) Experiment with the HMM and LSW taggers without rules. (2) Learn about CG. |
| Week 10 | 08.19-08.25 | Refine code. Optionally, implement the supervised version of the algorithm. | (1) Experiment with the HMM and LSW taggers without rules. (2) Experiment with the HMM and LSW taggers with CG. |
| Week 11 | 08.26-09.01 | Make tests, check the code and documentation. Optionally, study further possible improvements. | (1) Re-run the experiments to check code and data. (2) Write the report, 1st version. |
| Week 12 | 09.02-09.08 | Make tests, check the code and documentation. | Documentation |
| Deliverable #3 | A full implementation of the SWPoST in Apertium. | | |

General Progress

2013-09-23: Making tests, checking for bugs, documentation.

2013-08-27: Experiment using CG with the LSW tagger.

2013-08-20: Experiment with the HMM and LSW taggers without rules.

2013-08-09: Experiment with different window settings and text amounts on English.

2013-08-01: Hand-tag the English words that were not automatically mapped from the TnT tagger.

2013-07-26: Map tags between the TnT tagger and the LSW tagger.

2013-07-17: Refine LSW tagger code for efficiency.

2013-07-06: Restructure tagger data using inheritance. Delete the intermediate SW tagger implementation.

2013-06-28: LSW tagger working, with rules.

2013-06-20: LSW tagger working, without rules.

2013-06-11: SW tagger working. Evaluation scripts working.

2013-05-30: Start.

Detailed progress

2013-09-23

1. Making tests, checking for bugs, documentation.

2. Finished the 2nd and 3rd versions of the report.

2013-09-03

1. Finished the 1st version of the report.

2013-08-27

1. Finished experimenting with the HMM and LSW taggers without rules.

2. Managed to use CG together with the LSW tagger.

2013-08-09

1. Finished hand-tagging the English test set.

2. Experiment with different window settings and text amounts on English.

3. Experiment with randomized training text for Spanish.

4. Further experiment with the -1,+1,+2 window.

2013-08-01

1. Check the algorithm and analyse cases.

2. Hand-tag the remaining 20% of ambiguous words that were not mapped automatically.

2013-07-26

1. Study the usage of the TnT tagger.

2. Study the relationship between the tagset of the TnT tagger and that of the Apertium tagger.

3. Develop algorithms to map automatically between the two tagsets; 80% of the ambiguous words are successfully mapped.
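The mapping algorithm itself is not described here. One plausible approach, sketched below in Python, accepts a TnT tag only when exactly one tag in the word's Apertium ambiguity class is compatible with it. Every other word is left for hand-tagging, which gives the same automatic/manual split described above. The compatibility table assumes Penn-Treebank-style TnT tags. It is a small hypothetical example, not the mapping actually used.

```python
# Hypothetical, partial compatibility table: Penn-Treebank-style TnT tag ->
# Apertium-style tags it can correspond to (illustration only).
TNT_TO_APERTIUM = {
    "NN": {"n"}, "NNS": {"n"},
    "VB": {"vblex"}, "VBD": {"vblex"}, "VBZ": {"vblex"},
    "DT": {"det"}, "JJ": {"adj"},
    "IN": {"pr", "cnjsub"},
}


def map_token(tnt_tag, ambiguity_class):
    """Return the unique compatible tag, or None if 0 or >1 tags match."""
    compatible = TNT_TO_APERTIUM.get(tnt_tag, set())
    candidates = [t for t in ambiguity_class if t in compatible]
    return candidates[0] if len(candidates) == 1 else None


def auto_map(tokens):
    """tokens: list of (tnt_tag, ambiguity_class) pairs.

    Returns the mapped tags (None = needs hand-tagging) and the fraction
    of tokens mapped automatically.
    """
    mapped = [map_token(tag, amb) for tag, amb in tokens]
    done = sum(m is not None for m in mapped)
    return mapped, (done / len(tokens) if tokens else 0.0)


if __name__ == "__main__":
    sample = [("NN", ["n", "vblex"]), ("IN", ["pr", "cnjsub"]), ("VBZ", ["n", "vblex"])]
    print(auto_map(sample))
```

In the sample, two of the three tokens are mapped automatically. The IN token stays unresolved because both pr and cnjsub are compatible with it.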

2013-07-15

1. Follow the training and tagging procedure step by step, using a small corpus of several sentences, so that parameters can be calculated by hand.

2. No significant bugs found during the training and tagging procedure.

2013-07-10

1. Managed to get the supervised HMM tagger running.

2. Experiment with windows +1+2 and -1+1+2. The results show that the RIGHT contexts are more important than the left contexts.

2013-07-06

1. Restructure the 'tagger_data' class, using INHERITANCE for HMM and LSW respectively.

2. Make the corresponding changes to the surrounding code, including tagger, tsx_reader, hmm, lswpost, filter-ambiguity-class, apply-new-rules, read-words, etc.

3. DELETE the swpost tagger, which served as an intermediate implementation. It is replaced by the final LSW tagger, which works fine and supports rules.

2013-07-03

1. Update the evaluation scripts to take unknown words into account. The evaluation script can now report recall, precision, and F1-score.

2. Experiment with different amounts of text for training the LSW tagger.

3. Experiment with different window sizes for training the LSW tagger.

4. The experiments show strange results.

5. Check the implementation for potential bugs.
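How eval.py computes these scores is not shown here. The minimal Python sketch below assumes that the tagger output and the hand-tagged reference are aligned token by token, and that an unknown word (output "*") counts as no answer. Under those assumptions, precision is measured over the tokens the tagger answered, recall over all reference tokens, and F1 is their harmonic mean. With no unknown words, precision and recall coincide.

```python
def evaluate(reference, predicted, unknown="*"):
    """Return (precision, recall, f1) for aligned reference/predicted tags.

    A predicted tag equal to `unknown` is treated as "no answer"
    (an assumption of this sketch).
    """
    if len(reference) != len(predicted):
        raise ValueError("reference and prediction must be aligned")
    answered = [(r, p) for r, p in zip(reference, predicted) if p != unknown]
    correct = sum(r == p for r, p in answered)
    precision = correct / len(answered) if answered else 0.0
    recall = correct / len(reference) if reference else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    ref = ["det", "n", "vblex", "n"]
    out = ["det", "n", "n", "*"]
    print(evaluate(ref, out))  # precision 2/3, recall 1/2, F1 4/7
```

Treating unknown words as abstentions is the design choice that makes precision and recall differ. A different convention (e.g. counting them as errors) would make the two equal again.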

2013-06-28

1. Add find_similar_ambiguity_class to the tagging procedure, so that a new ambiguity class no longer crashes the tagger.

2. Bug fix to the normalization factor. This makes the computation correct, but does not improve tagging quality.

3. Replace the SW tagger with the LSW tagger, so the "-w" option now belongs to the LSW tagger.
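The exact similarity criterion used by find_similar_ambiguity_class is not given above. As a hypothetical illustration in Python, one reasonable choice is to pick the known ambiguity class that shares the most tags with the unseen one. On ties, the sketch prefers the smaller class, so an exact match always wins.

```python
def find_similar_ambiguity_class(new_class, known_classes):
    """Pick a known ambiguity class to stand in for an unseen one.

    Criterion (an assumption of this sketch): largest tag overlap first,
    then the smallest class. Raises ValueError if known_classes is empty.
    """
    new = set(new_class)
    return max(known_classes, key=lambda c: (len(new & set(c)), -len(c)))


if __name__ == "__main__":
    known = [frozenset({"n", "vblex"}), frozenset({"n", "adj", "vblex", "pr"}), frozenset({"det"})]
    print(sorted(find_similar_ambiguity_class({"n", "adj", "vblex"}, known)))
```

Here the unseen class {n, adj, vblex} is replaced by {adj, n, pr, vblex}, the only known class that covers all three of its tags.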

2013-06-23

1. Implement a light sliding-window (LSW) tagger. It is based on the SW tagger, with the "parameter reduction" described in the 2005 paper.

2. Add rule support to the LSW tagger. The rules help improve tagging quality.
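The following is a simplified Python sketch of how a light sliding-window tagger with window (-1, +1) can choose a tag, under our reading of the method. The parameters are weights over tag triples (left tag, tag, right tag) rather than over ambiguity-class contexts. Each word gets the tag in its ambiguity class whose weights, summed over all tags allowed by the neighbouring ambiguity classes, are largest. The boundary symbol and the dictionary representation are assumptions; the implementation stores its parameters in a 3-d array.

```python
BOUNDARY = "#"  # hypothetical tag for positions outside the sentence


def tag_sentence(classes, para):
    """Choose one tag per word with a (-1, +1) window.

    classes: list of ambiguity classes (iterables of tags), one per word.
    para: dict mapping (left_tag, tag, right_tag) -> non-negative weight.
    """
    padded = [{BOUNDARY}] + [set(c) for c in classes] + [{BOUNDARY}]
    tags = []
    for i in range(1, len(padded) - 1):
        left, current, right = padded[i - 1], padded[i], padded[i + 1]
        best_tag, best_score = None, -1.0  # start below any possible score
        for t in sorted(current):  # sorted: deterministic tie-breaking
            score = sum(para.get((l, t, r), 0.0) for l in left for r in right)
            if score > best_score:
                best_tag, best_score = t, score
        tags.append(best_tag)
    return tags


if __name__ == "__main__":
    para = {("#", "det", "n"): 0.5, ("#", "det", "vblex"): 0.1,
            ("det", "n", "#"): 0.4, ("det", "vblex", "#"): 0.2}
    print(tag_sentence([{"det"}, {"n", "vblex"}], para))  # ['det', 'n']
```

Starting best_score at -1 rather than 0 guarantees that a tag is chosen even when every candidate scores 0, which matches the 2013-06-13 fix recorded on this page.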

2013-06-21

1. Add a ZERO definition. Because some comparisons involve doubles, we need a relatively precise threshold.

2. Bug fix for the initialization procedure and the iteration formula.

3. Check function style so that the new code is consistent with the existing code.
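The iteration formula is not written out on this page. As a hedged Python sketch, an EM-style re-estimation for tag-triple parameters might look like the code below. Every word window contributes one unit of count, shared among the tag triples allowed by its three ambiguity classes in proportion to their current weights. The uniform initialization, the boundary symbol, and the ZERO value are assumptions, not taken from the implementation. Here ZERO guards a floating-point comparison so that a near-zero denominator cannot produce nan values.

```python
from collections import defaultdict
from itertools import product

ZERO = 1e-10    # assumed threshold below which a double counts as zero
BOUNDARY = "#"  # hypothetical tag for positions outside the sentence


def windows(sentences):
    """Yield (left, current, right) ambiguity classes for every word."""
    for classes in sentences:
        padded = [{BOUNDARY}] + [set(c) for c in classes] + [{BOUNDARY}]
        for i in range(1, len(padded) - 1):
            yield padded[i - 1], padded[i], padded[i + 1]


def init_params(sentences):
    """Share each window's unit count equally among its tag triples."""
    para = defaultdict(float)
    for left, cur, right in windows(sentences):
        triples = list(product(left, cur, right))
        for tr in triples:
            para[tr] += 1.0 / len(triples)
    return para


def iterate(sentences, para):
    """One re-estimation step: share each unit count in proportion to `para`."""
    new = defaultdict(float)
    for left, cur, right in windows(sentences):
        triples = list(product(left, cur, right))
        total = sum(para.get(tr, 0.0) for tr in triples)
        if total <= ZERO:  # skip rather than divide by ~0 and create nan
            continue
        for tr in triples:
            new[tr] += para.get(tr, 0.0) / total
    return new


def train(sentences, iterations=8, para=None):
    """Run `iterations` steps; pass an existing `para` to continue training."""
    if para is None:
        para = init_params(sentences)
    for _ in range(iterations):
        para = iterate(sentences, para)
    return para
```

For the corpus [[{"det"}, {"n", "vblex"}], [{"det"}, {"n"}]], one iteration gives ("det", "n", "#") a weight of 1.75 against 0.25 for ("det", "vblex", "#"). The unambiguous second sentence pulls the first one towards the noun reading, and the total mass stays equal to the number of words.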

2013-06-20

1. Use heap memory for the 3-dimensional parameters. This makes it possible to train successfully without manually changing the stack size setting.

2. Add a retrain() function to the SW tagger. Its logic is the same as that of the HMM tagger's retrain: it appends several iterations based on the current parameters.

3. Bug fix: avoid '-nan' parameters.

4. tagger_data now writes only non-ZERO values. This saves a lot of disk space, reducing the parameter file from 100M to 200k.
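Writing only non-zero values pays off because most tag triples never occur, so a dense array of N×N×N values is mostly zeros. A minimal Python sketch of the idea follows. It uses a hypothetical plain-text layout of one "i j k value" line per non-zero entry; the real tagger_data file format is not described here, and the ZERO value is assumed.

```python
ZERO = 1e-10  # assumed threshold: values at or below it are not written


def to_sparse_lines(para):
    """Serialize {(i, j, k): value} keeping only entries above ZERO."""
    return [f"{i} {j} {k} {v!r}\n"
            for (i, j, k), v in sorted(para.items()) if v > ZERO]


def from_sparse_lines(lines):
    """Inverse of to_sparse_lines; absent triples are implicitly zero."""
    para = {}
    for line in lines:
        i, j, k, v = line.split()
        para[(int(i), int(j), int(k))] = float(v)
    return para


if __name__ == "__main__":
    n_tags = 3
    dense = {(i, j, k): 0.0 for i in range(n_tags) for j in range(n_tags) for k in range(n_tags)}
    dense[(0, 1, 2)] = 0.25
    dense[(1, 0, 1)] = 3.5
    lines = to_sparse_lines(dense)
    print(len(dense), "dense entries ->", len(lines), "lines written")
```

The demo prints "27 dense entries -> 2 lines written". The saving grows with the cube of the tagset size, which is consistent with the 100M to 200k reduction noted above.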

2013-06-19

1. Add print_para_matrix() for debugging in the SW tagger. This function prints only the non-ZERO parameters in the 3-d matrix.

2. Add support for debug, EOS, and null_flush. This makes the tagger work stably when called by other programs.

2013-06-17

1. Study the morpho_stream class in depth, and make sense of its members and functions.

2. Refine the reading procedure of the SW tagger, so that it is simpler and more stable.

2013-06-13

1. Fix a bug in the tagging procedure, where the initial tag score should be -1 instead of 0.

2013-06-12

1. Add option "-w" for the SW tagger. The "-w" option is not a drop-in replacement for the current HMM tagger, but an extension, so the default tagger for Apertium is still the HMM tagger. If the "-w" option is specified, the SW tagger is used.

2. Add support for detecting the end of morpho_stream.

3. The first working version is OK :)

2013-06-11

1. Fix the bug in the last version, mainly caused by the read() method in 'TSX reader'. The read and write methods in tsx_reader and tagger_data are re-implemented, because they differ from those for the HMM.

2. Implement the compression part of the SW tagger probabilities. These parameters are stored in a 3-d array.

3. Debug the HMM tagger, in order to see how a tagger should work together with the whole pipeline.

2013-06-10

1. Implement a basic version of the SW tagger. It still has some bugs.

2. The training and tagging procedures strictly follow the 2004 paper.

2013-05-30

1. Start the project.