Talk:Automatically trimming a monodix


Implementing automatic trimming in lttoolbox

The simplest method seems to be to first create the analyser in the normal way, then loop through all its states (see transducer.cc:Transducer::closure for a loop example), trying to do the same steps in parallel with the compiled bidix:

```
trim(current_a, current_b):
    for symbol, next_a in analyser.transitions[current_a]:
        found = false
        for s, next_b in bidix.transitions[current_b]:
            if s == symbol:
                trim(next_a, next_b)
                found = true
        if seen tags:
            found = true
        if !found && !current_b.isFinal():
            delete symbol from analyser.transitions[current_a]
        // else: all transitions from this point on will just be carried over unchanged by bidix

trim(analyser.initial, bidix.initial)
```
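The recursion can be tried out on toy data. The following Python sketch is only a model. `FST`, `build_trie`, `trim` and `language` are hypothetical names, not lttoolbox's `Transducer` API. The sketch models a single tape: the analyser's output side matched against the bidix's input side.

It reads "seen tags" as "a tag has already been matched on this path". Under that reading, only the lemma and the first tag have to agree with the bidix.

It also assumes a trie-shaped (unminimised) analyser. In a minimised analyser, one state can be reached together with several different bidix states. Deleting an arc for one of them would then delete it for the others too. A trie has no cycles, so the recursion needs no visited set.

```python
import re
from dataclasses import dataclass, field


@dataclass
class FST:
    # Toy single-tape acceptor: state -> list of (symbol, target) arcs.
    transitions: dict = field(default_factory=dict)
    finals: set = field(default_factory=set)
    initial: int = 0


def tokens(s):
    # "beer<n><sg>" -> ['b', 'e', 'e', 'r', '<n>', '<sg>']
    return re.findall(r"<[^>]+>|.", s)


def is_tag(sym):
    return len(sym) > 2 and sym.startswith("<") and sym.endswith(">")


def build_trie(entries):
    # Unminimised FST: every state except the initial one has exactly one incoming arc.
    fst, next_id = FST(), 1
    for entry in entries:
        state = fst.initial
        for sym in tokens(entry):
            arcs = fst.transitions.setdefault(state, [])
            nxt = next((t for s, t in arcs if s == sym), None)
            if nxt is None:
                nxt, next_id = next_id, next_id + 1
                arcs.append((sym, nxt))
            state = nxt
        fst.finals.add(state)
    return fst


def trim(analyser, bidix, a, b, seen_tags=False):
    kept = []
    for symbol, next_a in analyser.transitions.get(a, []):
        found = False
        for s, next_b in bidix.transitions.get(b, []):
            if s == symbol:
                trim(analyser, bidix, next_a, next_b, seen_tags or is_tag(symbol))
                found = True
        if seen_tags:
            found = True  # a tag was already matched on this path: keep the rest
        if found or b in bidix.finals:
            kept.append((symbol, next_a))  # unmatched arcs after a bidix final are carried over
        # otherwise the arc is dropped and the subtree below it becomes unreachable
    analyser.transitions[a] = kept


def language(fst):
    out = set()

    def walk(state, prefix):
        if state in fst.finals:
            out.add(prefix)
        for sym, nxt in fst.transitions.get(state, []):
            walk(nxt, prefix + sym)

    walk(fst.initial, "")
    return out


analyser = build_trie(["cake<n><sg>", "cake<n><pl>", "beer<n><sg>", "beer<n><pl>", "beer<vblex><inf>"])
bidix = build_trie(["beer<n>", "wine<n>"])
trim(analyser, bidix, analyser.initial, bidix.initial)
print(sorted(language(analyser)))  # ['beer<n><pl>', 'beer<n><sg>']
```

Dropped arcs can leave dead branches behind. For example, with `beef<n>` against `beer<n>`, a non-final "bee" branch survives if nothing else continues from it. A real implementation would remove states that cannot reach a final state afterwards.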

Trimming while reading the XML file might have lower memory usage, but seems like more work, since pardefs are read before we get to an "initial" state.

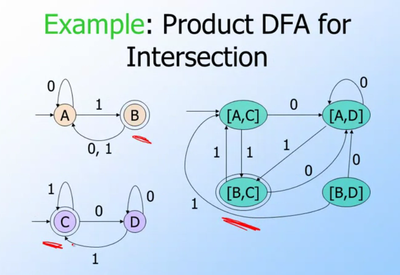

A slightly different approach is to create the product automaton for intersection, marking as final only the state pairs where both parts of the pair are final in the original automata. Minimisation should then remove the unreachable state pairs. However, this quickly blows up in memory usage, since it first creates all possible state pairs (the Cartesian product), not just the useful ones.
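The blow-up can be avoided by building only the state pairs that are reachable from the pair of initial states. This is a breadth-first, on-the-fly product, a standard technique; it is not necessarily what the experimental branches do. The sketch assumes deterministic, single-tape automata given as plain dicts, and `intersect_reachable` is a hypothetical name.

For trimming, the bidix side would also have to accept any continuation after an entry (the `.*` in the HFST method discussed further down). Reachable pairs that can never reach a final pair would still need pruning afterwards.

```python
from collections import deque


def intersect_reachable(d1, i1, f1, d2, i2, f2):
    """Product of two DFAs, built lazily from (i1, i2).

    d1/d2 map state -> {symbol: target}; f1/f2 are sets of final states.
    Only pairs reachable from the start pair are created, instead of all
    |Q1| x |Q2| pairs of the full Cartesian product.
    """
    start = (i1, i2)
    delta, finals = {}, set()
    seen, queue = {start}, deque([start])
    while queue:
        pair = queue.popleft()
        p, q = pair
        delta[pair] = {}
        if p in f1 and q in f2:
            finals.add(pair)  # final only if both halves are final
        for sym, p2 in d1.get(p, {}).items():
            q2 = d2.get(q, {}).get(sym)
            if q2 is None:
                continue  # the second automaton has no arc for sym here: no pair is created
            target = (p2, q2)
            delta[pair][sym] = target
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return delta, start, finals


# Analyser accepts "ab" and "ac"; bidix accepts "ab" and "b".
d1, f1 = {0: {"a": 1}, 1: {"b": 2, "c": 3}}, {2, 3}
d2, f2 = {0: {"a": 1, "b": 4}, 1: {"b": 2}}, {2, 4}
delta, start, finals = intersect_reachable(d1, 0, f1, d2, 0, f2)
print(len(delta), finals)  # 3 {(2, 2)}
```

Only 3 pairs are built here, compared with 16 in the full product of the two 4-state automata.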

https://github.com/unhammer/lttoolbox/branches has some experiments; see e.g. the branches product-intersection and df-intersection.

Worse is better

If it's difficult to get a complete lt-trim solution that handles <g> perfectly, a stop-gap might be to distribute a dictionary of the multiwords with the language pair and leave only "simple" words in the monolingual package dictionary. The multiwords would be trimmed to the pair by hand, the simple words would be trimmed with lt-trim, and a new lt-merge command would merge the two compiled dictionaries (as separate sections).

(An lt-merge command might also be helpful when compiling ATT transducers, since that compilation puts anything that looks like punctuation in an "inconditional" section and everything else in a "standard" section.)

#-type multiwords

An idea for implementation: first "preprocess" the bidix so it has the same format as the analyser, i.e. instead of "take# out<vblex>" it has "take<vblex># out".
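On the string level, the preprocessing just moves the trailing tags in front of the `#`. This is a minimal sketch, assuming every bidix entry contains at most one `#` and has all its tags at the end. `analyser_order` is a hypothetical helper, not part of lttoolbox:

```python
import re

# A run of tags at the very end of a string, e.g. "<vblex>" or "<n><sg>".
TRAILING_TAGS = re.compile(r"(?:<[^>]+>)*$")


def analyser_order(entry):
    """Move the tags of a bidix entry in front of its '#' lemma queue."""
    if "#" not in entry:
        return entry  # no lemma queue: bidix and analyser formats already agree
    head, queue = entry.split("#", 1)
    tags = TRAILING_TAGS.search(queue).group(0)
    lemq = queue[: len(queue) - len(tags)]
    return f"{head}{tags}#{lemq}"


for e in ["take# out<vblex>", "take# out<n>", "take# in<vblex>", "take<n>"]:
    print(analyser_order(e))
# take<vblex># out
# take<n># out
# take<vblex># in
# take<n>
```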

Say you have these entries in the bidix:

```
take# out<vblex>
take# out<n>
take# in<vblex>
take<n>
take<vblex>
```

You do a depth-first traversal, and then after reading all of "take" you see t=transition(A, B, ε, #). You remove that transition, and instead add the result of unpretransfer(t), which returns an ε-transition into a new transducer that represents these partial analyses:

```
<vblex># out
<n># out
<vblex># in
```

pseudocode

```
seen = set()
todo = [initial]
while todo:
    src = todo.pop()
    for left, right, trg in transitions[src]:
        if left == '#':
            transitions[src].remove('#')
            transitions[src][epsilon] = unpretransfer(trg)
        else:
            if trg not in seen:
                todo.push(trg)
                seen.insert(trg)
```

```
def unpretransfer(start):
    lemq = Transducer()
    todo = [ ( start, lemq, lemq.initial ) ]
    unmarkfinals = set()
    # Each search state in the stack is a transition in this FST,
    # along with a lemq-FST which is a completely linear transducer
    # (i.e. all states have at most one transition),
    # as well as a pointer to the currently "last" state in the lemq.
    while todo:
        src, lemq, lemq_last = todo.pop()
        for left, right, trg in self.transitions[src]:
            if not self.isTag(left):
                # We're reading a lemq; append it to the current one:
                lemq_trg = lemq.newState()
                lemq.linkStates(lemq_last, left, right, lemq_trg)
                todo.push(( trg, lemq, lemq_trg ))
            else:
                if lemq_last:
                    # This is the first <tag>; attach it to the start, and finalise lemq:
                    self.linkStates(start, left, right, trg)
                    lemq.finals.insert(lemq_last)
                todo.push(( trg, lemq, NULL ))
                if trg in self.finals:
                    unmarkfinals.insert(trg)
                    self.insertTransducer(trg, lemq)
    # TODO: how does this interact with appendDotStar?
    for state in unmarkfinals:
        self.finals.remove(state)
```
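For experimenting, here is a small runnable Python model of the same rewrite on a trie-shaped bidix. `Trie`, `unpretransfer` and `rewrite_hashes` here are hypothetical, not lttoolbox's `Transducer` API.

Unlike the pseudocode above, it does not thread a linear lemq through the search. Instead, it enumerates every path below each `#` arc and re-attaches each one as `<tags># lemq`. That is only affordable on a tree. It also ignores appendDotStar.

```python
import re
from itertools import count


def tokens(s):
    # "take# out<vblex>" -> ['t', 'a', 'k', 'e', '#', ' ', 'o', 'u', 't', '<vblex>']
    return re.findall(r"<[^>]+>|.", s)


def is_tag(sym):
    return len(sym) > 2 and sym.startswith("<") and sym.endswith(">")


class Trie:
    """Toy trie-shaped acceptor: state -> {symbol: target}, plus final states."""

    def __init__(self, entries=()):
        self.arcs = {0: {}}
        self.finals = set()
        self._ids = count(1)
        for entry in entries:
            self.add(tokens(entry))

    def add(self, symbols, start=0):
        state = start
        for sym in symbols:
            if sym not in self.arcs[state]:
                new = next(self._ids)
                self.arcs[new] = {}
                self.arcs[state][sym] = new
            state = self.arcs[state][sym]
        self.finals.add(state)

    def paths(self, state):
        """Every symbol sequence leading from state to a final state."""
        out = [[]] if state in self.finals else []
        for sym, nxt in self.arcs[state].items():
            out += [[sym] + rest for rest in self.paths(nxt)]
        return out

    def language(self):
        return {"".join(p) for p in self.paths(0)}


def unpretransfer(trie, start, hash_target):
    # Drop the '#' arc, then re-attach each path below it as <tags># lemq.
    del trie.arcs[start]["#"]
    for path in trie.paths(hash_target):
        first_tag = next((i for i, s in enumerate(path) if is_tag(s)), len(path))
        lemq, tags = path[:first_tag], path[first_tag:]
        trie.add(tags + ["#"] + lemq, start)


def rewrite_hashes(trie):
    # Depth-first search that stops at '#' arcs. Collect them first and rewrite
    # afterwards, so the '#' arcs created by unpretransfer are not rewritten again.
    hash_arcs, todo = [], [0]
    while todo:
        src = todo.pop()
        for sym, trg in trie.arcs[src].items():
            if sym == "#":
                hash_arcs.append((src, trg))
            else:
                todo.append(trg)
    for src, trg in hash_arcs:
        unpretransfer(trie, src, trg)


bidix = Trie(["take# out<vblex>", "take# out<n>", "take# in<vblex>", "take<n>", "take<vblex>"])
rewrite_hashes(bidix)
print(sorted(bidix.language()))
# ['take<n>', 'take<n># out', 'take<vblex>', 'take<vblex># in', 'take<vblex># out']
```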

Compounds vs trimming in sme

The sme.lexc can't be trimmed using the simple HFST trick, due to compounds.

Say you have cake n sg, cake n pl, beer n pl and beer n sg in the monodix, while the bidix has beer n and wine n. Without compounding, the HFST method is to intersect (cake|beer) n (sg|pl) with (beer|wine) n .* to get beer n (sg|pl).
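On this finite example, intersecting with `(beer|wine) n .*` amounts to keeping the monodix analyses that begin with a bidix entry. Here is a sketch that uses Python regular expressions as a stand-in for HFST. Writing the analyses as space-separated strings is only an illustration; HFST works on symbol sequences.

```python
import re

monodix = ["cake n sg", "cake n pl", "beer n sg", "beer n pl"]
bidix = re.compile(r"(beer|wine) n .*")  # bidix entries followed by anything

trimmed = [w for w in monodix if bidix.fullmatch(w)]
print(trimmed)  # ['beer n sg', 'beer n pl']
```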

But HFST represents compounding as a transition from the end of the singular noun to the beginning of the (noun) transducer, so a compounding HFST actually looks like

- ((cake|beer) n sg)*(cake|beer) n (sg|pl)

The intersection of this with

- (beer|wine) n .*

is

- beer n (sg|pl) | beer n sg ((cake|beer) n sg)*(cake|beer) n (sg|pl)

when it should have been

- (beer n sg)*beer n (sg|pl)
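The overgeneration can be checked by brute force. This sketch again uses Python regexes as a stand-in for HFST, with compound parts joined by spaces. It enumerates all analyses of up to three parts, applies the naive intersection, and compares the result with what trimming should give:

```python
import re
from itertools import product

parts = ["cake n sg", "cake n pl", "beer n sg", "beer n pl"]
monodix = re.compile(r"((cake|beer) n sg )*(cake|beer) n (sg|pl)")  # with compounding
bidix = re.compile(r"(beer|wine) n .*")
wanted = re.compile(r"(beer n sg )*beer n (sg|pl)")  # every part must be in the bidix

# All analyses the compounding monodix accepts with one to three parts.
analyses = [
    " ".join(combo)
    for k in range(1, 4)
    for combo in product(parts, repeat=k)
    if monodix.fullmatch(" ".join(combo))
]

naive = {a for a in analyses if bidix.fullmatch(a)}
correct = {a for a in analyses if wanted.fullmatch(a)}
print("beer n sg cake n pl" in naive, "beer n sg cake n pl" in correct)  # True False
```

The naive intersection keeps "beer n sg cake n pl" even though cake is not in the bidix, because only the first compound part is checked.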

Lttoolbox doesn't represent compounding by extra circular transitions, but instead by a special restart symbol interpreted while analysing.

When we have lt-trim, we will be able to make it understand compounds, e.g. by restarting the bidix matching on +.
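Here is a string-level model of "restarting on +". It checks each `+`-separated part of an analysis against the bidix separately, as if the bidix traversal were reset to its initial state at every `+`.

This assumes compound parts are joined with `+` and that bidix entries end in a tag, so that `beer<n>` cannot match a longer lemma such as `beers<n>`. `keep_analysis` is a hypothetical helper; lt-trim itself would do this on the transducers, not on strings:

```python
def keep_analysis(analysis, bidix_entries):
    """Keep a (possibly compound) analysis only if every '+'-separated part
    starts with some bidix entry, i.e. the bidix match restarts at each '+'."""
    return all(
        any(part.startswith(entry) for entry in bidix_entries)
        for part in analysis.split("+")
    )


bidix_entries = ["beer<n>", "wine<n>"]
for a in ["beer<n><sg>+beer<n><pl>", "beer<n><sg>+cake<n><pl>", "cake<n><sg>"]:
    print(a, keep_analysis(a, bidix_entries))
# beer<n><sg>+beer<n><pl> True
# beer<n><sg>+cake<n><pl> False
# cake<n><sg> False
```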