User:Gang Chen/GSoC 2013 Progress


GSoC 2013

I'm working with Apertium for GSoC 2013 on the project "Sliding Window Part of Speech Tagger for Apertium".

My proposal is here: Proposal

SVN repo

1. The tagger: https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium

2. The en-es language pair (for experiments): https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es

3. The es-ca language pair (for experiments): https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-es-ca

Usage

Command line

HMM tagger usage:

    apertium-tagger -t 8 es.dic es.crp apertium-en-es.es.tsx es-en.prob
    apertium-tagger -g es-en.prob.new

SW tagger usage:

    apertium-tagger -w -t 8 es.dic es.crp apertium-en-es.es.tsx es-en.prob
    apertium-tagger -w -g es-en.prob.new

Tagging Experiments

1. Language

Spanish (es), from the en-es language pair: https://svn.code.sf.net/p/apertium/svn/branches/apertium-swpost/apertium-en-es

2. Test set

The 1200+ hand-tagged lines in the en-es package.

25% of the words are ambiguous.

3. Training data

Europarl Spanish, 2 million lines.

4. Evaluation scripts

The en-es package contains two small scripts: apertium-en-es/es-tagger-data/extract_word_pos.py and apertium-en-es/es-tagger-data/eval_fscore.sh

The scripts are rather simple: they list the words and their POS tags in two columns for both the reference file and the test file, and count how many lines differ between the two. Still, I think the trend they reveal is informative.
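The comparison these scripts make can be sketched in a few lines of C++. This is a hypothetical illustration, not the code in extract_word_pos.py or eval_fscore.sh: it assumes both files have already been reduced to aligned "word<TAB>tag" lines, one token per line in the same order, and the function name line_accuracy is made up for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Compare two aligned lists of "word<TAB>tag" lines and return the
// fraction of lines that are identical. Assumes both lists come from the
// same tokenisation, so line i of the reference matches line i of the test.
double line_accuracy(const std::vector<std::string>& reference,
                     const std::vector<std::string>& test) {
  assert(reference.size() == test.size());  // files must be aligned
  if (reference.empty()) {
    return 0.0;
  }
  std::size_t different = 0;
  for (std::size_t i = 0; i < reference.size(); ++i) {
    if (reference[i] != test[i]) {
      ++different;  // same position, different word or tag
    }
  }
  return 1.0 - static_cast<double>(different) / reference.size();
}
```

Because the comparison is purely positional, it only makes sense if both files were tokenised identically; the assert checks only the weakest form of that, equal length.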

5. Running the whole training and evaluation pipeline

To run the whole training and evaluation procedure, please refer to these two scripts: apertium-en-es/step1_preporcess.sh and apertium-en-es/step2_train_tag_eval.sh.

6. F1 scores

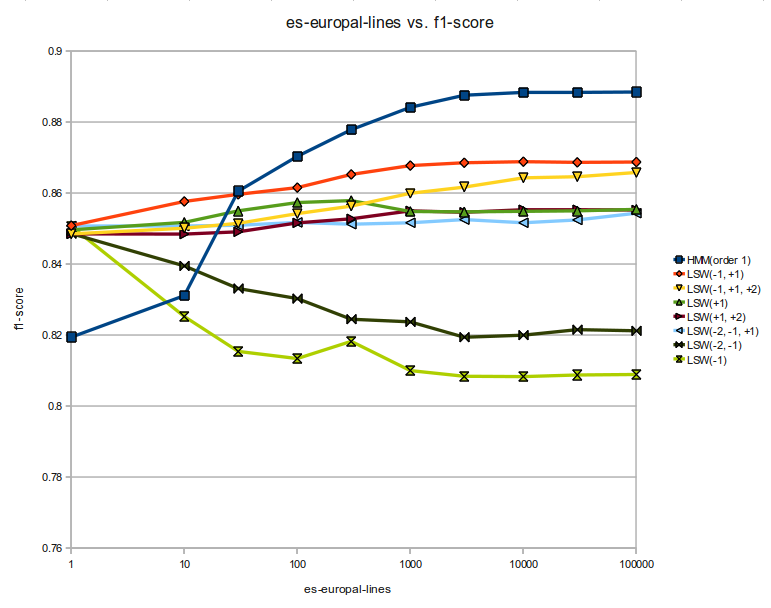

Spanish

| Europarl lines | HMM (order 1) | LSW(-1, +1) | LSW(-1, +1, +2) | LSW(+1) | LSW(+1, +2) | LSW(-2, -1, +1) | LSW(-2, -1) | LSW(-1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.8194 | 0.8508 | 0.848465443901 | 0.849608928326 | 0.848465443901 | 0.8507 | 0.848556922655 | 0.850615194621 |
| 10 | 0.8311 | 0.8576 | 0.850066322097 | 0.85171293967 | 0.848419704524 | 0.8507 | 0.839454786626 | 0.82522984037 |
| 30 | 0.8605 | 0.8596 | 0.851438503408 | 0.854960435439 | 0.849060055802 | 0.8509 | 0.833097013219 | 0.815395874308 |
| 100 | 0.8703 | 0.8615 | 0.854182866029 | 0.857293143667 | 0.851575721539 | 0.8517 | 0.830261171843 | 0.813383341719 |
| 300 | 0.8778 | 0.8652 | 0.856286877373 | 0.857842016192 | 0.852719205964 | 0.8512 | 0.82445227096 | 0.818231715684 |
| 1000 | 0.8841 | 0.8677 | 0.859900288158 | 0.854777477931 | 0.854960435439 | 0.8516 | 0.823720440928 | 0.809998627819 |
| 3000 | 0.8875 | 0.8685 | 0.861638384485 | 0.854685999177 | 0.854548781046 | 0.8525 | 0.81937520011 | 0.808397749623 |
| 10000 | 0.8883 | 0.8688 | 0.864245528976 | 0.854823217308 | 0.855280611078 | 0.8516 | 0.819969812011 | 0.808306270869 |
| 30000 | 0.8883 | 0.8686 | 0.864565704615 | 0.854960435439 | 0.855280611078 | 0.8524 | 0.82152495083 | 0.808763664639 |
| 100000 | 0.8884 | 0.8687 | 0.865754928418 | 0.855372089832 | 0.855189132324 | 0.8543 | 0.821204775191 | 0.80890088277 |

Here is the graph:

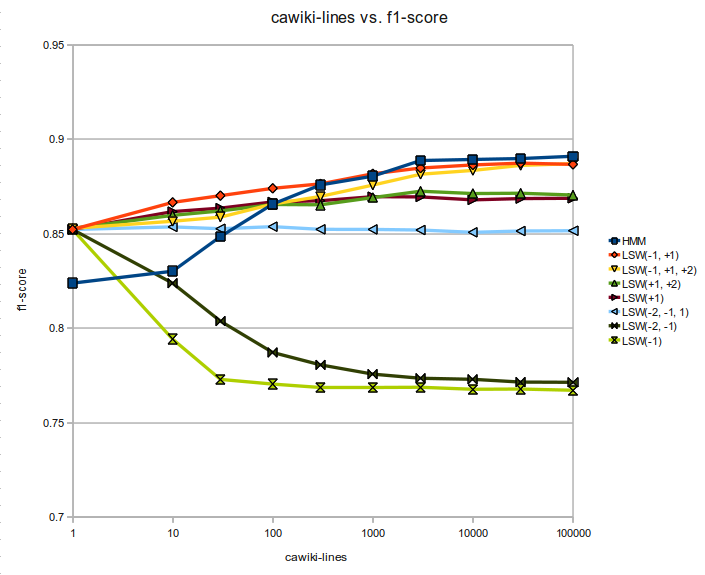

Catalan

| cawiki lines | HMM | LSW(-1, +1) | LSW(-1, +1, +2) | LSW(+1, +2) | LSW(+1) | LSW(-2, -1, +1) | LSW(-2, -1) | LSW(-1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.82379762896 | 0.852186669839 | 0.852821061814 | 0.852821061814 | 0.852186669839 | 0.852186669839 | 0.852186669839 | 0.852186669839 |
| 10 | 0.830101899211 | 0.866539788272 | 0.856548114666 | 0.859680425043 | 0.861662899964 | 0.853574402284 | 0.823757979462 | 0.794139804131 |
| 30 | 0.848419967487 | 0.870108243131 | 0.85872883708 | 0.86201974545 | 0.863486776892 | 0.852583164823 | 0.803695333254 | 0.772848023473 |
| 100 | 0.865627849808 | 0.873993893977 | 0.865627849808 | 0.865429602316 | 0.866777685262 | 0.853693350779 | 0.78708219341 | 0.770350105071 |
| 300 | 0.875778121407 | 0.876333214385 | 0.869711748146 | 0.865271004322 | 0.867412077237 | 0.852265968835 | 0.780540026169 | 0.768565877642 |
| 1000 | 0.880417112724 | 0.881685896673 | 0.87569882241 | 0.869077356171 | 0.869553150153 | 0.852226319337 | 0.775623488363 | 0.768565877642 |
| 3000 | 0.888703857896 | 0.884738908053 | 0.881447999683 | 0.872447563538 | 0.869434201657 | 0.85190912335 | 0.773442765949 | 0.768684826137 |
| 10000 | 0.889258950874 | 0.886364537489 | 0.883470124103 | 0.871218429087 | 0.867887871218 | 0.850679988898 | 0.772887672971 | 0.767614289679 |
| 30000 | 0.889734744855 | 0.887316125451 | 0.886126640498 | 0.871377027081 | 0.868561912692 | 0.85139367987 | 0.771420641529 | 0.767733238175 |
| 100000 | 0.890963879307 | 0.886681733476 | 0.887038578962 | 0.870425439118 | 0.868720510686 | 0.851552277864 | 0.771301693034 | 0.7670988462 |

Here is the graph:

General Progress

2013-06-28: LSW tagger working, with rules.

2013-06-20: LSW tagger working, without rules.

2013-06-11: SW tagger working.

2013-05-30: Start.

Detailed progress

2013-07-08

1. Managed to get the supervised HMM tagger running.

2013-07-06

1. Restructure the 'tagger_data' class, using inheritance for HMM and LSW respectively.

2. Make the corresponding changes to the surrounding code, including tagger, tsx_reader, hmm, lswpost, filter-ambiguity-class, apply-new-rules, read-words, etc.

3. Delete the swpost tagger, which served as an intermediate implementation. It is replaced by the final LSW tagger, which works well and supports rules.
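The restructuring in item 1 can be pictured as a small class hierarchy. This is a simplified sketch, not the actual Apertium headers: the class names TaggerData, TaggerDataHMM and TaggerDataLSW, the members and the parameter_count() method are illustrative assumptions. Data shared by both taggers (the tag set and the ambiguity classes) lives in the base class, and each derived class adds only its own parameters.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Data shared by every tagger: the tag set and the known ambiguity classes.
class TaggerData {
 public:
  virtual ~TaggerData() = default;
  std::vector<std::string> tags;                 // tag index -> tag name
  std::vector<std::set<int>> ambiguity_classes;  // each class: set of tag indices
  // Number of parameters the concrete tagger needs (hypothetical helper).
  virtual std::size_t parameter_count() const = 0;
};

// HMM: transition matrix a[N][N] and emission matrix b[N][M]
// (N = number of tags, M = number of ambiguity classes), stored flattened.
class TaggerDataHMM : public TaggerData {
 public:
  std::vector<double> a, b;
  std::size_t parameter_count() const override {
    std::size_t n = tags.size(), m = ambiguity_classes.size();
    return n * n + n * m;
  }
};

// LSW with window (-1, +1): one parameter per tag triple (t-1, t, t+1).
class TaggerDataLSW : public TaggerData {
 public:
  std::vector<double> para;  // flattened N x N x N array
  std::size_t parameter_count() const override {
    std::size_t n = tags.size();
    return n * n * n;
  }
};
```

Code that only needs the tag set or the ambiguity classes can take a TaggerData reference and work with either tagger.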

2013-07-03

1. Update the evaluation scripts to take unknown words into account. The evaluation script can now report recall, precision and F1 score.

2. Experiment with different amounts of training text for the LSW tagger.

3. Experiment with different window sizes for the LSW tagger.

4. The experiments show strange results.

5. Check the implementation for potential bugs.
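One plausible way unknown words make precision and recall differ is sketched below in C++. The counting convention is an assumption, not necessarily what eval_fscore.sh does: a token the tagger leaves untagged (here, an empty string) counts against recall but not against precision. The names Scores and score are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

struct Scores {
  double precision;
  double recall;
  double f1;
};

// reference[i] is the correct tag of token i; test[i] is the tag the tagger
// assigned, or an empty string if the word was unknown and left untagged.
Scores score(const std::vector<std::string>& reference,
             const std::vector<std::string>& test) {
  assert(reference.size() == test.size());
  std::size_t tagged = 0, correct = 0;
  for (std::size_t i = 0; i < reference.size(); ++i) {
    if (test[i].empty()) continue;  // unknown word: no tag proposed
    ++tagged;
    if (test[i] == reference[i]) ++correct;
  }
  Scores s{0.0, 0.0, 0.0};
  if (tagged > 0) s.precision = static_cast<double>(correct) / tagged;
  if (!reference.empty())
    s.recall = static_cast<double>(correct) / reference.size();
  if (s.precision + s.recall > 0.0)  // harmonic mean of P and R
    s.f1 = 2.0 * s.precision * s.recall / (s.precision + s.recall);
  return s;
}
```

Under this convention, leaving a word untagged lowers recall but not precision, while assigning a wrong tag lowers both.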

2013-06-28

1. Add find_similar_ambiguity_class to the tagging procedure, so that an ambiguity class unseen in training no longer crashes the tagger.

2. Fix a bug in the normalization factor. This makes the computation correct, but brings no improvement in tagging quality.

3. Replace the SW tagger with the LSW tagger, so the "-w" option now selects the LSW tagger.
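When the tagger meets an ambiguity class that never occurred in training, it needs a known class to fall back on. The sketch below picks the known class that shares the most tags with the unseen one, breaking ties in favour of the smaller class. This similarity rule is an assumption for illustration; the actual find_similar_ambiguity_class may use a different criterion, and find_similar_class is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

using AmbiguityClass = std::set<int>;  // a set of tag indices

// Return the index of the known class sharing the most tags with `unseen`;
// on ties, prefer the class with fewer tags. Returns -1 only if `known`
// is empty.
int find_similar_class(const std::vector<AmbiguityClass>& known,
                       const AmbiguityClass& unseen) {
  int best = -1;
  std::size_t best_overlap = 0, best_size = 0;
  for (std::size_t i = 0; i < known.size(); ++i) {
    std::size_t overlap = 0;
    for (int tag : unseen) {
      overlap += known[i].count(tag);  // 1 if the tag is in class i
    }
    bool better = best == -1 || overlap > best_overlap ||
                  (overlap == best_overlap && known[i].size() < best_size);
    if (better) {
      best = static_cast<int>(i);
      best_overlap = overlap;
      best_size = known[i].size();
    }
  }
  return best;
}
```

Preferring the smaller class on ties keeps the fallback from introducing more extra candidate tags than necessary.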

2013-06-23

1. Implement a light sliding-window (LSW) tagger. It is based on the SW tagger, with the "parameter reduction" described in the 2005 paper.

2. Add rule support for the LSW tagger. The rules help improve tagging quality.
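A sketch of how an LSW tagger with window (-1, +1) can choose a tag, under the assumption that its parameters are indexed by tag triples rather than by the ambiguity classes of the neighbouring words. Each candidate tag t of the current word is scored by summing para[t-1][t][t+1] over every tag t-1 of the left word's ambiguity class and every tag t+1 of the right word's class, and the highest score wins. The names LswParams and lsw_choose_tag are hypothetical, and rules are not modelled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Flattened N x N x N parameter array: para[(left * n + tag) * n + right].
struct LswParams {
  std::size_t n;  // number of tags
  std::vector<double> para;
  double at(int left, int tag, int right) const {
    std::size_t i = (static_cast<std::size_t>(left) * n +
                     static_cast<std::size_t>(tag)) * n +
                    static_cast<std::size_t>(right);
    return para[i];
  }
};

// Choose a tag for the current word from the ambiguity classes (lists of
// tag indices) of the left neighbour, the current word and the right
// neighbour. Scores are sums of non-negative parameters, so starting
// best_score at -1 (not 0) guarantees a tag is chosen even if all scores are 0.
int lsw_choose_tag(const LswParams& p, const std::vector<int>& left,
                   const std::vector<int>& current,
                   const std::vector<int>& right) {
  assert(!current.empty());
  double best_score = -1.0;
  int best_tag = -1;
  for (int tag : current) {
    double score = 0.0;
    for (int l : left)
      for (int r : right)
        score += p.at(l, tag, r);
    if (score > best_score) {
      best_score = score;
      best_tag = tag;
    }
  }
  return best_tag;
}
```

With N tags this needs N^3 parameters, whereas conditioning on the neighbours' ambiguity classes needs on the order of M^2 * N for M ambiguity classes; since M is usually much larger than N, this is where the saving comes from in the sketch.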

2013-06-21

1. Add a ZERO define. Because the code compares double values, it needs a small threshold below which a value is treated as zero.

2. Fix bugs in the initialization procedure and the iteration formula.

3. Check the function style so that the new code is consistent with the existing code.
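A sketch of such a threshold. The value 1e-10 is an assumption (the log does not state the actual value), and is_zero is a hypothetical helper name. The test case 0.1 + 0.2 - 0.3, which is not exactly 0.0 in IEEE double arithmetic, shows why an exact comparison is not enough.

```cpp
#include <cassert>
#include <cmath>

// Threshold below which a double is treated as zero.
// The value 1e-10 is an assumption for this sketch.
#define ZERO 1e-10

// True if x is within ZERO of 0.0, regardless of sign.
inline bool is_zero(double x) { return std::fabs(x) < ZERO; }
```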

2013-06-20

1. Use heap space for the 3-dimensional parameters. This makes it possible to train without manually increasing the stack size.

2. Add a retrain() function for the SW tagger. Its logic is the same as that of the HMM tagger's retrain: it appends several iterations, starting from the current parameters.

3. Bugfix: avoid '-nan' parameters.

4. tagger_data now writes only non-ZERO values. This saves a lot of disk space, reducing the parameter file from 100 MB to 200 KB.
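Items 1, 3 and 4 can be illustrated together. The sketch keeps the N x N x N parameters in one heap-allocated std::vector instead of a large stack array, normalises a slice without producing NaN when its total is zero, and writes only entries above a threshold as "i j k value" text records. The class name Para3D, the threshold of 1e-10 and the text format are assumptions; this is not necessarily the format tagger_data uses.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <vector>

// N x N x N parameter array stored on the heap, so its size is not
// limited by the stack.
class Para3D {
 public:
  explicit Para3D(std::size_t n) : n_(n), data_(n * n * n, 0.0) {}
  double& operator()(std::size_t i, std::size_t j, std::size_t k) {
    return data_[(i * n_ + j) * n_ + k];
  }
  double operator()(std::size_t i, std::size_t j, std::size_t k) const {
    return data_[(i * n_ + j) * n_ + k];
  }

  // Normalise slice (i, j, *) to sum to 1. An all-zero slice is left as is,
  // because dividing by a zero total would produce NaN values.
  void normalise_slice(std::size_t i, std::size_t j, double zero = 1e-10) {
    double total = 0.0;
    for (std::size_t k = 0; k < n_; ++k) total += (*this)(i, j, k);
    if (std::fabs(total) < zero) return;
    for (std::size_t k = 0; k < n_; ++k) (*this)(i, j, k) /= total;
  }

  // Write only non-zero entries, one "i j k value" line each.
  // Returns the number of records written.
  std::size_t write_sparse(std::ostream& out, double zero = 1e-10) const {
    std::size_t written = 0;
    for (std::size_t i = 0; i < n_; ++i)
      for (std::size_t j = 0; j < n_; ++j)
        for (std::size_t k = 0; k < n_; ++k) {
          double v = (*this)(i, j, k);
          if (std::fabs(v) >= zero) {
            out << i << ' ' << j << ' ' << k << ' ' << v << '\n';
            ++written;
          }
        }
    return written;
  }

 private:
  std::size_t n_;
  std::vector<double> data_;
};
```

Since most tag triples never occur in training, the sparse file stays small even when the dense array is large.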

2013-06-19

1. Add print_para_matrix() for debugging the SW tagger. This function prints only the non-ZERO parameters in the 3-d matrix.

2. Add support for debug, EOS and null_flush. This makes the tagger work stably when called by other programs.

2013-06-17

1. Study the morpho_stream class in depth to understand its members and functions.

2. Refine the reading procedure of the SW tagger, so that it is simpler and more stable.

2013-06-13

1. Fix a bug in the tagging procedure: the initial tag score should be -1 instead of 0.

2013-06-12

1. Add the option "-w" for the SW tagger. The "-w" option is not a drop-in replacement for the current HMM tagger but an extension, so the default tagger in Apertium is still the HMM tagger. If "-w" is specified, the SW tagger is used.

2. Add support for detecting the end of the morpho_stream.

3. The first working version is done :)
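The behaviour described in item 1, with the HMM tagger as the default and "-w" switching to the sliding-window tagger, amounts to a simple dispatch on the options. The sketch is hypothetical: choose_tagger and TaggerKind are invented names, and it does not reproduce apertium-tagger's real option parsing.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class TaggerKind { HMM, SW };

// Pick the tagger from the command-line options: HMM by default,
// the sliding-window tagger only when "-w" is present.
TaggerKind choose_tagger(const std::vector<std::string>& args) {
  for (const std::string& arg : args) {
    if (arg == "-w") return TaggerKind::SW;
  }
  return TaggerKind::HMM;
}
```

Because the HMM path is taken whenever "-w" is absent, existing pipelines that call the tagger without the new flag behave exactly as before.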

2013-06-11

1. Fix the bug in the last version, which was mainly caused by the read() method in the TSX reader. The read and write methods in tsx_reader and tagger_data are re-implemented, because they differ from those used for the HMM.

2. Implement the compression part of the SW tagger probabilities. These parameters are stored in a 3-d array.

3. Do some debugging on the HMM tagger, in order to see how a tagger should work together with the whole pipeline.

2013-06-10

1. Implement a basic version of the SW tagger. It still has bugs.

2. The training and tagging procedures strictly follow the 2004 paper.
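For comparison with the LSW tagger, a basic SW tagger with a (-1, +1) window conditions on the ambiguity classes of the neighbouring words: for each word it picks the tag t from the word's own ambiguity class with the highest parameter for (left class, t, right class). This is a simplified reading of the 2004 approach, not the tagger's code; the map-based storage and the names SwParams and sw_choose_tag are assumptions, and training is not shown.

```cpp
#include <cassert>
#include <map>
#include <tuple>
#include <vector>

// Parameters indexed by (left ambiguity class, tag, right ambiguity class);
// missing entries count as 0.
using SwParams = std::map<std::tuple<int, int, int>, double>;

// Return the tag in `current_tags` with the highest parameter for the
// context (left_class, right_class), or -1 if `current_tags` is empty.
int sw_choose_tag(const SwParams& para, int left_class,
                  const std::vector<int>& current_tags, int right_class) {
  double best_score = -1.0;
  int best_tag = -1;
  for (int tag : current_tags) {
    auto it = para.find(std::make_tuple(left_class, tag, right_class));
    double score = it == para.end() ? 0.0 : it->second;
    if (score > best_score) {
      best_score = score;
      best_tag = tag;
    }
  }
  return best_tag;
}
```

No summation over neighbouring tags is needed here, but the table holds one entry per (class, tag, class) triple, which can be far more entries than a tag-triple table.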

2013-05-30

1. Start the project.