Apertium going SOA

UPDATE: This project has moved from branches/gsoc2009/deadbeef/ to trunk/; its sources are available here: https://apertium.svn.sourceforge.net/svnroot/apertium/trunk/apertium-service/

A quick web interface to a working prototype of the service is available here: http://www.neuralnoise.com/ApertiumWeb2/


Introduction

The aim of this project is to design and implement a service-oriented architecture for Apertium.

Currently, translating a large corpus of documents means creating many Apertium processes, each of which must load the required transducers, grammars, etc.; this wastes resources and therefore limits scalability.

One solution to this problem is to implement an Apertium Service that does not need to reload all the resources for every translation task. In addition, such a service would be able to handle multiple requests at the same time (useful, for example, in a Web 2.0-oriented environment), would improve scalability, and could easily be included in existing business processes and integrated into existing IT infrastructures with minimal effort.
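The core idea, loading each language pair's resources once and reusing them across requests, can be sketched in Python 3 as a small thread-safe cache. This is a minimal sketch, not the service's actual code: `ResourceCache` and the loader function are hypothetical stand-ins for whatever loads a pair's transducers and grammars.

```python
import threading


class ResourceCache:
    """Hypothetical sketch: load each language pair's resources once, then share them."""

    def __init__(self, loader):
        self._loader = loader  # hypothetical callable: pair name -> loaded resources
        self._resources = {}
        self._lock = threading.Lock()  # several request threads may ask at once

    def get(self, pair):
        with self._lock:
            if pair not in self._resources:
                # The expensive step (transducers, grammars, ...) runs only on first use.
                self._resources[pair] = self._loader(pair)
            return self._resources[pair]


loads = []


def fake_loader(pair):
    """Stand-in loader that records how often it is called."""
    loads.append(pair)
    return {"pair": pair}


cache = ResourceCache(fake_loader)
for _ in range(100):  # 100 requests for the same pair...
    cache.get("en-es")
print(loads)  # ...but only one load: ['en-es']
```

Holding the lock while loading keeps the sketch simple but serializes the first load of different pairs; a real server might prefer one lock per pair.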

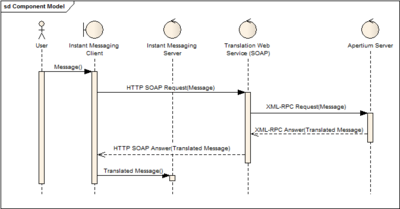

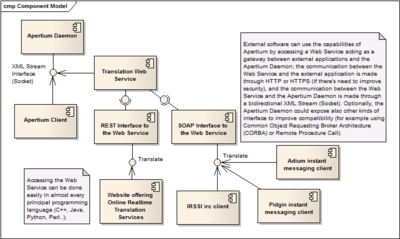

In addition, this project also aims to implement a Web Service acting as a gateway between the Apertium Server and external applications (loading Apertium inside the Web Service itself would make no sense: a Web Service is stateless, so it would not solve the scalability problem). The Web Service will offer both a SOAP and a REST interface, making it easier for external applications and services (for example IM clients, web sites, large IT business processes...) to add translation capabilities without importing the entire Apertium application.
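To illustrate the gateway role, here is a minimal Python 3 sketch of the REST side: it maps request URLs onto calls to the XML-RPC backend and keeps no state of its own. The URL layout (`/pairs`, `/translate?q=...&pair=...`) is a hypothetical choice, not a defined API; in a real deployment `backend` would be an `xmlrpc.client.ServerProxy` pointing at the Apertium Server, and `handle_rest` would sit behind an HTTP server.

```python
from urllib.parse import parse_qs, urlsplit


def handle_rest(path, backend):
    """Turn a REST-style request path into an XML-RPC call on `backend`.

    Returns an (HTTP status, body) pair. The URL layout is hypothetical:
      GET /pairs                          -> backend.listLanguagePairs()
      GET /translate?q=<text>&pair=<pair> -> backend.translate(text, pair)
    """
    url = urlsplit(path)
    params = parse_qs(url.query)  # also percent-decodes the values
    if url.path == "/pairs":
        return 200, backend.listLanguagePairs()
    if url.path == "/translate":
        if "q" not in params or "pair" not in params:
            return 400, "missing 'q' or 'pair' parameter"
        return 200, backend.translate(params["q"][0], params["pair"][0])
    return 404, "not found"


class StubBackend:
    """Offline stand-in for xmlrpc.client.ServerProxy(...), so the sketch runs anywhere."""

    def listLanguagePairs(self):
        return ["en-es", "es-en"]

    def translate(self, text, pair):
        return "[%s] %s" % (pair, text)


backend = StubBackend()
print(handle_rest("/translate?q=Hello%20world&pair=en-es", backend))
```

Because the gateway holds no translation state, any number of gateway instances can front the same Apertium Server.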

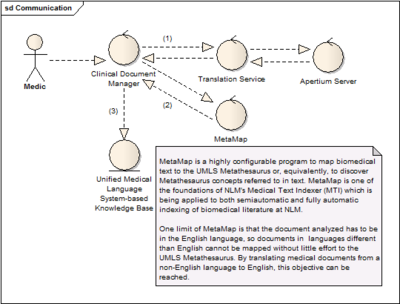

A possible Use Case: a Healthcare organization

In Healthcare Information Systems (HIS), there is a general trend towards IT infrastructures based on a SOA (Service-Oriented Architecture) model, to improve access to and integration of external services. In this use case, I show how a Healthcare Organization in a non-English-speaking country can greatly benefit from integrating a Translation Service, implemented using Apertium, into its IT infrastructure.

MetaMap is an online application that maps text to UMLS Metathesaurus concepts, which is very useful for interoperability among different languages and systems within the biomedical domain. MetaMap Transfer (MMTx) is a Java program that makes MetaMap available to biomedical researchers. Currently, MetaMap only works effectively on English text, which makes it difficult to use the UMLS Metathesaurus to extract concepts from non-English biomedical texts.

A possible solution to this problem is to translate non-English biomedical texts into English, so that MetaMap (and similar text mining tools) can work on them effectively.

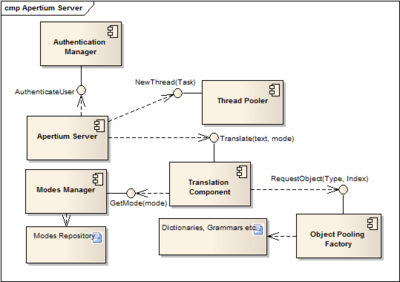

Service's internals

A possible efficient and scalable implementation of an Apertium Server could be composed of the following components:

- Authentication Manager: authenticates users (using basic HTTP authentication); it can be interfaced, for example, with an external OpenLDAP server, a DBMS containing user information, etc.

- Thread Pooler: an implementation of the Thread Pool pattern: http://en.wikipedia.org/wiki/Thread_pool

- Modes Manager: locates mode files and parses them into the corresponding sets of instructions.

- Object Pooling Factory: an implementation of the Object Pool pattern: http://en.wikipedia.org/wiki/Object_pool

- Translating Component: using the instructions contained in a mode file (parsed by the Modes Manager component), translates a given text from one language into another.
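The Thread Pooler and Object Pooling Factory can work together roughly as follows. This Python 3 sketch uses `concurrent.futures.ThreadPoolExecutor` as the thread pool and a queue-backed object pool; `Translator` is a hypothetical placeholder for a fully loaded translation pipeline, and its `translate` method does not actually translate anything.

```python
import queue
from concurrent.futures import ThreadPoolExecutor
from contextlib import contextmanager


class ObjectPool:
    """Object Pool pattern: a fixed set of expensive objects, reused across requests."""

    def __init__(self, factory, size):
        self._free = queue.Queue()
        for _ in range(size):
            self._free.put(factory())  # pay the construction cost up front

    @contextmanager
    def borrowed(self):
        obj = self._free.get()  # blocks while every object is in use
        try:
            yield obj
        finally:
            self._free.put(obj)  # always hand the object back to the pool


class Translator:
    """Hypothetical stand-in for a loaded pipeline (transducers, grammars, ...)."""

    instances = 0

    def __init__(self):
        Translator.instances += 1  # only the pool creates these, from one thread

    def translate(self, text):
        return text.upper()  # placeholder: not a real translation


pool = ObjectPool(Translator, size=4)


def handle_request(text):
    with pool.borrowed() as translator:
        return translator.translate(text)


# Thread pool: 8 worker threads share the 4 pooled translators.
with ThreadPoolExecutor(max_workers=8) as executor:
    results = list(executor.map(handle_request, ["hello"] * 100))

print(Translator.instances, results[:2])  # 4 ['HELLO', 'HELLO']
```

With more worker threads than pooled objects, extra requests simply wait in `borrowed()` instead of triggering new, expensive loads.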

Service's Interface

A possible interface for the Apertium service's functionalities is based on XML-RPC, a remote procedure call protocol which uses XML to encode its calls and HTTP as a transport mechanism. The XML-RPC standard is described in detail here: http://www.xmlrpc.com/spec
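To see concretely what "XML to encode its calls" means, Python's standard `xmlrpc.client` module (Python 3; `xmlrpclib` in Python 2) can serialize a call without sending it. The method name and arguments below simply mirror the `translate` call used in the sessions on this page.

```python
import xmlrpc.client

# Serialize a call: method name plus positional parameters.
request_xml = xmlrpc.client.dumps(("Hello world", "en-es"), methodname="translate")
print(request_xml)

# The server side decodes it back into (params, method name).
params, method = xmlrpc.client.loads(request_xml)

# A response is encoded the same way, flagged with methodresponse=True.
response_xml = xmlrpc.client.dumps(("Hola Mundo",), methodresponse=True)
result, _ = xmlrpc.client.loads(response_xml)
```

On the wire, the request document is the body of an HTTP POST to the server's endpoint, and the response document comes back as the HTTP reply body.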

The list of methods exposed by the Apertium RPC service will probably be similar to the following:

- array<string> GetAvailableModes();

- string Translate(string Message, string modeName, string inputEncoding);
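As a rough illustration of this interface, the Python 3 sketch below exposes the two proposed methods through the standard library's XML-RPC server, made multithreaded with `socketserver.ThreadingMixIn`. The `MODES` table and its identity "translation" are hypothetical placeholders; the sketch accepts `inputEncoding` but ignores it, since the XML-RPC layer already hands the server decoded strings.

```python
import threading
import xmlrpc.client
from socketserver import ThreadingMixIn
from xmlrpc.server import SimpleXMLRPCServer


class ThreadedXMLRPCServer(ThreadingMixIn, SimpleXMLRPCServer):
    """Handle each request in its own thread."""

    daemon_threads = True


# Hypothetical mode table: mode name -> translation function (identity placeholder).
MODES = {"en-es": lambda text: text, "es-en": lambda text: text}


def get_available_modes():
    return sorted(MODES)


def translate(message, mode_name, input_encoding):
    # input_encoding matches the proposed signature but is unused in this sketch.
    if mode_name not in MODES:
        raise ValueError("unknown mode: %s" % mode_name)  # client receives a Fault
    return MODES[mode_name](message)


def start_server(host="127.0.0.1", port=0):
    """Serve on a background thread; port=0 lets the OS pick a free port."""
    server = ThreadedXMLRPCServer((host, port), logRequests=False)
    server.register_function(get_available_modes, "GetAvailableModes")
    server.register_function(translate, "Translate")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_server()
host, port = server.server_address
with xmlrpc.client.ServerProxy("http://%s:%d/RPC2" % (host, port)) as proxy:
    modes = proxy.GetAvailableModes()
    echoed = proxy.Translate("Hello world", "en-es", "UTF-8")
    try:
        proxy.Translate("Hello world", "xx-yy", "UTF-8")
        fault = None
    except xmlrpc.client.Fault as exc:
        fault = exc
server.shutdown()
server.server_close()
```

Exceptions raised inside a registered function are sent back to the caller as XML-RPC faults, so an unknown mode name surfaces on the client as `xmlrpc.client.Fault`.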

TODO: add methods to get the server's current capabilities and load; these would be useful for implementing some kind of load balancing across a cluster of Apertium Servers.
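If each server exposed a load-reporting method, a client (or a front-end dispatcher) could choose the least-loaded server along these lines. `getLoad` is a hypothetical method name, since the TODO leaves that interface open, and the node URLs are made up; picking the minimum reported load is only one simple balancing policy among many. Python 3 is assumed.

```python
import xmlrpc.client


def pick_least_loaded(servers):
    """Return the URL of the server reporting the lowest load.

    `servers` maps URL -> proxy; each proxy must provide a hypothetical getLoad()
    (e.g. number of in-flight requests). Unreachable or failing servers are skipped.
    """
    best_url, best_load = None, None
    for url, proxy in servers.items():
        try:
            load = proxy.getLoad()
        except (OSError, xmlrpc.client.Error):
            continue  # connection refused, HTTP protocol error, Fault, ...
        if best_load is None or load < best_load:
            best_url, best_load = url, load
    if best_url is None:
        raise RuntimeError("no Apertium server is reachable")
    return best_url


class StubProxy:
    """Offline stand-in for xmlrpc.client.ServerProxy; load=None simulates a dead node."""

    def __init__(self, load):
        self._load = load

    def getLoad(self):
        if self._load is None:
            raise ConnectionRefusedError("server down")
        return self._load


servers = {
    "http://node-a:1234/ApertiumServer": StubProxy(7),
    "http://node-b:1234/ApertiumServer": StubProxy(2),
    "http://node-c:1234/ApertiumServer": StubProxy(None),
}
print(pick_least_loaded(servers))  # http://node-b:1234/ApertiumServer
```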

Development status - 22/05/2009

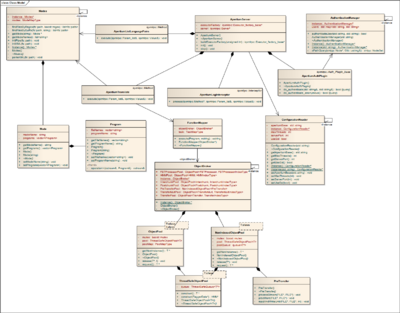

At present, a working prototype of the Apertium Service has been implemented; it reflects the attached UML diagram.

The project is composed of the following classes/components:

- ApertiumServer: its role is to keep the service running and handle requests (thread pooling, handling priorities, etc.); it also maps invoked methods onto the classes that handle them (translate to ApertiumTranslate and listLanguagePairs to ApertiumListLanguagePairs).

- ApertiumTranslate: handles the translation process; it recreates the Apertium pipeline (including formatting) using the information contained in mode files, feeds it with user-provided input, and returns the translated message.

- ApertiumListLanguagePairs: returns a list of available modes.

- Modes: handles mode file parsing (both .mode files and modes.xml).

- FunctionMapper: its main role is to execute a specific task of the Apertium pipeline, given a specific command contained in a mode.

- ObjectBroker: an implementation of the Object Pool pattern; it handles object creation and recycling.
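A mode file describes a translation as a shell pipeline: program invocations joined by `|`. The Python 3.8+ sketch below shows the general idea behind the Modes and FunctionMapper components: split the pipeline into stages, then run each stage through an in-process handler instead of spawning a process. The `FunctionMapper` API, the handlers and the example mode line are hypothetical simplifications, not the project's actual classes or a real mode file.

```python
import shlex


def parse_mode(text):
    """Split a mode-file pipeline into stages, each an argv list.

    shlex keeps quoted paths intact, and a '|' inside quotes is not a separator.
    """
    lexer = shlex.shlex(text, posix=True, punctuation_chars="|")
    lexer.whitespace_split = True  # split on whitespace and on '|' (Python 3.8+)
    stages, current = [], []
    for token in lexer:
        if token == "|":
            stages.append(current)
            current = []
        else:
            current.append(token)
    if current:
        stages.append(current)
    return stages


class FunctionMapper:
    """Hypothetical: maps program names from a mode to in-process callables."""

    def __init__(self):
        self._handlers = {}

    def register(self, program, handler):
        self._handlers[program] = handler  # handler(args, text) -> text

    def run(self, stages, text):
        for program, *args in stages:
            if program not in self._handlers:
                raise LookupError("no in-process handler for %r" % program)
            text = self._handlers[program](args, text)  # output feeds the next stage
        return text


# Hypothetical, simplified mode line (not copied from a real .mode file).
mode = ("apertium-destxt | lt-proc '/usr/share/apertium/en-es.automorf.bin' "
        "| apertium-retxt")
stages = parse_mode(mode)

mapper = FunctionMapper()
mapper.register("apertium-destxt", lambda args, text: text)
mapper.register("lt-proc", lambda args, text: "^" + text + "$")  # fake analysis
mapper.register("apertium-retxt", lambda args, text: text)
print(stages)
print(mapper.run(stages, "hello"))  # ^hello$
```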

At this moment, a working version of the Apertium XML-RPC service is available at http://www.neuralnoise.com:1234/ApertiumServer and it can be easily tested through a simple AJAX interface available at http://www.neuralnoise.com/ApertiumWeb2/

Sample usage: accessing the service from Python

```
pasquale@dell:~$ python
Python 2.5.4 (r254:67916, Feb 17 2009, 20:16:45)
[GCC 4.3.3] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import xmlrpclib
>>> proxy = xmlrpclib.ServerProxy("http://www.neuralnoise.com:1234/ApertiumServer")
>>> proxy.listLanguagePairs()
['en-eo', 'es-en', 'en-es']
>>> proxy.translate("Hello world", "en-es")
'Hola Mundo'
>>>
```

CURRENT ISSUE I'M GOING TO SOLVE ASAP: currently, lttoolbox and Apertium's {de,re}formatters use GNU Flex to generate their lexical scanners. The deployed version of the Apertium Service uses Flex too, but in C++ mode, since I needed multiple lexical scanners running concurrently (i.e. thread-safe scanners). The problem is that Flex, when used in C++ mode, doesn't seem to handle wide chars properly, so I'm temporarily switching (until I find a proper solution) to Boost.Spirit, a parser and lexical scanner generator framework that is already among the service's dependencies (it is part of the Boost libraries, a collection of peer-reviewed, open source libraries that extend the functionality of C++).
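The underlying problem is easy to reproduce outside Flex: a scanner that works on single bytes sees a UTF-8 character such as "ó" as two separate units. The Python 3 sketch below is only an analogy for that failure (it uses neither Flex nor Boost.Spirit): an ASCII-only byte pattern splits the word, while a pattern applied to decoded Unicode text keeps it whole.

```python
import re

text = "Cómo te es?"
raw = text.encode("utf-8")  # 'ó' becomes two bytes: 0xC3 0xB3

# Byte-oriented "scanner": only knows single-byte ASCII letters.
byte_tokens = re.findall(rb"[A-Za-z]+", raw)

# Character-oriented scanner: works on decoded code points; \w is Unicode-aware.
char_tokens = re.findall(r"\w+", text)

print(len(text), len(raw))  # 11 12
print(byte_tokens)          # [b'C', b'mo', b'te', b'es']
print(char_tokens)          # ['Cómo', 'te', 'es']
```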

Development status - 01/06/2009

The charset issue described above has now been fixed; both the input and the output are now UTF-8 encoded:

```
pasquale@dell:~$ python
Python 2.5.4 (r254:67916, Feb 17 2009, 20:16:45)
[GCC 4.3.3] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import xmlrpclib
>>> proxy = xmlrpclib.ServerProxy("http://www.neuralnoise.com:1234/ApertiumServer")
>>> proxy.translate("How are you?", "en-es")
u'C\xf3mo te es?'
>>> print proxy.translate("How are you?", "en-es")
Cómo te es?
>>>
```

The new working prototype of the service can be tested here: http://www.neuralnoise.com/ApertiumWeb2/

Current work focuses on handling Constraint Grammars, which are used by some language pairs but are not included in lttoolbox or apertium.

Development status - 04/06/2009

The Apertium Service can now identify the language of a given sentence; this is done using the algorithm described in the following article:

N-Gram-Based Text Categorization (1994)

by William B. Cavnar, John M. Trenkle

In Proceedings of SDAIR-94, 3rd Annual Symposium on Document Analysis and Information Retrieval

http://www.info.unicaen.fr/~giguet/classif/cavnar_trenkle_ngram.ps
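The core of the Cavnar and Trenkle method is short: build a ranked profile of the most frequent character n-grams (n = 1..5, with words padded by a boundary marker), then pick the language whose profile has the smallest "out-of-place" distance from the text's profile. The Python 3 sketch below follows that description; it is not the service's own implementation, and the tiny training sentences are illustrative only, since useful language profiles need much larger corpora.

```python
import re
from collections import Counter


def ngram_profile(text, max_n=5, top=300):
    """Map each of the `top` most frequent n-grams (n = 1..max_n) to its rank."""
    counts = Counter()
    for word in re.findall(r"[^\W\d_]+", text.lower()):  # letters only
        padded = "_" + word + "_"  # '_' marks word boundaries
        for n in range(1, max_n + 1):
            for i in range(len(padded) - n + 1):
                counts[padded[i:i + n]] += 1
    ranked = [gram for gram, _ in counts.most_common(top)]
    return {gram: rank for rank, gram in enumerate(ranked)}


def out_of_place(doc_profile, lang_profile):
    """Sum of rank differences; n-grams missing from lang_profile get a max penalty."""
    max_penalty = len(lang_profile)
    return sum(
        abs(rank - lang_profile[gram]) if gram in lang_profile else max_penalty
        for gram, rank in doc_profile.items()
    )


def classify(text, lang_profiles):
    """Return the language whose profile is closest to the text's profile."""
    doc_profile = ngram_profile(text)
    return min(lang_profiles, key=lambda lang: out_of_place(doc_profile, lang_profiles[lang]))


# Illustrative only: real profiles are built from large training corpora.
samples = {
    "it": "il governo ha deciso di indagare sui voli di stato del presidente",
    "en": "the government has decided to investigate the state flights of the president",
}
profiles = {lang: ngram_profile(text) for lang, text in samples.items()}
print(classify("Voli di Stato, Berlusconi indagato", profiles))
```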

Sample usage:

```
pasquale@dell:~$ python
Python 2.5.4 (r254:67916, Feb 17 2009, 20:16:45)
[GCC 4.3.3] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import xmlrpclib
>>> proxy = xmlrpclib.ServerProxy("http://localhost:1234/ApertiumServer")
>>> proxy.classify("Voli di Stato, Berlusconi indagato")
'[it]'
>>>
```

Small and Quick Benchmark - 06/06/2009

I wrote the following Python script to quickly benchmark the project's current (still to be optimized) prototype:

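```python
#!/usr/bin/python
# Quick benchmark: time sequential translation requests (Python 2 / xmlrpclib).
import time
import xmlrpclib
from functools import wraps

def timing(func):
    """Decorator that prints how long each call to func takes, in milliseconds."""
    @wraps(func)
    def wrapper(*args):
        t1 = time.time()
        res = func(*args)
        t2 = time.time()
        print '%s took %0.3f ms' % (func.func_name, (t2 - t1) * 1000.0)
        return res
    return wrapper

@timing
def bench():
    proxy = xmlrpclib.ServerProxy("http://localhost:1234/ApertiumServer")
    # range(1000) gives exactly 1000 requests (range(1, 1000) would give only 999)
    for i in range(1000):
        proxy.translate("This is a test for the machine translation program", "en-es")

if __name__ == "__main__":
    bench()
```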

It sends 1000 translation requests to the service, one after another, and prints the total time taken to complete them. Here's the output:

```
pasquale@dell:~/gsoc/apertium-service/bench$ ./bench.py
bench took 39365.654 ms
```

That works out to roughly 40 ms per request. Note that this prototype still lacks some optimizations, which have not been introduced yet in order to keep the development process simpler; with those optimizations in place, I think we can get down to ~25-30 ms per request. In addition, these requests were executed in sequence, without taking advantage of the service's multithreading capabilities.
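To exercise the service's multithreading, the same benchmark could issue requests from several client threads. The Python 3 sketch below (where `xmlrpclib` became `xmlrpc.client`) assumes the same localhost URL as the script above and an arbitrary worker count; it gives each worker thread its own proxy, since one `ServerProxy` wraps a single HTTP connection and should not be shared between threads. The stub proxy only lets the harness run offline and says nothing about real performance.

```python
import threading
import time
import xmlrpc.client
from concurrent.futures import ThreadPoolExecutor

SENTENCE = "This is a test for the machine translation program"


def bench_concurrent(make_proxy, n_requests=1000, workers=8):
    """Send n_requests translations from `workers` threads; return (total ms, ms/request)."""
    local = threading.local()

    def one_request(_):
        if not hasattr(local, "proxy"):
            local.proxy = make_proxy()  # one proxy (HTTP connection) per thread
        local.proxy.translate(SENTENCE, "en-es")

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as executor:
        list(executor.map(one_request, range(n_requests)))  # list() re-raises errors
    total_ms = (time.perf_counter() - start) * 1000.0
    return total_ms, total_ms / n_requests


def make_real_proxy():
    """Proxy for a locally running service (URL assumed, as in the script above)."""
    return xmlrpc.client.ServerProxy("http://localhost:1234/ApertiumServer")


class SleepyStubProxy:
    """Offline stand-in: pretends each translation takes 10 ms."""

    def translate(self, text, pair):
        time.sleep(0.01)
        return text


# Against the real service: total, per_request = bench_concurrent(make_real_proxy)
total, per_request = bench_concurrent(SleepyStubProxy, n_requests=100, workers=10)
print("took %0.3f ms (%0.3f ms/request)" % (total, per_request))
```

Comparing the per-request figure from a concurrent run with the sequential ~40 ms would show how much the server's thread pool helps under parallel load.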