Difference between revisions of "Talk:Automatically trimming a monodix"


==Implementing automatic trimming in lttoolbox==

The current method: compile the analyser in the normal way, then lt-trim loads it and loops through all its states, trying to do the same steps in parallel with the compiled bidix:

<pre>
trim(current_a, current_b):
    for symbol, next_a in analyser.transitions[current_a]:
        found = false
        for s, next_b in bidix.transitions[current_b]:
            if s==symbol:
                trim(next_a, next_b)
                found = true
        if seen tags:
            found = true
        if !found && !current_b.isFinal():
            delete symbol from analyser.transitions[current_a]
        // else: all transitions from this point on will just be carried over unchanged by bidix
trim(analyser.initial, bidix.initial)
</pre>
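For anyone who wants to play with the idea, here is a minimal runnable sketch of the pseudocode above in Python. It is not the lt-trim code: the dict-based automaton layout, the helpers <code>build</code>, <code>accepted</code> and <code>is_tag</code>, the reading of "seen tags" as "a tag symbol was already consumed on this path", and the assumption that the analyser is trie-shaped (no shared states, no cycles) are all made up for illustration.

```python
def is_tag(symbol):
    # Assumption: tags are multi-character symbols such as "<n>".
    return len(symbol) > 1 and symbol.startswith("<") and symbol.endswith(">")


def build(entries):
    """Hypothetical helper: build a trie-shaped acceptor from symbol lists."""
    fst = {"initial": 0, "transitions": {0: []}, "finals": set()}
    for entry in entries:
        state = 0
        for symbol in entry:
            for s, nxt in fst["transitions"][state]:
                if s == symbol:
                    state = nxt
                    break
            else:
                nxt = len(fst["transitions"])
                fst["transitions"][nxt] = []
                fst["transitions"][state].append((symbol, nxt))
                state = nxt
        fst["finals"].add(state)
    return fst


def accepted(fst, state=None, prefix=()):
    """Hypothetical helper: all strings accepted by an acyclic acceptor."""
    if state is None:
        state = fst["initial"]
    out = {"".join(prefix)} if state in fst["finals"] else set()
    for symbol, nxt in fst["transitions"][state]:
        out |= accepted(fst, nxt, prefix + (symbol,))
    return out


def trim(analyser, bidix, current_a, current_b, seen_tags=False):
    kept = []
    for symbol, next_a in analyser["transitions"][current_a]:
        found = False
        for s, next_b in bidix["transitions"][current_b]:
            if s == symbol:
                trim(analyser, bidix, next_a, next_b,
                     seen_tags or is_tag(symbol))
                found = True
        if seen_tags:
            found = True
        # Keep the transition unless the bidix can neither follow it nor stop here.
        if found or current_b in bidix["finals"]:
            kept.append((symbol, next_a))
    analyser["transitions"][current_a] = kept


analyser = build([list(lemma) + ["<n>", number]
                  for lemma in ("cake", "beer")
                  for number in ("<sg>", "<pl>")])
bidix = build([list("beer") + ["<n>"], list("wine") + ["<n>"]])
trim(analyser, bidix, analyser["initial"], bidix["initial"])
print(sorted(accepted(analyser)))  # ['beer<n><pl>', 'beer<n><sg>']
```

Deleting transitions in place like this is only safe here because every analyser state is reached along exactly one path; with shared states a transition dropped in one context could still be needed in another.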


===TODO===

* Would it make things faster if we first clear out the right-sides of the bidix and minimize that?
* remove all those ifdef DEBUG's and wcerr's
* more tests?
* if no objections: git svn dcommit


Revision as of 08:36, 11 February 2014

==Alternate implementation methods==

Trimming while reading the XML file might have lower memory usage (who knows, untested), but seems like more work, since pardefs are read and turned into FSTs before we get to an "initial" state, and are only then attached at the end of regular entries.

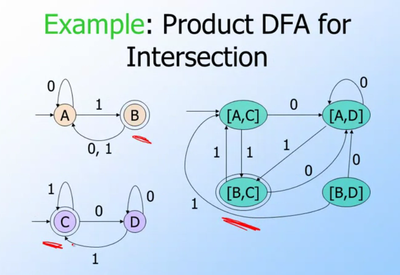

The product automaton for intersection marks as final only those state-pairs where both parts of the pair are final in the original automata. Minimisation removes unreachable state-pairs. However, this quickly blows up in memory usage, since it creates all possible state-pairs first (the Cartesian product), not just the useful ones.

https://github.com/unhammer/lttoolbox/branches has some experiments; see e.g. the branches product-intersection and df-intersection (the latter is the currently used implementation).
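To make the memory argument concrete, here is a small Python sketch that compares the number of state-pairs in the full Cartesian product with the pairs actually reachable when both automata are walked in lockstep, depth first. It is a generic illustration, not the code from either branch; the dict layout and the two toy automata <code>a</code> and <code>b</code> are made up for the example.

```python
from itertools import product

# Toy acceptors (hypothetical): a accepts "ab" and "ac", b accepts "ab" and "ad".
a = {"initial": 0, "finals": {2, 3},
     "transitions": {0: [("a", 1)], 1: [("b", 2), ("c", 3)], 2: [], 3: []}}
b = {"initial": 0, "finals": {2, 3},
     "transitions": {0: [("a", 1)], 1: [("b", 2), ("d", 3)], 2: [], 3: []}}


def full_product_states(x, y):
    """Every state-pair, whether or not it can ever be reached."""
    return set(product(x["transitions"], y["transitions"]))


def reachable_pairs(x, y):
    """Only the state-pairs reachable by following equal symbols in both."""
    start = (x["initial"], y["initial"])
    seen = {start}
    stack = [start]
    while stack:
        state_x, state_y = stack.pop()
        for symbol_x, next_x in x["transitions"][state_x]:
            for symbol_y, next_y in y["transitions"][state_y]:
                pair = (next_x, next_y)
                if symbol_x == symbol_y and pair not in seen:
                    seen.add(pair)
                    stack.append(pair)
    return seen


pairs = reachable_pairs(a, b)
# A pair is final only if both of its parts are final.
final_pairs = {(p, q) for p, q in pairs if p in a["finals"] and q in b["finals"]}

print(len(full_product_states(a, b)))  # 16
print(sorted(pairs))                   # [(0, 0), (1, 1), (2, 2)]
print(sorted(final_pairs))             # [(2, 2)]
```

Even in this tiny case the full product allocates 16 pairs to keep 3; with real dictionaries the gap is what makes the up-front product impractical.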

== Compounds vs trimming in HFST ==

The sme.lexc can't be trimmed using the simple HFST trick, due to compounds.

Say you have cake n sg, cake n pl, beer n pl and beer n sg in monodix, while bidix has beer n and wine n. The HFST method without compounding is to intersect (cake|beer) n (sg|pl) with (beer|wine) n .* to get beer n (sg|pl).
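The non-compounding case can be checked with a few lines of Python. This is only a stand-in: it filters an enumerated list of analyses with a string regex instead of intersecting transducers in HFST, and the variable names are invented for the example.

```python
import re
from itertools import product

# (cake|beer) n (sg|pl), written out as plain strings.
monodix = [f"{lemma} n {number}"
           for lemma, number in product(["cake", "beer"], ["sg", "pl"])]

# (beer|wine) n .* : the bidix entries followed by anything.
bidix_prefix = re.compile(r"(beer|wine) n .*")

# Keeping only full matches plays the role of the intersection.
trimmed = [analysis for analysis in monodix if bidix_prefix.fullmatch(analysis)]
print(trimmed)  # ['beer n sg', 'beer n pl']
```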

But HFST represents compounding as a transition from the end of the singular noun to the beginning of the (noun) transducer, so a compounding HFST actually looks like

- ((cake|beer) n sg)*(cake|beer) n (sg|pl)

The intersection of this with

- (beer|wine) n .*

is

- beer n sg ((cake|beer) n sg)*(cake|beer) n (sg|pl) | beer n (sg|pl)

when it should have been

- (beer n sg)*beer n (sg|pl)
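The over-generation is easy to reproduce by brute force. The sketch below enumerates compounds of at most two parts, applies the same prefix filter, and lists what survives even though it contains a lemma missing from the bidix. As before, this is string filtering standing in for HFST intersection; the space between compound parts and the helper <code>compounds</code> are assumptions made for the example.

```python
import re
from itertools import product

LEMMAS = ["cake", "beer"]


def compounds(max_parts):
    """Strings of ((cake|beer) n sg)*(cake|beer) n (sg|pl), up to max_parts words."""
    out = []
    for n in range(1, max_parts + 1):
        for lemmas in product(LEMMAS, repeat=n):
            for number in ("sg", "pl"):
                parts = [f"{lemma} n sg" for lemma in lemmas[:-1]]
                parts.append(f"{lemmas[-1]} n {number}")
                out.append(" ".join(parts))
    return out


# Naive trimming: only the first part is checked against the bidix.
bidix_prefix = re.compile(r"(beer|wine) n .*")
naive = [s for s in compounds(2) if bidix_prefix.fullmatch(s)]

# What we actually want: every part has to be in the bidix.
wanted = re.compile(r"(beer n sg )*beer n (sg|pl)")
leaked = [s for s in naive if not wanted.fullmatch(s)]

print(leaked)  # ['beer n sg cake n sg', 'beer n sg cake n pl']
```

Only the first part of the compound is constrained by the bidix side, so cake slips through as a non-initial part.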

Lttoolbox doesn't represent compounding by extra circular transitions, but instead by a special restart symbol interpreted while analysing.

lt-trim is able to understand compounds by simply skipping the compound tags.