Wikipedia dumps


Latest revision as of 05:40, 10 April 2019

Wikipedia dumps are useful for quickly getting a corpus. They are also the best corpora for making your language pair useful for Wikipedia's Content Translation tool :-)

You can download them from:

- http://dumps.wikimedia.org/backup-index.html
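This sketch assumes the usual naming scheme on dumps.wikimedia.org, `<lang>wiki/latest/<lang>wiki-latest-pages-articles.xml.bz2`, where `<lang>` is the language code (e.g. `nn` for Nynorsk). Under that assumption, you can build the URL of the latest article dump for a language directly. `dump_url` is a hypothetical helper name. If a file is missing, check the index page above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the URL of the latest "pages-articles" dump
# for a Wikipedia language code (e.g. nn, sv, da).
# Assumes the <lang>wiki/latest/<lang>wiki-latest-pages-articles.xml.bz2 naming scheme.
dump_url() {
  local wiki="${1}wiki"
  printf 'https://dumps.wikimedia.org/%s/latest/%s-latest-pages-articles.xml.bz2\n' "$wiki" "$wiki"
}
```

For example, `wget "$(dump_url nn)"` would fetch the bzip2-compressed XML dump of Nynorsk Wikipedia.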

Tools to turn dumps into plaintext

There are several tools for turning dumps into useful plaintext, e.g.

- Wikipedia Extractor – a Python script that tries to remove all formatting

- wikiextractor (https://github.com/attardi/wikiextractor) – another Python script that removes all formatting (with different options) and puts XML tags around each article, so you can tell where every article begins and ends

- mwdump-to-pandoc (https://gist.github.com/unhammer/3372222878580d1e4c6f) – a shell wrapper around pandoc (http://pandoc.org/); see usage.sh below the script for how to use it

- Calculating_coverage#More_involved_scripts – an ugly shell script that does the job

- wp2txt (http://wp2txt.rubyforge.org/) – a Ruby tool (untested; it is not known whether it still works)
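wikiextractor normally writes each article between a `<doc id="..." url="..." title="...">` line and a `</doc>` line. That is its usual output format, but check the version you run. If you just want running text, for example for frequency lists, the following minimal sketch drops those marker lines and counts articles. The names `strip_doc_tags` and `count_docs` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read wikiextractor-style output on stdin and print only
# the article text, dropping the <doc ...> opening lines and </doc> closing lines.
strip_doc_tags() {
  sed -e '/^<doc[ >]/d' -e '/^<\/doc>$/d'
}

# Hypothetical helper: count articles by counting <doc ...> opening lines on stdin.
count_docs() {
  grep -c '^<doc[ >]'
}
```

Assuming wikiextractor's default `text/` output directory, something like `cat text/*/wiki_* | strip_doc_tags > corpus.txt` would give one plain-text corpus file.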

Content Translation dumps

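There are also dumps of the articles translated with the Content Translation tool, which uses Apertium (and other MT engines) under the hood:

- https://www.mediawiki.org/wiki/Content_translation/Published_translations

To turn a TMX file into a `SOURCE\tMT\tGOLD` tab-separated text file (source text, raw machine translation, and the translator's published version), install xmlstarlet (`sudo apt install xmlstarlet`) and run the following. `~/Nedlastingar` is just the download folder, so adjust the path: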

```shell
zcat ~/Nedlastingar/cx-corpora.nb2nn.text.tmx.gz \
  | xmlstarlet sel -t -m '//tu[tuv/prop/text()="mt"]' \
      -c 'tuv[./prop/text()="source"]/seg/text()' -o $'\t' \
      -c 'tuv[./prop/text()="mt"]/seg/text()' -o $'\t' \
      -c 'tuv[./prop/text()="user"]/seg/text()' -n \
  > nb2nn.tsv
```
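The TSV relies on tabs as column separators and newlines as row ends. A segment that itself contains a tab or a newline will therefore shift the columns. The following sketch is a quick sanity check that reports rows without exactly three fields. `check_tsv` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the line numbers of rows in a SOURCE<TAB>MT<TAB>GOLD
# file that do not have exactly three tab-separated fields, then a total count.
check_tsv() {
  awk -F'\t' '
    NF != 3 { print "line " NR ": " NF " fields"; bad++ }
    END     { print (bad + 0) " malformed rows" }
  ' "$1"
}
```

Running `check_tsv nb2nn.tsv` should end with `0 malformed rows` if the extraction lined up.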

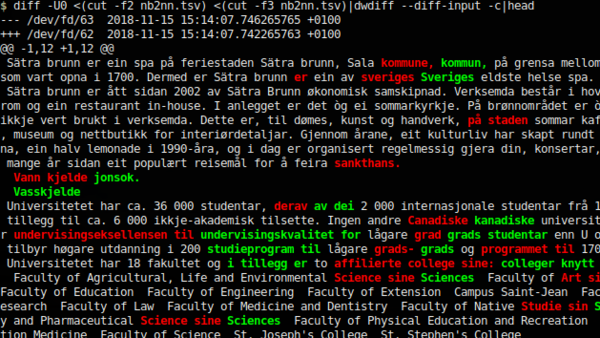

Now view the word diff between MT and GOLD with:

$ diff -U0 <(cut -f2 nb2nn.tsv) <(cut -f3 nb2nn.tsv) | dwdiff --diff-input -c | less

and find the original if you need it in cut -f1 nb2nn.tsv.
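As a rough summary before reading the diff, you can count how many segments were published exactly as the MT suggested them, meaning column 2 equals column 3. Treat this as an indicator of how much MT output was kept as-is, not as a quality score. The following is a minimal sketch. `unedited_ratio` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report how many rows of a SOURCE<TAB>MT<TAB>GOLD file
# have MT output identical to the published (GOLD) text.
unedited_ratio() {
  awk -F'\t' '
    # Append "" so awk compares as strings, never numerically.
    { total++; if (($2 "") == ($3 "")) same++ }
    END {
      if (total == 0) { print "0/0"; exit }
      printf "%d/%d (%.1f%%) unchanged\n", same, total, 100 * same / total
    }
  ' "$1"
}
```

For example, `unedited_ratio nb2nn.tsv` prints a line such as `N/M (P%) unchanged`.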

For some language pairs there is no separate file. Instead, you have to get the `_2CODE` files or the `_2_` file, which bundle several pairs. For example, sv2da is in https://dumps.wikimedia.org/other/contenttranslation/20180810/cx-corpora._2da.text.tmx.gz and da2sv is in https://dumps.wikimedia.org/other/contenttranslation/20180810/cx-corpora._2_.text.tmx.gz. Filter the file down to the pair you want:

```shell
zcat ~/Nedlastingar/cx-corpora._2_.text.tmx.gz \
  | xmlstarlet sel -t \
      -m '//tu[@srclang="da" and tuv/prop/text()="mt" and tuv/@xml:lang="sv"]' \
      -c 'tuv[./prop/text()="source"]/seg/text()' -o $'\t' \
      -c 'tuv[./prop/text()="mt"]/seg/text()' -o $'\t' \
      -c 'tuv[./prop/text()="user"]/seg/text()' -n \
  > da2sv.tsv
```
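The dump files above appear to follow a `cx-corpora.<PAIR>.text.tmx.gz` pattern under a dated directory. That pattern is inferred only from the two example URLs and the `nb2nn` file name, so check the listing under https://dumps.wikimedia.org/other/contenttranslation/ first. Assuming it holds, a hypothetical helper `cx_url` could build the download URL:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a Content Translation dump URL.
#   $1 = dump date directory (e.g. 20180810)
#   $2 = pair part of the file name: SRC2TRG (e.g. nb2nn), _2TRG (e.g. _2da) or _2_
# The naming scheme is inferred from example URLs, not from any documentation.
cx_url() {
  printf 'https://dumps.wikimedia.org/other/contenttranslation/%s/cx-corpora.%s.text.tmx.gz\n' "$1" "$2"
}
```

For example, `wget -P ~/Nedlastingar "$(cx_url 20180810 _2_)"` would save the `_2_` file into the folder used in the commands above.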