User talk:Rlopez/Application

(Created page with "== Contact information == '''Name: ''' Roque Enrique López Condori '''Email: ''' rlopezc27@gmail.com '''IRC: ''' Roque '''Personal page: ''' http://maskaygroup.com/rlopez...") |

|||

| (23 intermediate revisions by the same user not shown) | |||

| Line 23: | Line 23: | ||

=== Description === |

=== Description === |

||

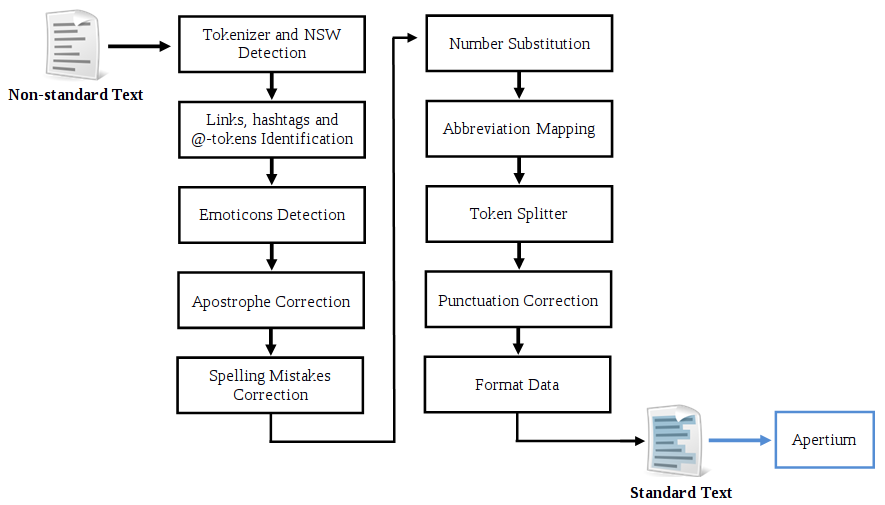

After read some papers about Text Normalitation (see References Section) and doing some experiments with Apertium, for this project I identify 10 main sub-modules to normalize non-standard texts. The Figure below shows the architecture of my proposal: |

|||

[[File:Arq.png|center]] |

|||

'''1. Tokenizer and NSW Detection''' |

|||

First, the text is broken up into whitespace-separated tokens. Regular expressions can be used to extract the tokens. For each token we evaluate if it is or not a NSW (Non-Standard Word) using the dictionary criterion (search the word in a dictionary of standard words). If the word is a NSW, we pass to the next sub-module; otherwise we evaluate the next token. |

|||

'''2. Links, hashtags and @-tokens Identification''' |

|||

Links, hashtags and @-tokens must not be translated. For example: |

|||

<pre> |

|||

http://www.timeanddate.com/astronomy/moon/blue-moon.html |

|||

#playing |

|||

@worker |

|||

</pre> |

|||

Using Apertium we get these translations: |

|||

<pre> |

|||

http://Www.timeanddate.com/luna/de astronomía/azul-luna.html |

|||

#Jugando |

|||

@Trabajador |

|||

</pre> |

|||

That is wrong. To solve this problem we have to identify that kind of token and put it in superblanks (http://wiki.apertium.org/wiki/Superblanks). So, we avoid the translation of these tokens. To identify these tokens, the use of regular expression is a good alternative. |

|||

'''3. Emoticons Detection''' |

|||

I have already collected a list of emoticons with their polarity (positive or negative, initially happy and sad). This list is available in: http://maskaygroup.com/rlopez/resource/emoticons.txt The emoticons don’t need to be translated. However, optionally we could add the emotion that represent. For example: |

|||

<pre> |

|||

Hello :) --> Hola :) |

|||

Hello :) --> Hola :) [alegre] |

|||

</pre> |

|||

Regular expressions can used to detect the emoticons. |

|||

'''4. Apostrophe Correction''' |

|||

Another kind of NSW is the missing apostrophe <ref>Clark, E., & Araki, K. (2011). Text normalitation in social media: progress, problems and applications for a pre-processing system of casual English. Procedia-Social and Behavioral Sciences, 27, 2-11.</ref>. For example: |

|||

<pre> |

|||

wouldnt --> wouldn't |

|||

dont --> don't |

|||

shell --> she'll or shell? |

|||

</pre> |

|||

To solve that, we can create a list of commons apostrophe occurrences for these cases. However, for the third case (she'll or shell) the translation is ambiguous. The Trigram Language Model can help to resolve the ambiguity. I think that there are few similar cases, but a deeper analysis can help better. |

|||

'''5. Spelling Mistakes Correction''' |

|||

Spelling Mistakes are typical in in comments and tweets. For example: |

|||

<pre> |

|||

smokin --> smoking |

|||

gooood --> god or good? |

|||

</pre> |

|||

Usually the first step in this sub-module is the elimination of repeated letters (=>3). Some cases (like the first example), the correction of these non-standards words is simple. Using an edit distance (like Levenshtein) and a dictionary, we can to find the minimum distance between the wrong word and one dictionary word <ref>Ruiz, P., Cuadros, M., & Etchegoyhen, T. (2014). Lexical Normalitation of Spanish Tweets with Rule-Based Components and Language Models. Procesamiento del Lenguaje Natural, 52, 45-52.</ref>. However in the second example, gooood may refer to '''god''' or '''good''' depending on context <ref>Han, B., & Baldwin, T. (2011, June). Lexical normalisation of short text messages: Makn sens a# twitter. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1 (pp. 368-378). Association for Computational Linguistics.</ref>. In that case, we can use the Trigram Language Model as an alternative to select the right correction. |

|||

'''6. Number Substitution''' |

|||

Other non-standards words are generated substituting letters with digit. For example: |

|||

<pre> |

|||

2gether --> together |

|||

be4 --> before |

|||

</pre> |

|||

One possibility to solve this problem is creating a dictionary where each digit contains their possible sounds. For example, {'4': ['for', 'fore', etc.]}. Then, we can replace all the sounds searching the right one. Another solution can be using an approach that not requires pre-categoritation like the method proposed by Liu et al. <ref>Liu, F., Weng, F., Wang, B., & Liu, Y. (2011, June). Insertion, deletion, or substitution?: normaliziing text messages without pre-categoritation nor supervision. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies: short papers-Volume 2 (pp. 71-76). Association for Computational Linguistics.</ref>. |

|||

'''7. Abbreviation Mapping''' |

|||

Nowadays, abbreviations and Internet slang are very commons. For example: |

|||

<pre> |

|||

u --> you |

|||

tgthr --> together |

|||

</pre> |

|||

To solve that, we can use a dictionary like [http://en.wiktionary.org/wiki/Appendix:English_internet_slang this] or other bigger and map them to their meanings. |

|||

'''8. Token Splitter''' |

|||

During the experiments in the coding challenge, many two pairs of words were written together. The problem of a single non-standard word is exacerbated by the deletion of whitespace between certain tokens. For example: |

|||

<pre> |

|||

youand --> you and |

|||

twoworkers -- > two workers |

|||

</pre> |

|||

To correct that, we can split the token in two tokens and verify iteratively if the two tokens are contained in a dictionary (correct words). Another alternative can be the proposal of Sproat et al.<ref>Sproat, R., Black, A. W., Chen, S., Kumar, S., Ostendorf, M., & Richards, C. (2001). Normalitation of non-standard words. Computer Speech & Language, 15(3), 287-333.</ref>. |

|||

'''9. Punctuation Correction''' |

|||

In this sub-module, basically we can solve two problems: the hyphen replacement and the non-standard capitalisation. For example: |

|||

<pre> |

|||

re-integrate --> reintegrate |

|||

i know her --> I know her |

|||

</pre> |

|||

For the first example, we verify if the word contains a hyphen and remove it. For the second example, we can use the Trigram Language Model to verify if the letter 'i' refers to the pronoun or not. |

|||

'''10. Format Data''' |

|||

Finally, this sub-module returns all the tokens separate by a simple whitespace. This will be the input of Apertium. |

|||

'''How it will work?''' |

|||

Initially, like this: |

|||

<pre> |

<pre> |

||

echo 'helllooo worrrddd!!!' | python normalizer_module.py 'en' | apertium en-es |

|||

Example |

|||

</pre> |

</pre> |

||


Latest revision as of 13:35, 21 March 2014

Contents

- 1 Contact information

- 2 Why is it you are interested in machine translation?

- 3 Why is it that you are interested in the Apertium project?

- 4 Which of the published tasks are you interested in? What do you plan to do?

- 5 List your skills and give evidence of your qualifications

- 6 List any non-Summer-of-Code plans you have for the Summer

- 7 About me

- 8 References

Contact information

Name: Roque Enrique López Condori

Email: rlopezc27@gmail.com

IRC: Roque

Personal page: http://maskaygroup.com/rlopez/

Github repo: https://github.com/rlopezc27

Assembla repo: https://www.assembla.com/profile/roque27

Why is it you are interested in machine translation?

I am a master's student majoring in Natural Language Processing, and I enjoy many tasks in this area. Machine translation is a task with many challenges. Nowadays, with the growth of the Internet, it is very common to find non-standard text containing spelling mistakes, Internet abbreviations, etc. Such text is a big challenge for machine translation. I am very interested in obtaining better translations through good preprocessing of this kind of text.

Why is it that you are interested in the Apertium project?

I think Apertium is one of the most important open-source NLP projects. I'm very interested in NLP and have worked on several projects in this area. Unfortunately, I haven't yet had the opportunity to contribute to an open-source project, and I think Apertium is the right place to start. In addition, Apertium has many very interesting tasks to work on. I would really like to participate in this organization because I have a strong desire to contribute to the open-source community.

Which of the published tasks are you interested in? What do you plan to do?

I am interested in the “Improving support for non-standard text input” task. I plan to work with English, Spanish and Portuguese.

Description

After reading several papers on text normalization (see the References section) and running some experiments with Apertium, I have identified 10 main sub-modules for normalizing non-standard text. The figure below shows the architecture of my proposal:

[Figure: architecture of the proposed normalization module (Arq.png)]

1. Tokenizer and NSW Detection

First, the text is split into whitespace-separated tokens; regular expressions can be used to extract them. For each token, we decide whether it is an NSW (non-standard word) using the dictionary criterion, i.e. by looking the word up in a dictionary of standard words. If the token is an NSW, it is passed to the next sub-module; otherwise, we move on to the next token.
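As a minimal sketch of this first step (not the final implementation), the code below tokenizes with a regular expression and applies the dictionary criterion. The tiny `STANDARD_WORDS` set and all function names are hypothetical placeholders for a real dictionary of standard words.

```python
import re

# Hypothetical stand-in for a real dictionary of standard words.
STANDARD_WORDS = {"i", "know", "her", "you", "and", "hello", "world"}

# Whitespace-separated tokens; \S+ keeps punctuation attached to the token.
TOKEN_RE = re.compile(r"\S+")


def tokenize(text):
    """Split text into whitespace-separated tokens."""
    return TOKEN_RE.findall(text)


def is_nsw(token, dictionary=STANDARD_WORDS):
    """Dictionary criterion: a token is an NSW if its lowercased form,
    stripped of surrounding punctuation, is not a known standard word."""
    word = token.strip(".,!?;:\"'()").lower()
    return word not in dictionary


def detect_nsw(text):
    """Return (token, is_nsw) pairs; only NSWs go on to the next sub-modules."""
    return [(tok, is_nsw(tok)) for tok in tokenize(text)]


print(detect_nsw("hello wrld, you and u"))
# [('hello', False), ('wrld,', True), ('you', False), ('and', False), ('u', True)]
```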

2. Links, hashtags and @-tokens Identification

Links, hashtags and @-tokens must not be translated. For example:

- http://www.timeanddate.com/astronomy/moon/blue-moon.html
- #playing
- @worker

Using Apertium, we get these translations:

- http://Www.timeanddate.com/luna/de astronomía/azul-luna.html
- #Jugando
- @Trabajador

These translations are wrong. To solve this problem, we have to identify these kinds of tokens and put them in superblanks (http://wiki.apertium.org/wiki/Superblanks) so that they are not translated. Regular expressions are a good way to identify these tokens.
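A possible sketch of this sub-module, assuming the normalizer emits Apertium's stream format, in which text enclosed in square brackets is a superblank that passes through untranslated. The patterns and helper names are hypothetical simplifications (real URLs and hashtags are messier), and escaping `\`, `[` and `]` inside the superblank is an assumption about what the stream requires.

```python
import re

# Simplified, hypothetical patterns; real URLs and hashtags are messier.
URL_RE = re.compile(r"^(?:https?://|www\.)\S+$", re.IGNORECASE)
HASHTAG_RE = re.compile(r"^#\w+$")
MENTION_RE = re.compile(r"^@\w+$")


def is_protected(token):
    """True for links, hashtags and @-tokens, which must not be translated."""
    return bool(URL_RE.match(token) or HASHTAG_RE.match(token) or MENTION_RE.match(token))


def to_superblank(token):
    """Wrap a token in [...] so that it is treated as a superblank.
    Backslashes and square brackets inside are escaped (assumed sufficient)."""
    escaped = token.replace("\\", "\\\\").replace("[", "\\[").replace("]", "\\]")
    return "[" + escaped + "]"


def protect(tokens):
    """Replace every protected token by its superblank form."""
    return [to_superblank(t) if is_protected(t) else t for t in tokens]


tokens = "see http://www.timeanddate.com/astronomy/moon/blue-moon.html #playing @worker".split()
print(" ".join(protect(tokens)))
# see [http://www.timeanddate.com/astronomy/moon/blue-moon.html] [#playing] [@worker]
```

Note that the default plain-text deformatter would likely escape literal brackets, so how this pre-marked stream is handed to the rest of the pipeline is left open in this sketch.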

3. Emoticons Detection

I have already collected a list of emoticons with their polarity (positive or negative; initially, happy and sad). The list is available at http://maskaygroup.com/rlopez/resource/emoticons.txt. Emoticons do not need to be translated; however, we could optionally add the emotion they represent. For example:

- Hello :) --> Hola :)
- Hello :) --> Hola :) [alegre]

Regular expressions can be used to detect the emoticons.
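A sketch of emoticon detection under stated assumptions: a small hypothetical `EMOTICONS` dictionary stands in for the collected emoticons.txt list (whose file format is not assumed here), and the Spanish `LABELS` used for the optional annotation are illustrative. The regular expression only matches emoticons that form a whole whitespace-delimited token, so emoticon-like fragments inside longer tokens (for example inside a URL) are not picked up.

```python
import re

# Hypothetical excerpt standing in for emoticons.txt (emoticon -> polarity).
EMOTICONS = {":)": "positive", ":-)": "positive", ":D": "positive",
             ":(": "negative", ":-(": "negative"}

# Hypothetical Spanish labels for the optional emotion annotation.
LABELS = {"positive": "alegre", "negative": "triste"}

# Longest emoticons first; the lookarounds require a whole whitespace-delimited token.
EMOTICON_RE = re.compile(
    r"(?<!\S)("
    + "|".join(re.escape(e) for e in sorted(EMOTICONS, key=len, reverse=True))
    + r")(?!\S)"
)


def find_emoticons(text):
    """Return (emoticon, polarity) pairs found in the text."""
    return [(m.group(1), EMOTICONS[m.group(1)]) for m in EMOTICON_RE.finditer(text)]


def annotate(translated, original):
    """Optionally append the emotion of the source emoticons to a translation."""
    labels = [LABELS[polarity] for _, polarity in find_emoticons(original)]
    return translated + "".join(" [" + label + "]" for label in labels)


print(find_emoticons("Hello :) see http://x.org :-("))
# [(':)', 'positive'), (':-(', 'negative')]
print(annotate("Hola :)", "Hello :)"))
# Hola :) [alegre]
```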

4. Apostrophe Correction

Another kind of NSW is the missing apostrophe [1]. For example:

- wouldnt --> wouldn't
- dont --> don't
- shell --> she'll or shell?

To solve this, we can create a list of common missing-apostrophe forms. However, the third case is ambiguous (she'll or shell). A trigram language model can help resolve the ambiguity. I think there are few similar cases, but a deeper analysis would clarify this.
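To make the idea concrete, here is a minimal sketch, assuming a hand-made `APOSTROPHE_FIXES` table and a toy trigram language model with add-one smoothing trained on a tiny hypothetical `CORPUS`; a real model would be trained on the tweets collected during the project. Unambiguous forms are replaced directly, and for ambiguous ones the candidate giving the highest sentence log-probability wins.

```python
import math
from collections import Counter

# Hypothetical list of common missing-apostrophe forms; "shell" is ambiguous.
APOSTROPHE_FIXES = {
    "wouldnt": ["wouldn't"],
    "dont": ["don't"],
    "shell": ["she'll", "shell"],
}

# Tiny hypothetical training corpus; a real model would use collected tweets.
CORPUS = [
    "i think she'll come tomorrow",
    "she'll be late",
    "i found a shell on the beach",
    "the shell is empty",
]


class TrigramLM:
    """Toy trigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.trigrams = Counter()
        self.histories = Counter()  # counts of (w1, w2) used as trigram histories
        vocab = set()
        for sentence in sentences:
            words = ["<s>", "<s>"] + sentence.split() + ["</s>"]
            vocab.update(words[2:])  # every word that can be predicted
            for i in range(2, len(words)):
                self.trigrams[tuple(words[i - 2:i + 1])] += 1
                self.histories[tuple(words[i - 2:i])] += 1
        self.vocab_size = len(vocab)

    def log_prob(self, words):
        """Sum of log P(w3 | w1, w2) over the padded token list."""
        padded = ["<s>", "<s>"] + list(words) + ["</s>"]
        total = 0.0
        for i in range(2, len(padded)):
            count = self.trigrams[tuple(padded[i - 2:i + 1])]
            history = self.histories[tuple(padded[i - 2:i])]
            total += math.log((count + 1) / (history + self.vocab_size))
        return total


def fix_apostrophes(tokens, lm):
    """Restore missing apostrophes; ambiguous forms are resolved by choosing
    the candidate that gives the highest sentence log-probability."""
    fixed = list(tokens)
    for i, token in enumerate(tokens):
        candidates = APOSTROPHE_FIXES.get(token.lower())
        if candidates:
            fixed[i] = max(candidates,
                           key=lambda c: lm.log_prob(fixed[:i] + [c] + fixed[i + 1:]))
    return fixed


lm = TrigramLM(CORPUS)
print(fix_apostrophes("i think shell come tomorrow".split(), lm))
# ['i', 'think', "she'll", 'come', 'tomorrow']
print(fix_apostrophes("i found a shell on the beach".split(), lm))
# ['i', 'found', 'a', 'shell', 'on', 'the', 'beach']
```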

5. Spelling Mistakes Correction

Spelling mistakes are typical in comments and tweets. For example:

- smokin --> smoking
- gooood --> god or good?

Usually, the first step in this sub-module is to shorten runs of three or more repeated letters. In some cases (like the first example), correcting these non-standard words is simple: using an edit distance (such as the Levenshtein distance) and a dictionary, we can find the dictionary word with the minimum distance to the misspelled word [2]. However, in the second example, gooood may refer to god or good depending on the context [3]. In that case, we can use the trigram language model to select the right correction.
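A minimal sketch of this sub-module, assuming a small hypothetical `DICTIONARY`: runs of three or more letters are shortened to one or two letters (which yields both god and good for gooood), and each candidate is matched against the dictionary with a standard dynamic-programming Levenshtein distance. When several words tie, as with gooood, the function returns all of them so that the language model can decide from context; the `max_distance=2` cut-off is an arbitrary assumption.

```python
import re
from itertools import product

# Hypothetical stand-in for a dictionary of correct words.
DICTIONARY = {"smoking", "good", "god", "hello", "world"}


def squeeze_candidates(word):
    """Runs of 3+ identical characters are shortened to 1 or 2 characters,
    giving every combination as a candidate ("gooood" -> "god", "good")."""
    runs = re.findall(r"((.)\2*)", word)  # (run, char) pairs
    options = [[char, char * 2] if len(run) >= 3 else [run] for run, char in runs]
    return {"".join(choice) for choice in product(*options)}


def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def correct(word, dictionary=DICTIONARY, max_distance=2):
    """Return all dictionary words at minimum edit distance from any squeezed
    candidate; several results mean the context must decide."""
    best, results = None, set()
    for cand in squeeze_candidates(word.lower()):
        for entry in dictionary:
            d = levenshtein(cand, entry)
            if d > max_distance:
                continue
            if best is None or d < best:
                best, results = d, {entry}
            elif d == best:
                results.add(entry)
    return sorted(results)


print(correct("smokin"))  # ['smoking']
print(correct("gooood"))  # ['god', 'good']
```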

6. Number Substitution

Other non-standard words are formed by substituting digits for letters. For example:

- 2gether --> together
- be4 --> before

One way to solve this problem is to create a dictionary that maps each digit to its possible sounds, for example {'4': ['for', 'fore', ...]}. Then we can try each possible sound in place of the digit and search for the right word. Another solution is an approach that does not require pre-categorization, such as the method proposed by Liu et al. [4].
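One way to sketch the digit-to-sound dictionary approach (not the method of Liu et al.): every digit is replaced by each of its hypothetical candidate sounds, and the spellings found in a small hypothetical `DICTIONARY` are kept. Tokens with no match are returned unchanged.

```python
import re
from itertools import product

# Hypothetical digit -> possible sounds table.
DIGIT_SOUNDS = {
    "2": ["to", "too", "two"],
    "4": ["for", "fore", "four"],
    "8": ["ate", "eight"],
}

# Hypothetical stand-in for a dictionary of correct words.
DICTIONARY = {"together", "before", "today"}


def expand_digits(token):
    """Generate every spelling obtained by replacing each digit with one of its sounds."""
    pieces = re.split(r"(\d)", token)  # digits are kept as separate pieces
    options = [DIGIT_SOUNDS.get(p, [p]) for p in pieces]
    return {"".join(choice) for choice in product(*options)}


def substitute_numbers(token, dictionary=DICTIONARY):
    """Return the dictionary words reachable by digit substitution (sorted),
    or the token itself in a one-element list if none is found."""
    if not any(ch.isdigit() for ch in token):
        return [token]
    matches = sorted(w for w in expand_digits(token.lower()) if w in dictionary)
    return matches or [token]


print(substitute_numbers("2gether"))  # ['together']
print(substitute_numbers("be4"))      # ['before']
```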

7. Abbreviation Mapping

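Nowadays, abbreviations and Internet slang are very common. For example:

- u --> you
- tgthr --> together

To handle them, we can use a dictionary such as the Wiktionary appendix of English Internet slang (http://en.wiktionary.org/wiki/Appendix:English_internet_slang), or a larger one, to map them to their meanings.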
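A minimal sketch of the lookup, assuming the slang dictionary has already been loaded into a Python dict; the `SLANG` entries shown are a hypothetical excerpt, and keeping a leading capital letter is a design choice of this sketch.

```python
# Hypothetical excerpt of a slang dictionary (abbreviation -> meaning).
SLANG = {"u": "you", "tgthr": "together", "r": "are", "pls": "please"}


def expand_abbreviations(tokens, slang=SLANG):
    """Map known abbreviations to their meanings, keeping a leading capital."""
    out = []
    for tok in tokens:
        key = tok.lower()
        if key not in slang:
            out.append(tok)  # not an abbreviation we know: keep as-is
            continue
        expansion = slang[key]
        if tok[0].isupper():
            expansion = expansion[0].upper() + expansion[1:]
        out.append(expansion)
    return out


print(" ".join(expand_abbreviations("U r going tgthr".split())))
# You are going together
```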

8. Token Splitter

During my experiments for the coding challenge, many pairs of words were written together. The problem of a single non-standard word is exacerbated when the whitespace between tokens is deleted. For example:

- youand --> you and
- twoworkers --> two workers

To correct this, we can split the token into two parts at each possible position and check whether both parts are in a dictionary of correct words. Another alternative is the approach proposed by Sproat et al. [5].
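A sketch of the two-way split described above, assuming a small hypothetical `DICTIONARY`. Tokens that are already dictionary words are left alone, which prevents, for example, turning into into "in to"; when several splits are valid, this sketch simply takes the first and leaves smarter ranking to the language model.

```python
# Hypothetical stand-in for a dictionary of correct words.
DICTIONARY = {"you", "and", "two", "workers", "in", "to", "into"}


def split_candidates(token, dictionary=DICTIONARY):
    """Try every split point; keep the pairs whose halves are both dictionary words."""
    word = token.lower()
    return [(word[:i], word[i:]) for i in range(1, len(word))
            if word[:i] in dictionary and word[i:] in dictionary]


def split_token(token, dictionary=DICTIONARY):
    """Leave known words alone; otherwise use the first valid split (lowercased), if any."""
    if token.lower() in dictionary:
        return [token]
    candidates = split_candidates(token, dictionary)
    return list(candidates[0]) if candidates else [token]


print(split_token("youand"))      # ['you', 'and']
print(split_token("twoworkers"))  # ['two', 'workers']
```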

9. Punctuation Correction

In this sub-module we mainly solve two problems: hyphen removal and non-standard capitalisation. For example:

- re-integrate --> reintegrate
- i know her --> I know her

For the first example, we check whether the word contains a hyphen and remove it. For the second example, we can use the trigram language model to check whether the letter 'i' refers to the pronoun.
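A minimal sketch of both fixes under two labeled simplifications: the hyphen is removed only when the joined form appears in a small hypothetical `DICTIONARY` (so compounds like well-known survive), and a plain rule that upper-cases a lone "i" stands in for the trigram language model.

```python
# Hypothetical stand-in for a dictionary of correct words.
DICTIONARY = {"reintegrate", "cooperate", "email"}


def fix_hyphen(token, dictionary=DICTIONARY):
    """Drop hyphens when the joined form is a known word (re-integrate -> reintegrate);
    hyphenated compounds whose joined form is unknown are left unchanged."""
    if "-" in token:
        joined = token.replace("-", "")
        if joined.lower() in dictionary:
            return joined
    return token


def fix_pronoun_i(tokens):
    """Simplified rule standing in for the trigram model: a lone 'i' becomes 'I'."""
    return ["I" if tok == "i" else tok for tok in tokens]


def fix_punctuation(text):
    """Apply both fixes to a whitespace-tokenized text."""
    return " ".join(fix_pronoun_i([fix_hyphen(t) for t in text.split()]))


print(fix_punctuation("i know her"))               # I know her
print(fix_punctuation("re-integrate well-known"))  # reintegrate well-known
```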

10. Format Data

Finally, this sub-module returns all the tokens separated by a single space. This output is the input to Apertium.

How will it work?

Initially, like this:

echo 'helllooo worrrddd!!!' | python normalizer_module.py 'en' | apertium en-es
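As an illustration of how normalizer_module.py could fit into this pipeline, the hypothetical skeleton below reads text from standard input, takes the language code as its only argument, and writes space-separated tokens (sub-module 10) to standard output. Only two placeholder steps are wired in (squeezing long letter runs and a hypothetical `SLANG` lookup); the other sub-modules would be chained inside `normalize_token`.

```python
import re
import sys

# Hypothetical per-language slang tables standing in for real resources.
SLANG = {
    "en": {"u": "you", "r": "are", "tgthr": "together"},
    "es": {"q": "que"},
    "pt": {"vc": "você"},
}

REPEAT_RE = re.compile(r"(.)\1{2,}")  # any character repeated three or more times


def normalize_token(token, lang):
    """Placeholder chain: squeeze long character runs, then look up slang.
    The remaining sub-modules would be plugged in here."""
    token = REPEAT_RE.sub(r"\1\1", token)  # e.g. 'helllooo' -> 'helloo'
    return SLANG[lang].get(token.lower(), token)


def normalize(text, lang):
    """Sub-module 10 (Format Data): normalized tokens joined by single spaces."""
    return " ".join(normalize_token(tok, lang) for tok in text.split())


def main(argv):
    """Filter stdin to stdout so the output can be piped into Apertium."""
    lang = argv[1] if len(argv) > 1 else None
    if lang not in SLANG:
        print("usage: python normalizer_module.py {en|es|pt}", file=sys.stderr)
        return 1
    for line in sys.stdin:
        print(normalize(line, lang))
    return 0


# Only act as a filter when a language argument is given.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

With only these placeholders, `echo 'helllooo worrrddd!!!' | python normalizer_module.py 'en'` would print `helloo worrdd!!`; mapping those forms to dictionary words is the job of the spelling-correction sub-module.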

Why should Google and Apertium sponsor this project?

Machine translation systems are quite fragile when handling non-standard input such as spelling mistakes, Internet abbreviations, etc. This kind of input reduces machine translation performance. Currently, Apertium has no preprocessing module to normalize this type of non-standard input. With a good text normalization module, Apertium could significantly increase translation quality and produce more human-like translations. This module would also be helpful for other NLP tasks.

How, and whom, will it benefit in society?

English, Spanish and Portuguese are among the most widely spoken languages. This module will improve translations for them and benefit Apertium users who are learning these languages.

Work Plan

Coding Challenge

I have finished the coding challenge and, in addition to English, I added support for two more languages (Spanish and Portuguese). All the instructions and explanations are in my GitHub repo.

Community Bonding Period

- Familiarization with the Apertium tools and community.
- Find and analyze language resources for English, Spanish and Portuguese.
- Make the preparations needed for the implementation.

Week Plan

Initially, I will work with three languages I am very familiar with: English, Spanish and Portuguese. In the third month, I plan to work with at least two new languages, chosen according to the mentor's suggestions and the complexity of each language's specifications.

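| GSoC Week | Tasks |
|---|---|
| Week 1 | Collect data for English (slang, tweets for the trigram language model, etc.). Collect data for Spanish (slang, tweets for the trigram language model, etc.). |
| Week 2 | Implement the 10 sub-modules for English and Spanish (part 1). |
| Week 3 | Implement the 10 sub-modules for English and Spanish (part 2). Compare the new Apertium results with other machine translation systems to identify improvements (English and Spanish). |
| Week 4 | Testing, bug fixing and documentation. |
| First Deliverable: | Normalizer module for non-standard text in English and Spanish |
| Week 5 | Collect data for Portuguese (slang, tweets for the trigram language model, etc.). Implement the 10 sub-modules for Portuguese (part 1). |
| Week 6 | Implement the 10 sub-modules for Portuguese (part 2). Compare the new Apertium results with other machine translation systems to identify improvements (Portuguese). |
| Week 7 | Study the specifications of the new languages. Collect data for the new languages. |
| Week 8 | Testing, bug fixing and documentation. |
| Second Deliverable: | Normalizer module for non-standard text in English, Spanish and Portuguese |
| Week 9 | Implement the sub-modules for the new languages (part 1). |
| Week 10 | Implement the sub-modules for the new languages (part 2). |
| Week 11 | Compare the new Apertium results with other machine translation systems to identify improvements (new languages). Testing and bug fixing. |
| Week 12 | Final documentation. Write a paper about the work. |
| Third Deliverable: | A full implementation of the project |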

List your skills and give evidence of your qualifications

I am currently a second-year master's student majoring in Natural Language Processing. After graduating, I worked for a little over two years on three NLP projects that are directly related to this project. Through my jobs and studies I have gained the following skills:

Programming skills: During my undergraduate studies, I took courses in Python, Java and C++ programming, and I finished my degree ranked in the top 5. In my second and third jobs, I used Python as my main programming language, and in my master's I use Python even more often. Some of my work is in my GitHub repo.

Natural Language Processing: I have worked on three NLP projects. The main topics included text processing, sentiment analysis, text classification, summarization, etc.

Spanish, Portuguese and English: I'm a native Spanish speaker. I have been living in Brazil for over a year, which has improved my Portuguese. As for English, I studied it for two years.

I develop all of my own software under free licenses and make an effort to work in groups as often as possible. Unfortunately, however, I can't claim much experience with open-source projects.

CV: More about me in my complete CV.

List any non-Summer-of-Code plans you have for the Summer

Between May 19 and August 18, my activities will focus mainly on the GSoC project and the progress of my master's work. This year I don't have any courses in my master's, so my tasks are research activities. I do not intend to travel; I will stay in São Paulo, Brazil. I plan to work at least 30 hours a week on this project, and I can work more hours if necessary.

About me

I studied at San Agustin National University in Perú, where I earned my BA (Hons) degree in System Engineering. After my studies I worked for two years. In the first year, I worked on a research project on automatic summarization of medical records; as a result of that work, I got some publications on medical record classification. In the second year, I was a member of the Lindexa startup (a Natural Language Processing startup), which was one of the 10 startups that won the Wayra-Peru 2011 competition.

From Perú I moved to Brazil, where I am doing my Master's in Computer Science at São Paulo University (http://www.nilc.icmc.usp.br/nilc/index.php). My research topic is opinion summarization. Last year in Brazil, I worked at the DicionarioCriativo startup, an online dictionary that relates words, concepts, phrases, quotes, images and other content by semantic fields.

This is my first year applying to GSoC. I hope to be part of your team this summer.

References

1. Clark, E., & Araki, K. (2011). Text normalization in social media: progress, problems and applications for a pre-processing system of casual English. Procedia-Social and Behavioral Sciences, 27, 2-11.
2. Ruiz, P., Cuadros, M., & Etchegoyhen, T. (2014). Lexical Normalization of Spanish Tweets with Rule-Based Components and Language Models. Procesamiento del Lenguaje Natural, 52, 45-52.
3. Han, B., & Baldwin, T. (2011, June). Lexical normalisation of short text messages: Makn sens a #twitter. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1 (pp. 368-378). Association for Computational Linguistics.
4. Liu, F., Weng, F., Wang, B., & Liu, Y. (2011, June). Insertion, deletion, or substitution? Normalizing text messages without pre-categorization nor supervision. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies: short papers-Volume 2 (pp. 71-76). Association for Computational Linguistics.
5. Sproat, R., Black, A. W., Chen, S., Kumar, S., Ostendorf, M., & Richards, C. (2001). Normalization of non-standard words. Computer Speech & Language, 15(3), 287-333.