User:Ksnmi/Application


Latest revision as of 09:19, 21 March 2014

Contents

- 1 Introduction

- 2 Primary Goal

- 3 Coding Challenges

- 4 Non Standard features in the Text

- 4.1 Use of content specific terms

- 4.2 Use of Emoticons

- 4.3 Use of Repetitive or Extended Words

- 4.4 Handling repetitive expressions ( with spaces )

- 4.5 Handling Hashtags

- 4.6 Abbreviation and Acronyms (CONVENTIONAL)

- 4.7 Abbreviations (UNCONVENTIONAL)

- 4.8 Apostrophe correction

- 4.9 Diacritics restoration

- 4.10 Spelling mistakes

- 4.11 Spacing and hyphen variation & optional hyphen

- 4.12 Handling Links

- 5 Prototype of the Toolkit

- 5.1 Step.1 Language Identification

- 5.2 Step.2 Removing Emoticons ( as Regular Expressions )

- 5.3 Step.3 Superblank addition for Hashtag and other content specific terms/symbols

- 5.4 Step.4 Tokenize

- 5.5 Step.5 Removing Emoticons ( as per-token )

- 5.6 Step.6 Substituting Abbreviation (CONVENTIONAL) list.

- 5.7 Step.7 Handling Extended Words

- 5.8 Step.8 Apostrophe correction

- 5.9 Step.9 Solving issue for Abbreviation ( NON-CONVENTIONAL )

- 5.10 Step.10 Checking words for Capitalisation

- 5.11 Step.11 Selecting the best candidate

- 5.12 Step.12 Addition of escape sequence

- 6 Literature Review

- 7 Outline of Research Paper

- 8 WorkPlan

- 9 References

Introduction

This section contains some points of introduction from my side.

- Name : Akshay Minocha

- E-mail address : akshayminocha5@gmail.com | akshay.minocha@students.iiit.ac.in

- Other information that may be useful to contact you: nick on the #apertium channel: ksnmi

- Why is it you are interested in machine translation?

- I am interested in language, and machine translation is one way of handling language change. I have been studying the methods involved in the translation process, both theoretically and by building MT systems.

- Why is it that they are interested in the Apertium project?

- This current project on "non-standard text input" has everything I love to work on: informal data from Twitter, IRC, etc., noise removal, building FSTs, analysing data and, in the end, building machine translation systems. I believe that the approach I have in mind can be standardized for many source languages. Having learned from the process in English, I expect the language-independent module to be a good contribution. Also, the translation quality we are working on should remain intact when we give back to the community; at the very least, this is an important step. This is also one of those projects whose implementation will eventually help translation for all the language pairs in Apertium.

- Reasons why Google and Apertium should sponsor it - I'd love to work on the current project with the set of mentors. This project is important because the open MT community should also welcome the change in how people use language, in the form of popular non-standard text. This will extend our reach to more people and, in practice, increase the efficiency of the translation task.

- And a detailed work plan (including, if possible, a brief schedule with milestones and deliverables). Include time needed to think, to program, to document and to disseminate. - [Workplan]

- List your skills and give evidence of your qualifications. Tell us what is your current field of study, major, etc. Convince us that you can do the work. In particular we would like to know whether you have programmed before in open-source projects.

- I am a fourth-year student at the International Institute of Information Technology, Hyderabad, India, pursuing my Dual Degree (BTech+MS) in Computer Science and Digital Humanities. I have been inclined towards linguistic studies, including for my MS research.

- I have been associated with various kinds of projects; some important ones are listed below -

- I'm an active member of the Translation Process Research community in Europe, under the guidance of Michael Carl (Copenhagen Business School) and Srinivas Bangalore (AT&T, USA). Last summer at CBS I completed a project which models translators as expert or novice, based on their eye movements tracked while they perform a translation task. It appears in the Proceedings of ETRA 2014, Florida (Recognition of translator expertise using sequences of fixations and keystrokes, Pascual Martinez-Gomez, Akshay Minocha, Jin Huang, Michael Carl, Srinivas Bangalore, Akiko Aizawa).

- I was associated with SketchEngine as a programmer for almost a year. My work during the initial phase there resulted in a publication at ACL SIGWAC 2013 about building a quality web corpus from feed information.

- I was an initial contributor to the Health triangulation System, under the guidance of Dr. Jennifer Mankoff, HCI, Carnegie Mellon University.

- I have worked extensively on corpus linguistics and data mining tasks to support other contributions.

- A detailed CV can be found at [This Link]

- List any non-Summer-of-Code plans you have for the Summer, especially employment, if you are applying for internships, and class-taking. Be specific about schedules and time commitments. We would like to be sure you have at least 30 free hours a week to develop for our project.

- I am dedicated to this project and very excited about working on it. A commitment of 30 hours a week would not be a problem; I might even work more so as to complete the weekly goals described in the Workplan.

Primary Goal

- Build non-standard to standard support for at least 15 languages.

- Language Priority list to be decided by mentor.

- Integrate the whole support efficiently with Apertium

- Test it with other MT systems

- Publish Research Paper(s) from the results of this work

Coding Challenges

Analysing the issues in non-standard data

I put together a random set of 50 non-standard tweets and analysed them individually to see what goes wrong during the translation task.

Details of the analysis can be found at the following link - https://docs.google.com/spreadsheet/ccc?key=0ApJ82JmDw6DHdDBad1ZXay1LZDhQckpxcXZmQTl1VVE#gid=2

The sheet at the above link also gives details on the authenticity of the tweets (they were collected for an earlier project, hence the year 2011).

TranslationAnalysisSheet

The Extended word reduction task (Mailing list)

At the moment this works for English using the wordlist generated from the English dictionary.

The dictionary can be replaced by any other word list, and the output will follow that list accordingly.

Sample Input1 ->

Helllooo\ni\ncompletely\nloooooove\nyouuu\n!!!\nnooooo\ndoubt\nabout\nthat\n!!!!!!!!\n;)\n

Output2 (at the end of the processing)

^Helllooo/Hello$\n^i/i$\n^completely/completely$\n^loooooove/love$\n^youuu/you$\n^!!!/!!!$\n^nooooo/no$\n^doubt/doubt$\n^about/about$\n^that/that$\n^!!!!!!!!/!!!!!!!!$\n^;)/;)$\n

Please check it out at the GitHub link -> [ https://github.com/akshayminocha5/non_standard_repitition_check GitHub-Non-Standard-reduction ]
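A minimal sketch of how the reduction shown in the sample above could work, assuming a plain Python set as the word list. The function names (`candidates`, `reduce_word`, `annotate`) and the tiny `WORDS` set are illustrative assumptions and are not taken from the linked repository.

```python
import itertools

# Hypothetical word list; in practice this would be generated from a dictionary.
WORDS = {"hello", "i", "completely", "love", "you", "no", "doubt", "about", "that"}

def candidates(word):
    """Yield every form in which each run of a repeated character
    is shrunk to either one or two copies."""
    runs = [(ch, len(list(group))) for ch, group in itertools.groupby(word)]
    options = [[ch] if count == 1 else [ch, ch * 2] for ch, count in runs]
    # Note: the number of candidates doubles with every repeated run.
    for combo in itertools.product(*options):
        yield "".join(combo)

def reduce_word(word, wordlist):
    """Return the first reduced form found in the word list, else the word itself."""
    if word.lower() in wordlist:
        return word
    for cand in candidates(word):
        if cand.lower() in wordlist:
            return cand
    return word

def annotate(tokens, wordlist):
    """Format each token as ^original/reduced$, as in the sample output."""
    return ["^%s/%s$" % (tok, reduce_word(tok, wordlist)) for tok in tokens]

print("\n".join(annotate("Helllooo i loooooove youuu !!! ;)".split(), WORDS)))
```

Because the first match wins, a form such as "goood" could resolve to either "god" or "good" depending on the word list; ranking the candidates (for example by frequency) would be needed to settle such cases.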

Corpus Creation

Separate task on corpus creation for English ->

- With special symbols. The number of tweets was high and the list of emoticons obtained from them was considerable. I ended up finding around 545 of the most frequently used emoticons (the list of emoticons from the Twitter dataset can be found here: Emoticons_NON_Standard).

Number of Posts -> 475,179

Link -> Emoticon_dataset

- Abbreviations are words which are not in the dictionary but which are used on social platforms, especially ones like Twitter where users face a tight character limit.

Around 100 of the most common abbreviations from tweets collected over a period of time are listed at the following link -> Abbreviations_english

Number of Posts -> 94,290

Link -> abbreviations_english_dataset

- Repetitive or extended words and punctuation -> Using a simple algorithm, I separated these occurrences. Generating a word list shows us how these words are typically used, and also helps us standardize them for further processing.

Number of Posts -> 411,404

Link -> Extended_words_dataset

Non Standard features in the Text

I analysed the most common categories of non-standard text and have summed them up below. The prototype described later shows how I plan to use the modules below. For some of them the order won't matter, as we aim to make the whole structure independent of the input language.

Use of content specific terms

Such as RT (ReTweet) and hashtags in the case of Twitter. These have to be ignored, and ignoring them does not affect translation quality much. Their use at arbitrary positions, however, affects the machine translation systems further along the pipeline. Links are also present in most of the tweets.

Terms such as these can be listed exhaustively. After trial and error we were convinced that they should be processed as 'superblanks'.
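A minimal sketch of marking such terms as superblanks, assuming that a superblank is written by wrapping the span in square brackets. The pattern and the function name `add_superblanks` are illustrative assumptions, not the prototype's code, and escaping of brackets already present in the text is left out.

```python
import re

# Links, hashtags and the RT marker; the URL alternative comes first so that
# a '#' inside a link is not treated as a hashtag.
SPECIAL = re.compile(r"https?://\S+|#\w+|\bRT\b")

def add_superblanks(text):
    """Wrap every content-specific term in [ ] so it is passed over untranslated."""
    return SPECIAL.sub(lambda m: "[" + m.group(0) + "]", text)

print(add_superblanks("RT this is done #ThisIsDoneNow http://t.co/abc"))
```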

Use of Emoticons

People use emoticons very frequently in posts. These have to be ignored. Analysing the symbols present in the set of tweets, I found the most commonly occurring emoticons to be the following - http://web.iiit.ac.in/~akshay.minocha/emoticons_list_non_standard.txt
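A minimal sketch of ignoring emoticons with a regular expression built from a list. The short `EMOTICONS` list stands in for the full list linked above, and the function names are illustrative assumptions.

```python
import re

# Hypothetical sample; the real list would be loaded from the emoticon file.
EMOTICONS = [":)", ":-)", ";)", ":(", ":D", ":P", "<3"]

def build_pattern(emoticons):
    """Build one regex that matches any listed emoticon standing on its own."""
    # Longest first, so that a longer emoticon is preferred over its prefix.
    ordered = sorted(emoticons, key=len, reverse=True)
    body = "|".join(re.escape(e) for e in ordered)
    # Only match when surrounded by whitespace or the string boundaries.
    return re.compile(r"(?<!\S)(?:" + body + r")(?!\S)")

def strip_emoticons(text, pattern):
    """Remove emoticons and tidy up the leftover whitespace."""
    return re.sub(r"\s+", " ", pattern.sub(" ", text)).strip()

PATTERN = build_pattern(EMOTICONS)
print(strip_emoticons("no doubt about that ;) :D", PATTERN))
```

Requiring whitespace around the match keeps character sequences inside words or links untouched, at the cost of missing emoticons glued to a word.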

Use of Repetitive or Extended Words

This is the most commonly occurring issue in non-standard text. The task given by Francis earlier on the mailing list was to standardise the output according to a dictionary. At the moment this works for English using the wordlist generated from the English dictionary. Resources for other languages can be plugged in and it would work the same way.

Our final aim is to reduce these words in a similar fashion as described above and then match them against the dictionary. Note that abbreviations and acronyms should also be added to the dictionary externally. In many cases a repetition such as “uuuu” occurs, which should standardise to “you” via “uuuu” -> ”u” -> ”you”. Hence abbreviation processing should always come after this step, preferably at the end.

Punctuation repetition is not a problem for us, since Apertium handles ‘!!!’ in the same way as ‘!’.

Handling repetitive expressions ( with spaces )

Expressions such as -

“ ha ha ha ha” “ he he he he”

may produce errors in translation.

Translating “he he” with Apertium en-es will give us “él él”.

The solution to this is simple. After identifying a handful of such expressions we can make a list of them and trim the spaces in between, so that they no longer interfere with the translation.
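A minimal sketch of trimming the spaces inside such expressions, assuming a small hand-made list of repeated units. `LAUGHTER_UNITS` and `join_repeats` are illustrative names.

```python
import re

# Hypothetical list of units that tend to be repeated with spaces in between.
LAUGHTER_UNITS = ["ha", "he", "ho"]

def join_repeats(text, units=LAUGHTER_UNITS):
    """Collapse 'ha ha ha' into 'hahaha' when the same unit occurs at least twice in a row."""
    unit = "|".join(re.escape(u) for u in units)
    pattern = re.compile(r"\b(%s)(?:\s+\1)+\b" % unit, re.IGNORECASE)
    return pattern.sub(lambda m: re.sub(r"\s+", "", m.group(0)), text)

print(join_repeats("ha ha ha ha that was funny"))
```

A single "he" is left alone, so an ordinary pronoun is still translated; only a run of two or more identical units is joined.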

Handling Hashtags

#ThisIsDoneNow.

We see hashtags as expressions or terms which are trending at the moment. They may also be seen as identifying a particular topic on the web.

Earlier I wrote a heuristic script for hashtag disambiguation, but at the moment we are not using it and are processing hashtags as superblanks.

Things that I noticed while processing hashtags

- Cases in hashtags ->

- Words are separated by capitals

For example, #ForLife -> For Life

- Words are not separated by capitals

For example, #Fridayafterthenext -> Friday after the next

Solution -

- Hashtag disambiguation can easily be done in either of two ways: we need to break the hashtag into separate words, either by repeated lookups in the dictionary or by using FSTs. I think the latter will be much easier.

So words in hashtags should be represented as a ‘lone sentence’. Example, “Today comes monday again, #whereismyextrasunday” -> Today comes monday again. “Where is my extra Sunday”
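A minimal sketch of the two hashtag cases above, using the dictionary-lookup route rather than FSTs. The word list and the function names are illustrative assumptions, and this is not the heuristic script mentioned earlier.

```python
import re

# Hypothetical word list used for the lookup-based split.
WORDS = {"friday", "after", "the", "next", "where", "is", "my", "extra", "sunday"}

def segment(text, wordlist):
    """Split text into the fewest dictionary words, or return None if impossible."""
    lowered = text.lower()
    best = [None] * (len(lowered) + 1)  # best[i] holds the best split of lowered[:i]
    best[0] = []
    for end in range(1, len(lowered) + 1):
        for start in range(end):
            piece = lowered[start:end]
            if best[start] is not None and piece in wordlist:
                cand = best[start] + [piece]
                if best[end] is None or len(cand) < len(best[end]):
                    best[end] = cand
    return best[-1]

def split_hashtag(tag, wordlist):
    body = tag.lstrip("#")
    # Case 1: capitals mark the word boundaries.
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", body)
    if len(parts) > 1:
        return parts
    # Case 2: no capitals to rely on, fall back to dictionary lookups.
    return segment(body, wordlist) or [body]

print(split_hashtag("#ForLife", WORDS))
print(split_hashtag("#Fridayafterthenext", WORDS))
```

The lookup-based split returns lowercased words; restoring capitalisation would be a separate step.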

Abbreviation and Acronyms (CONVENTIONAL)

By matching the most frequently occurring non-dictionary words in the tweets, I came up with a list of a few abbreviations.

For English these are - http://web.iiit.ac.in/~akshay.minocha/abbreviations_english.txt The solution to improve translation when these occur is simple: when we know the full form, we can simply substitute it as the final step of the processing towards standard input.

Single-character abbreviations such as

r->are, u->you, 2->to

are also included. This list can be extended by analysing the data further.
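A minimal sketch of the substitution step, assuming the abbreviation list is loaded into a dictionary. Only the three pairs given above are used, and the fallback that collapses repeated characters before the lookup is an illustrative assumption.

```python
import re

# The three single-character examples from the text; a real table would be
# loaded from the abbreviation list.
ABBREVIATIONS = {"r": "are", "u": "you", "2": "to"}

def expand(token, table=ABBREVIATIONS):
    """Replace a known abbreviation by its full form, otherwise keep the token."""
    key = token.lower()
    if key in table:
        return table[key]
    # Try again with repeated characters collapsed, e.g. "uuuu" -> "u" -> "you".
    collapsed = re.sub(r"(.)\1+", r"\1", key)
    return table.get(collapsed, token)

print(" ".join(expand(t) for t in "r uuuu going 2 school soon".split()))
```

A plain lookup cannot tell when "2" is meant as a number, so such entries would need context before being substituted.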

Abbreviations (UNCONVENTIONAL)

Examples,

rehab -> rehabilitation

betw -> between

These are words simply written in a shortened form.

A suggested solution is implemented in the prototype. It uses the dictionary to predict the word that is most likely to fit grammatically.
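A minimal sketch of one way a dictionary could be used here, assuming that the shortened form is a prefix of the intended word and that the most frequent match is preferred. The frequency counts are made up for illustration, and this is not necessarily how the prototype decides.

```python
# Hypothetical word frequencies; a real table would come from a reference corpus.
FREQ = {"rehabilitation": 120, "rehabilitate": 40, "between": 900, "betray": 30}

def expand_prefix(abbrev, freq):
    """Return the most frequent dictionary word that starts with the abbreviation."""
    if abbrev in freq:
        return abbrev
    matches = [w for w in freq if w.startswith(abbrev) and len(w) > len(abbrev)]
    return max(matches, key=freq.get) if matches else abbrev

print(expand_prefix("rehab", FREQ))
print(expand_prefix("betw", FREQ))
```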

Apostrophe correction

dont | do’nt | dont’ -> don’t

shell -> she’ll | shell (disambiguation)

A module has been built to correct apostrophes; it uses the most commonly used apostrophe words in English (refer [http://web.iiit.ac.in/~akshay.minocha/apostrophe_list.txt] )
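A minimal sketch of the idea, assuming the apostrophe list is indexed by its apostrophe-free spelling. The small lists and the function names are illustrative assumptions rather than the module itself.

```python
# Hypothetical samples; the real lists would come from the apostrophe list
# and from the dictionary.
APOSTROPHE_WORDS = ["don't", "she'll", "can't", "i'm"]
DICTIONARY = {"shell", "do", "not", "she", "will"}

def build_index(words):
    """Map each word with its apostrophe removed to the correct form."""
    return {w.replace("'", ""): w for w in words}

def correct(token, index, dictionary):
    """Return the possible readings of a token, corrected form last."""
    bare = token.lower().replace("'", "").replace("\u2019", "")
    fixed = index.get(bare)
    if fixed is None:
        return [token]
    # A token without any apostrophe that is itself a word stays ambiguous.
    if bare == token.lower() and bare in dictionary:
        return [token, fixed]
    return [fixed]

INDEX = build_index(APOSTROPHE_WORDS)
for tok in ["dont", "do\u2019nt", "dont'", "shell"]:
    print(tok, "->", correct(tok, INDEX, DICTIONARY))
```

Ambiguous cases such as "shell" are returned with both readings, leaving the choice to a later disambiguation step.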

Diacritics restoration

Since we want to make our toolkit language independent, our aim is to handle all the different kinds of problems encountered. For languages other than English, diacritic errors are the most frequently occurring errors.

Example,

¿Qué le pasó a mi discográfia de RAMMSTEIN? #MeCagoEnLaPuta

discográfia -> discografía (´ in the wrong place)

For diacritic restoration, charlifter ( http://code.google.com/p/charlifter-l10n/ ) can be included in the pipeline to restore the text to its correct form with respect to diacritics.
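A minimal sketch of the underlying idea, using a plain word-list lookup as a stand-in and not charlifter itself. The word list and the function names are illustrative assumptions.

```python
import unicodedata

# Hypothetical Spanish word list with correct diacritics.
WORDS = {"discografía", "qué", "pasó", "mi", "de"}

def strip_marks(word):
    """Remove all combining marks, e.g. 'discografía' -> 'discografia'."""
    decomposed = unicodedata.normalize("NFD", word)
    return "".join(c for c in decomposed if not unicodedata.combining(c))

def build_index(wordlist):
    """Group dictionary words by their mark-free spelling."""
    index = {}
    for word in wordlist:
        index.setdefault(strip_marks(word.lower()), []).append(word)
    return index

def restore(token, index, wordlist):
    """Replace a token by the dictionary word with the same letters, if unambiguous."""
    if token.lower() in wordlist:
        return token
    options = index.get(strip_marks(token.lower()), [])
    return options[0] if len(options) == 1 else token

INDEX = build_index(WORDS)
print(restore("discográfia", INDEX, WORDS))
```

When several dictionary words share the same mark-free spelling the token is left unchanged, since choosing between them needs context.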

Spelling mistakes[edit]

These include spelling mistakes on purpose as well as the errors that arise due to vowel dropping. Levenshtein distance between two strings is defined as the minimum number of edits needed to transform one string into another, with the allowable edit operations being insertion, deletion, or substitution of a single character. The above algorithm works decently, but this was a bit non-accurate as it did not consider the "transposition" action which is defined in the following link - ( http://norvig.com/spell-correct.html). Peter Norvig in the spell correct link, shows us how easily we can build a spelling correction script by using a large standard corpora for a particular language.

With either of the above approaches, building a spelling corrector for a language becomes easy, and it addresses both kinds of error.

However, the results were not convincing when we used this spell corrector.

The results are in the sheet named "Test" ( https://docs.google.com/spreadsheet/ccc?key=0ApJ82JmDw6DHdDBad1ZXay1LZDhQckpxcXZmQTl1VVE&usp=drive_web#gid=4 ). Some further experiments and comparisons are recorded in the same sheet.

I plan to include 'hfst-ospell' to handle spelling correction for the supported languages, although it was decided to down-weight the contribution of this module, as with the earlier method.

Spacing and hyphen variation & optional hyphen

We propose a proper mechanism for resolving these variations. One way is to create a reference corpus, either the one Apertium is currently using or one built quickly with the technique described in my paper: Feed Corpus: An Ever Growing Up-To-Date Corpus, Minocha, Akshay and Reddy, Siva and Kilgarriff, Adam, ACL SIGWAC, 2013.

To extend support to other languages for which no corpus is available, we can generate a corpus of a few million words to help us build a language model.

With this, we can use a trigram-based model (or a higher-order n-gram model), or use POS information with a more efficient HMM, trained on the reference corpus, to predict the most probable word.
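The n-gram idea can be sketched as follows. This is a toy trigram model with add-one smoothing that picks the most probable spacing or hyphen variant of a sentence. The function names, the smoothing choice and the tiny corpus in the usage note are assumptions made for illustration; a real reference corpus would be far larger.

```python
import math
from collections import Counter

def train_ngrams(sentences, n=3):
    """Count n-grams and their (n-1)-gram contexts over tokenised sentences."""
    ngrams, contexts, vocab = Counter(), Counter(), set()
    for tokens in sentences:
        padded = ["<s>"] * (n - 1) + list(tokens) + ["</s>"]
        vocab.update(padded)
        for i in range(len(padded) - n + 1):
            gram = tuple(padded[i:i + n])
            ngrams[gram] += 1
            contexts[gram[:-1]] += 1
    return ngrams, contexts, len(vocab)

def log_prob(tokens, model, n=3):
    """Add-one smoothed log-probability of a token sequence."""
    ngrams, contexts, vocab_size = model
    padded = ["<s>"] * (n - 1) + list(tokens) + ["</s>"]
    total = 0.0
    for i in range(len(padded) - n + 1):
        gram = tuple(padded[i:i + n])
        total += math.log((ngrams[gram] + 1) / (contexts[gram[:-1]] + vocab_size))
    return total

def best_variant(variants, model, n=3):
    """Return the variant (a space-separated string) the model finds most probable."""
    return max(variants, key=lambda v: log_prob(v.split(), model, n))
```

For example, after training on a few sentences that contain "e-mail", `best_variant(["send me an email today", "send me an e-mail today", "send me an e mail today"], model)` returns the hyphenated form.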

Once the standard text has been produced, the only way to verify our level of success is to compare our system against other available machine translation systems such as Moses, training them on different sets and checking our accuracy.

Handling Links

This is important from the perspective of internal handling within the Apertium translator.

Hyperlinks should be treated as superblanks rather than being translated.

At the moment, Apertium needs to take this into account not only for non-standard input but also for normal standard input.

Translating a link changes what it points to. For example, consider an en->es translation of the following text on Apertium:

http://en.wikipedia.org/wiki/Red_Bull -> http://en.wikipedia.org/wiki/Rojo_Bull

The above example would re-direct us to an undesirable page.
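A minimal sketch of protecting links, assuming the square-bracket superblank notation of the Apertium stream format. The regular expression and the function name `protect_urls` are illustrative choices, not the prototype's code, and the pattern covers only `http(s)://` and `www.` links.

```python
import re

# A URL starts with a scheme or "www." and must not end in sentence punctuation.
URL_RE = re.compile(r"(?:https?://|www\.)\S*[^\s.,;:!?)]")

def protect_urls(text: str) -> str:
    """Wrap every URL in [ ] so that it is passed through untranslated."""
    return URL_RE.sub(lambda m: "[" + m.group(0) + "]", text)
```

Applied to the example above, `protect_urls("Read http://en.wikipedia.org/wiki/Red_Bull now")` gives `Read [http://en.wikipedia.org/wiki/Red_Bull] now`, so "Red" is never seen by the translator.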

Prototype of the Toolkit[edit]

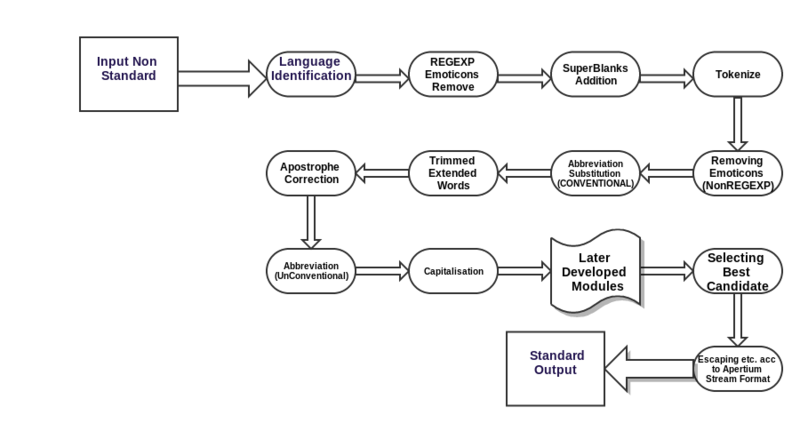

Too see the processing of the Prototype I created Please check the Image Below

The functional Prototype involving the whole processing, and right now correctly working for English is available at the github link -> https://github.com/akshayminocha5/apertium-non-standard-input-task

Below is the description of the working of the Modules -

Step.1 Language Identification

We first identify the source language for further processing. Right now the prototype supports only English, since those were the resources available when I built it. To extend the support to other languages, we simply add resources in the specified format.

We can use langid.py, which supports language identification for around 97 different languages, including all 32 languages used in Apertium (Lui, Marco, and Timothy Baldwin. "langid.py: An Off-the-shelf Language Identification Tool."). I have implemented this in the prototype.

Step.2 Removing Emoticons ( as Regular Expressions )

This step handles symbolic emoticons such as :) and runs of repeated emoticons such as :):):):). It uses a basic regular expression that looks for an emoticon in three parts: eyes, nose and mouth.
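The eyes/nose/mouth idea can be sketched like this. The character sets below are assumptions chosen for illustration and are much smaller than what real Twitter data needs; the prototype's actual pattern may differ.

```python
import re

EMOTICON_RE = re.compile(
    r"""
    (?<!\w)                     # not glued to the end of a word (skips "http://")
    (?:
        [:;=]                   # eyes
        [-o^']?                 # optional nose
        [()\[\]DPpOo/\\|*]      # mouth
    )+                          # one emoticon or a run of them, e.g. :):):)
    (?!\w)                      # not followed by a word character
    """,
    re.VERBOSE,
)

def remove_emoticons(text: str) -> str:
    """Drop regex-matchable emoticons and tidy up the leftover spaces."""
    return " ".join(EMOTICON_RE.sub(" ", text).split())
```

The look-behind keeps the `:/` in `http://` and the `:` in times such as `10:30` from being treated as emoticons.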

Step.3 Superblank addition for Hashtag and other content specific terms/symbols

Hashtags, other such symbols and '/' are put in superblanks.

We put '/' in superblanks because it is used as a delimiter in later stages.
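A small sketch of this step, assuming square brackets mark superblanks and that "other symbols" means @usernames; both are illustrative assumptions. Text that is already inside a superblank is left untouched, so a '/' inside a protected link is not wrapped a second time.

```python
import re

# Group 1: an existing superblank; group 2: a hashtag, @username or '/'.
SPECIAL_RE = re.compile(r"(\[[^\]]*\])|([#@]\w+|/)")

def add_superblanks(text: str) -> str:
    def wrap(match):
        if match.group(1):                 # already protected: keep as is
            return match.group(1)
        return "[" + match.group(2) + "]"  # protect hashtag, username or '/'
    return SPECIAL_RE.sub(wrap, text)
```

For instance, `add_superblanks("and/or #GSoC")` yields `and[/]or [#GSoC]`.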

Step.4 Tokenize

The text is tokenized so that token-level information can be used in the following steps. A basic tokenizer is implemented for processing the non-standard data in the next steps.

Step.5 Removing Emoticons ( as per-token )

A few emoticons, listed in http://web.iiit.ac.in/~akshay.minocha/emoticons_list_non_standard.txt, were extracted from the Twitter database and are not reducible to regular expressions, so we propose to handle them on a per-token basis.

Emoticons are mainly language independent, so these steps stay largely the same across language pairs.

Step.6 Substituting Abbreviation (CONVENTIONAL) list.

At the moment, I replace the most frequently occurring abbreviations for which we have information. This resource is used as a substitution list.

Since English resources are widely available online, this substitution list was easy to build.

I discussed such a resource for other languages with Francis. I suggest that we make a list of a few abbreviations and then automatically collect high-frequency words that appear in non-standard texts but are not in the language’s dictionary. We can ask people in the community to help us decide whether they really are abbreviations. The number of words each person would have to check is very small, so this will be an easy and productive process.

Step.7 Handling Extended Words

From this step onwards, I maintain a list that holds, for each token, the candidates produced by the following steps, in the form

^original/candidate1/candidate2/…$

Now we can safely normalise the extended words. Words in which the same character is repeated three or more times in succession are treated as extended words. The solution for this module is very similar to the exercise pointed out earlier in 2.2.
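One possible way to generate candidates for extended words is sketched below, under the assumption that every run of three or more identical characters should shrink to either one or two characters and that a wordlist decides which results are real words. The helper names are hypothetical.

```python
import itertools
import re

RUN_RE = re.compile(r"(.)\1{2,}")   # the same character 3 or more times in a row

def extended_word_candidates(word, wordlist):
    """Shrink each long run to 1 or 2 characters; keep results found in wordlist."""
    runs = list(RUN_RE.finditer(word))
    if not runs:
        return []
    candidates = []
    for lengths in itertools.product((1, 2), repeat=len(runs)):
        pieces, last = [], 0
        for run, length in zip(runs, lengths):
            pieces.append(word[last:run.start()])
            pieces.append(run.group(1) * length)
            last = run.end()
        pieces.append(word[last:])
        candidate = "".join(pieces)
        if candidate in wordlist and candidate not in candidates:
            candidates.append(candidate)
    return candidates

def to_unit(word, candidates):
    """Format a token and its candidates as ^original/candidate1/candidate2$."""
    return "^" + "/".join([word] + candidates) + "$"
```

The number of combinations doubles with every run, which is acceptable here because words rarely contain more than two or three extended runs.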

Step.8 Apostrophe correction

If a word exists both in the wordlist and in the apostrophe error list, further processing is needed to decide which form to use. Classic disambiguation examples are

she’ll vs. shell | hell vs. he’ll

The results of this module will be more accurate if we include POS information as well, so the ideal approach is either an HMM or CG-style rules.

In the prototype, I have used n-gram (trigram and bigram) information to disambiguate the usage.

If a word does not exist in the wordlist but only in the apostrophe error list, it is replaced using the mapping.

For example,

input -> do’nt

Step 1 -> dont

Step 2 -> dont not in wordlist but dont in apostrophe error list

Step 3 -> replace from mapping in apostrophe_map_error

dont -> don’t
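The decision logic above can be sketched as follows. The function name and the shape of `apostrophe_map` (apostrophe-free form mapped to the correct form) are assumptions for illustration; the ambiguous case simply returns both forms and leaves the choice to the n-gram disambiguation.

```python
def apostrophe_candidates(token, wordlist, apostrophe_map):
    """Return the possible corrections for a token with a missing or misplaced apostrophe."""
    # Step 1: drop straight and curly apostrophes ("do'nt" -> "dont").
    stripped = token.replace("'", "").replace("\u2019", "").lower()
    if stripped not in apostrophe_map:
        return [token]                    # nothing known about this word
    corrected = apostrophe_map[stripped]
    if stripped in wordlist:
        # Ambiguous, e.g. shell / she'll: keep both for later disambiguation.
        return [stripped, corrected]
    # Only in the error list: replace using the mapping.
    return [corrected]
```

Lower-casing is harmless here because capitalisation is corrected in a later step.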

Step.9 Solving issue for Abbreviation ( NON-CONVENTIONAL )

People very often refer to long words in an abbreviated form. These words are incomplete, but each is a prefix of the word that was intended.

rehab -> rehabilitation

Steps

- Build a trie for the language wordlist.

- If word not in wordlist, check trie for suggestions

- Use n-gram to come up with the best suggestion.

Later, the module can be improved by using an HMM and POS information.
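The steps above can be sketched with a small dictionary-based trie. To keep the example short, the final ranking uses plain word frequency as a stand-in for the n-gram scoring; that substitution, the class layout and the function names are assumptions rather than the prototype's code.

```python
END = ""  # sentinel key marking the end of a word (never a real character)

class Trie:
    def __init__(self, words=()):
        self.root = {}
        for word in words:
            self.insert(word)

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.setdefault(ch, {})
        node[END] = True

    def completions(self, prefix):
        """All stored words that start with prefix, in sorted order."""
        node = self.root
        for ch in prefix:
            if ch not in node:
                return []
            node = node[ch]
        found, stack = [], [(node, prefix)]
        while stack:
            current, text = stack.pop()
            for key, child in current.items():
                if key == END:
                    found.append(text)
                else:
                    stack.append((child, text + key))
        return sorted(found)

def expand(word, trie, wordlist, freq):
    """Expand an unknown prefix to its most frequent completion."""
    if word in wordlist:
        return word
    options = trie.completions(word)
    if not options:
        return word
    return max(options, key=lambda w: freq.get(w, 0))
```

With a wordlist containing "rehabilitation" and "rehabilitate", `expand("rehab", ...)` returns whichever of the two is more frequent in the supplied counts.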

Step.10 Checking words for Capitalisation

In this module, a simple heuristic is implemented in which the words and the sentence-ending punctuation (! . ?) are used to correct the capitalisation of the tokens.
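A minimal sketch of such a heuristic, assuming the input is a list of tokens in which sentence-ending punctuation appears as separate tokens. It only capitalises the first word of each sentence and leaves every other token unchanged; the prototype's heuristic may do more.

```python
SENTENCE_END = {".", "!", "?"}

def fix_capitalisation(tokens):
    """Capitalise the first alphabetic token of every sentence."""
    fixed, at_start = [], True
    for token in tokens:
        if at_start and token[:1].isalpha():
            token = token[0].upper() + token[1:]
            at_start = False
        elif token in SENTENCE_END:
            at_start = True           # the next word opens a new sentence
        fixed.append(token)
    return fixed
```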

Step.11 Selecting the best candidate

At the moment, the best candidate is the last word in the suggestion list of each input token.

We use these candidates to reconstruct the sentence, and then restore the superblanks that were set prior to processing.
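As a sketch of the current selection rule, assuming each token is stored as a string of the form ^original/candidate1/candidate2$ and that any literal '/' has already been moved into a superblank (Step 3). The function names are hypothetical.

```python
def best_candidate(unit: str) -> str:
    """Pick the last suggestion from a unit such as ^dont/don't$."""
    if not (unit.startswith("^") and unit.endswith("$")):
        raise ValueError("not a candidate unit: %r" % unit)
    return unit[1:-1].split("/")[-1]

def rebuild(units):
    """Join the selected candidates back into a sentence."""
    return " ".join(best_candidate(unit) for unit in units)
```

A unit without suggestions, such as `^know$`, simply yields the original token.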

Step.12 Addition of escape sequence

Escape sequences are added in accordance with the Apertium stream format.
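A minimal sketch of the escaping step. The backslash is the escape character of the stream format, but the exact set of reserved characters used below is an assumption and should be checked against the Apertium stream format documentation before use.

```python
# Assumed set of reserved characters; verify against the stream-format docs.
RESERVED = "^$/<>{}[]\\@"

def escape_stream(text: str, reserved: str = RESERVED) -> str:
    """Prefix every reserved character with a backslash."""
    return "".join("\\" + ch if ch in reserved else ch for ch in text)
```

This should be applied only to ordinary text, not to the brackets that delimit superblanks, since those brackets are meant to stay unescaped.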

Literature Review

There are many sites [1], [2], [3] on the internet that offer SMS English to English translation services. However the technology behind these sites is simple and uses straight dictionary substitution, with no language model or any other approach to help them disambiguate between possible word substitutions. [4]

- There have been a few attempts to improve the machine translation task for non-standard data. One of the preliminary studies is [5], which compares the linguistic characteristics of Europarl data and Twitter data. The suggested methodology relies heavily on in-domain data to improve quality in the later steps. The evaluation shows a 0.57% BLEU improvement on a set of 600 sentences. The major suggestion from this research is that hashtags, @usernames and URLs should not be treated like regular words. This was the mistake we were making earlier, and it did not help the translation task. They also put XML markup in the source text so that it works like superblanks.

Issues in this case:

- It requires building a bilingual resource from in-domain data.

- Other sources of non-standard data don’t seem to get a significant improvement.

- The BLEU score improvement is marginal.

- This [6] is a standard piece of research on non-standard words (NSWs). It suggests that non-standard words are more ambiguous than ordinary words in terms of pronunciation and interpretation. In many applications, it is desirable to “normalize” text by replacing the NSWs with the contextually appropriate ordinary word or sequence of words. The authors categorize numbers, abbreviations, other markup, URLs, capitalisation, etc. A very interesting tree-based abbreviation model is proposed, which can give us ideas for improving our current abbreviation model or serve as an addition to it. It covers vowel dropping, shortened words and first-syllable usage.

The issue with most of this research is that it is limited to a particular language, in this case English. They have standardized the most common points of English, leaving scope for a lot of improvement. In our processing, we are not currently considering any specific markup techniques within the pipeline, but this paper shows some promising work on this, which can be useful for developers and other users who want to analyse the data in more detail. Such a convention can be added easily after conducting experiments and seeing results.

- This [7] is a completely different approach, in which the authors try to solve the problem with character-level machine translation. The issue here is accuracy: they used the Jazzy spell checker [8] as a baseline and then compared it with previous such research. A further issue is the large amount of resources used up in training and tuning the MT system; such a system would also be complicated to run together with Apertium.

- This research [9] is more idea-centric: the authors say that MT research is far from complete and that we face many challenges. With our project we aim to target these problems specifically. Translation of informal text and translation of low-resource language pairs are the ones that concern Apertium and us the most.

- This research [10] identifies the difficulties that web forum data and other informal genres pose not only for the translation community but also for people working on semantic role labelling, and probably for many more who rely on data analytics.

They report that systems tuned on MEANT performed significantly better than systems tuned on BLEU and TER. Our module would help with the errors identified in their error analysis: because of a significant rise in the number of known words and in the quality of grammar and word sense, the semantic parser used there would perform better.

- This research [11] takes an approach very similar to ours, but it focuses mainly on remodelling and expanding abbreviated words by implementing a character-based translation model.

- Inspired by this research [12], in which Srinivas Bangalore et al. suggest a method of bootstrapping from data on chat forums and other informal sources, we can build up abbreviation resources for a particular language. As I suggested before, the way to proceed with this task in Apertium is to first take a short list of abbreviations and then use it to suggest which other frequent words in the data might also count as abbreviations. This resource can be verified and then included when building the system for the particular language.

Outline of Research Paper

- We make a test corpus of ~3000 words of non-standard text: IRC/Facebook/Twitter.

- This is translated to another language

- We evaluate the translation quality of:

- Apertium

- Moses (Europarl)

- Apertium + program

- Moses (Europarl) + program

We can repeat the task above for the language-support built by us towards the end of the project and then share our results with the other people in the community by publishing a research paper out of the whole work.

WorkPlan

See GSoC 2014 Timeline for the complete timeline. My work plan against that timeline is given below.

| week | dates | goals | eval | accomplishments | notes |
|---|---|---|---|---|---|
| post-application period | 22 March - 20 April | Work on English, Irish and Spanish. This along with discussion from mentors will give me an idea of the non-standard features in full, not limiting to just English. Ask for a language priority list | | | |
| community bonding period | 21 April - 19 May | where we have a dearth of resource and language models. | | | |
| 1 | 19 - 24 May | | | | |
| 2 | 25 - 31 May | | | | |
| 3 | 1 - 7 June | | | | |
| 4 | 8 - 14 June | | | | |
| Deliverable #1 | 15 June | Compile the Weekly codes for 7 languages and Produce the deliverable. | | | |
| 5 | 15 - 21 June | | | | |
| midterm eval | 23 - 27 June | | | | |
| 6 | 29 June - 5 July | | | | |
| 7 | 6 - 12 July | | | | |
| 8 | 13 - 19 July | | | | |
| Deliverable #2 | 19 July | Compile the Weekly codes for about 7 languages and Produce the deliverable. | | | |
| 9 | 20 - 26 July | | | | |
| 10 | 27 July - 2 August | | | | |
| 11 | 3 - 10 August | Working on binaries to be implemented efficiently on Apertium | | | |
| 12 | 11 August - 18 August | Integrating and testing support for the set of deliverables, along with information from last week. | | | |
| pencils-down week, final evaluation | 18 August - 22 August | | | | |

References

1. http://transl8it.com/

2. http://www.lingo2word.com/translate.php

3. http://www.dtxtrapp.com/

4. Raghunathan, Karthik, and Stefan Krawczyk. "CS224N: Investigating SMS text normalization using statistical machine translation." Technical Report, 2009.

5. Jehl, Laura Elisabeth. "Machine translation for twitter." (2010).

6. Sproat, Richard, et al. "Normalization of non-standard words." Computer Speech & Language 15.3 (2001): 287-333.

7. Pennell, Deana, and Yang Liu. "A Character-Level Machine Translation Approach for Normalization of SMS Abbreviations." IJCNLP. 2011.

8. Idzelis, Mindaugas. "Jazzy: The Java open source spell checker." 2005.

9. Lopez, Adam, and Matt Post. "Beyond bitext: Five open problems in machine translation."

10. Lo, Chi-kiu, and Dekai Wu. "Can informal genres be better translated by tuning on automatic semantic metrics." Proceedings of the 14th Machine Translation Summit (MTSummit-XIV) (2013).

11. Pennell, Deana L., and Yang Liu. "Normalization of informal text." Computer Speech & Language 28.1 (2014): 256-277.

12. Bangalore, S., V. Murdock, and G. Riccardi. "Bootstrapping bilingual data using consensus translation for a multilingual instant messaging system." 19th International Conference on Computational Linguistics, Taipei, Taiwan (2002), pp. 1–7.