Difference between revisions of "User:Asfrent/Application"

| (23 intermediate revisions by 2 users not shown) | |||

| Line 4: | Line 4: | ||

translation pipeline at the moment). |

translation pipeline at the moment). |

||

== Application == |

|||

=== General information === |

|||

== High level optimizations == |

|||

'''Name:''' Andrei Sfrent |

|||

'''E-mail address:''' gsoc a asfrent d net |

|||

'''Other information that may be useful to contact you:''' |

|||

IRC - asfrent on #apertium |

|||

'''Why is it that you are interested in the Apertium project?''' |

|||

I find the projects of this organisation interesting and much related to some of the theory that I |

|||

studied in school (context free grammars, state machines, compilers). I like getting involved in projects |

|||

like this one, where I can learn new things while improving the speed and reliability by applying my |

|||

experience (so a good starting point for me would be VM-for-transfer). Also, I met on IRC some really |

|||

cool guys, so I think this project has a great team! |

|||

'''Which of the published tasks are you interested in? What do you plan to do?''' |

|||

VM-for-transfer project, along with any other optimisation tasks. I am planning to make VM-for-transfer |

|||

at least a bit better than the XML tree-walking code and then focus on other bottlenecks in the project. |

|||

=== About me (short summary) === |

|||

My name is Andrei Sfrent, I am studying for a Master's Degree in Machine Learning at the Imperial College |

|||

and I am interested in working on the VM-for-transfer Apertium project in GSoC this summer. |

|||

I am proficient in C++ and I also have a good background in the software optimisation area (last year I |

|||

participated in an optimisation contest organized by Intel and ended on the first place in Europe / forth |

|||

place worldwide [http://software.intel.com/fr-fr/articles/contest-winners-are-announced 1] |

|||

[http://intel-software-academic-program.com/tmp/certificates/4.pdf 2]). |

|||

I took a compilers course one year ago, when I had to write a compiler that translated code to [http://llvm.org/ LLVM] (see an example |

|||

[http://asfrent.net/viewgit/?a=viewblob&p=SQ2Compiler&h=3e1ef8fcf3645849e5066c08f3a1a3ffdc18ce31&hb=HEAD&f=examples/qsort/qsort.sq2 here] |

|||

which generated |

|||

[http://asfrent.net/viewgit/?a=viewblob&p=SQ2Compiler&h=252ff92991fb53d337fdbd8d315c9babbebb8893&hb=HEAD&f=examples/qsort/qsort.lir this LLVM code]). |

|||

This course took me through the basics of grammars, parsing, abstract syntax trees, code generation and |

|||

optimisation. You can browse the source code [http://asfrent.net/viewgit/?a=tree&p=SQ2Compiler&hb=HEAD&f= here]. |

|||

I previously had two internships with Google and I had the chance to work with Map Reduce / Knowledge |

|||

Graph on YouTube data, enhancing my engineering and design skills. |

|||

You can also find out more about me from my [http://asfrent.net/CV.pdf resume]. |

|||

=== About the project === |

|||

==== My contribution ==== |

|||

First of all, I think performance is key in a lot of software projects, including this one. Nobody |

|||

likes to wait for things to happen. I also think that my contribution can make parts of the code |

|||

significantly faster and I also enjoy doing so. I also believe that Apertium is great for helping people |

|||

translate documents, software and maybe even books. Furthermore, by also targeting languages that receive |

|||

less attention from other translation projects it has a direct impact on the people that speak those |

|||

languages. In this context, efficiency matters - building real time translation systems on top of Apertium |

|||

or translating large amounts of text, like books. |

|||

==== Roadmap and deliverables ==== |

|||

This is my estimated schedule. For details, please see |

|||

[http://wiki.apertium.org/wiki/User:Asfrent/Application#High_level_optimisations High_level_optimisations] and |

|||

[http://wiki.apertium.org/wiki/User:Asfrent/Application#Low_level_optimisations Low_level_optimisations] below. |

|||

* '''weeks 1-2''' - prepare VM-for-transfer and Apertium for optimisation. |

|||

** build a small framework for creating tests from text files using as reference the XML treewalking code output; fthe framework should also allow for easy testing / profiling with valgrind. |

|||

** improve the test suite (multiple languages, small texts, large texts). |

|||

** fix failing tests for '''apertium-xfervm'''. |

|||

** rewrite parts of the code using C++11 so we get a more compact code, but equivalent with the original. This way, I get to see more code and get a better understanding of the relations between different parts. [already tried in the proof of concept] |

|||

** ''extra: make the project to compile with clang to LLVM and research LLVM code.'' |

|||

** ''extra: improve documentation.'' |

|||

'''Deliverables (weeks 1-2)''': testing framework for VM-for-transfer, a test suite of 20 tests, tests passing. |

|||

* '''weeks 3-4''' - work on the main algorithms and data structures that are present in the transfer code. |

|||

** transform the trie that is used for matching strings in the interpreter into a [http://en.wikipedia.org/wiki/Radix_tree Radix Tree] (a compressed version of the trie). |

|||

** write a memory allocator for the Radix tree in order to improve data locality (more cache hits). |

|||

** ''extra: refactor the code in order to eliminate as much overhead as possible that comes from copying structures around (such as vectors, strings). [already tried in the proof of concept].'' |

|||

* '''weeks 5-6''' - interpreter analysis and optimisations. |

|||

** analyse the interpreter design. |

|||

** fix stack datatype issues (current stack only holds strings and string-to-int is really slow, whenever an instruction actually needs to pop integers) |

|||

** analyse algorithms used in slow instructions (such as clip) and optimise the code in each one of them. This may involve different matching algorithms or preprocessing. |

|||

** decide which instructions should be translated directly to LLVM code (push, pop, jnz, jz, call, test) and which will stay as C++ code. |

|||

** ''extra: improve documentation on each instruction.'' |

|||

'''Deliverables (weeks 3-6)''': VM-for-transfer is as fast as the XML tree walking transfer code. |

|||

* '''weeks 7-8''' - first phase of porting the generated code to LLVM. |

|||

** the idea is to get rid of the main loop of the interpreter and use [http://llvm.org/docs/CommandGuide/lli.html lli] to run the code. Work will be done in a separate branch. |

|||

** design an API for the compiler so it will be easy to add new high level instructions, as well as pure LLVM ones. |

|||

** start to implement translation to LLVM in the compiler. All [http://wiki.apertium.org/wiki/VM_for_transfer#Instruction_Set instructions] will be translated to LLVM calls to C++ code. |

|||

** profile the prototype and decide whether it makes sense to move on. I will plan this as a "fail fast" approach - the idea is to find out as soon as possible if it's worth the effort. |

|||

* '''weeks 9-12''' - make the compiler translate all the instructions to LLVM. |

|||

** implement, test and debug on the new compiler. |

|||

** redesign the interpreter as a robust library of high level Apertium instructions. |

|||

** make the two work together. |

|||

** ''extra: profile other parts of the Apertium, find easy to fix bottlenecks and fix them. Make Apertium FAST.'' |

|||

'''Deliverables (weeks 7-12)''': LLVM compiler for Apertium transfer, VM-transfer is 2x faster than XML based code. |

|||

* Project completed. :-) |

|||

* Find something else to optimise and bring faster translation to the world! |

|||

== High level optimisations == |

|||

=== Algorithms and data structures === |

=== Algorithms and data structures === |

||

| Line 18: | Line 123: | ||

=== Interpreter redesign === |

=== Interpreter redesign === |

||

A profile of a slightly |

A profile of a slightly optimised code shows that a lot of time is spent in the interpreter code in order |

||

to convert strings to integers. This is because the interpreter stack has been designed to work with a |

to convert strings to integers. This is because the interpreter stack has been designed to work with a |

||

a single data type (strings), so every time a VM instruction needs integral data types we need to convert |

a single data type (strings), so every time a VM instruction needs integral data types we need to convert |

||

| Line 24: | Line 129: | ||

=== Instruction set === |

=== Instruction set === |

||

There are some instructions that take much longer time than others. By |

There are some instructions that take much longer time than others. By analysing the instruction set we |

||

should be able to further fragment these time consuming instructions into smaller ones that will perform |

should be able to further fragment these time consuming instructions into smaller ones that will perform |

||

faster and could be easier to |

faster and could be easier to optimise. |

||

== |

=== Port code to LLVM === |

||

Redesign the compiler to produce [http://llvm.org/ LLVM] code which would enable us to take advantage of |

|||

the optimisations built into LLVM. Most of the stack / branching / logical / math instructions will be translated |

|||

directly to LLVM instructions, while complex instructions would be translated to calls to C++ code (compiled |

|||

to LLVM as well). |

|||

By compiling to LLVM we get rid of most of the VM implementation (the parts that handle stack / branching / |

|||

logical / math operations), we gain a better, simplified architecture and speed. Hopefully, a lot of speed. |

|||

This can be done in conjunction with the other optimisations, which would then only apply to the complex |

|||

instructions of the transfer VM (such as '''clip''') and working with the matching data structures. |

|||

== Low level optimisations == |

|||

=== Memory allocator === |

=== Memory allocator === |

||

| Line 52: | Line 168: | ||

references). |

references). |

||

== Proof of concept == |

== Proof of concept (coding challenge and one step further) == |

||

The tools I used to produce the proof of concept were: |

The tools I used to produce the proof of concept were: |

||

| Line 79: | Line 195: | ||

Let's take a look at the profile (remember, times are measured on the '''-10000''' file, while the profile is done |

Let's take a look at the profile (remember, times are measured on the '''-10000''' file, while the profile is done |

||

on the '''-1000''' input file): |

on the '''-1000''' input file): |

||

[[File:Xfervm-master-profile.png]] |

|||

The odd thing here is the '''wtolower''' static method that seems to consume a lot of time. So most of the time spent |

|||

in selecting a matching rule is actually spent making strings lowercase. This was because a locale variable that was |

|||

always initialized in the respective function. In this [https://github.com/ggm/vm-for-transfer-cpp/tree/vm_wstring_utils-opt branch] |

|||

I fixed the '''wtolower''' method. Now the code runs approx 60% faster: |

|||

andrei@andrei-xps bin $ ./_tm |

|||

real 0m25.168s |

|||

user 0m25.078s |

|||

sys 0m0.072s |

|||

We can see that we got a huge improvement just by fixing one function. I ran the profiler again, looking for |

|||

the next bottleneck: |

|||

[[File:Xfervm-after-wtolower.png]] |

|||

As we can see, the '''SystemTrie::getPatternNodes''' method is still quite slow. Since the method returns all nodes |

|||

that correspond to a certain pattern starting from a node, we could cache the results at node level. Another thing |

|||

that makes this method work slower than it should is vector copying. I decided to do the caching and restructure |

|||

the code a bit in order to use constant references instead of copying things around (see this |

|||

[https://github.com/ggm/vm-for-transfer-cpp/commit/d3ee31d1ec1850582f592f1cf16d3e9a01df871c commit]). The new time |

|||

we get is, again, smaller: |

|||

andrei@andrei-xps bin $ ./_tm |

|||

real 0m15.677s |

|||

user 0m15.589s |

|||

sys 0m0.080s |

|||

The next step I tried is to do some small, low level optimisations (like improving the code for branch prediction |

|||

hits, see [https://github.com/ggm/vm-for-transfer-cpp/commit/4ae8b270bb0bbe807712dd7aaea0ef84c5a55f28 here]). The |

|||

result is almost unnoticeable, but these kind of optimisations could also improve the efficiency of the code: |

|||

andrei@andrei-xps bin $ ./_tm |

|||

real 0m15.447s |

|||

user 0m15.377s |

|||

sys 0m0.064s |

|||

This is the first time when we can look at the profiler report and see that one of the slowest parts is the actual |

|||

execution of the VM instructions. This report also gives us a hint on the interpreter design. It seems that a lot |

|||

of time is being spent in converting strings to integers and vice versa. By looking at the code I discovered that |

|||

the interpreter stack can only work with strings, so each time a integer is pushed or popped we need to apply |

|||

these expensive conversions. |

|||

[[File:Xfervm-after-caching-and-branch.png]] |

|||

[[Category:GSoC 2014 Student proposals|Asfrent]] |

|||

Latest revision as of 16:36, 20 March 2014

Contents

Project description[edit]

The project aims to speed up the VM for transfer code (the transfer step being the slowest of the translation pipeline at the moment).

Application[edit]

General information[edit]

Name: Andrei Sfrent

E-mail address: gsoc a asfrent d net

Other information that may be useful to contact you:

IRC - asfrent on #apertium

Why is it that you are interested in the Apertium project?

I find the projects of this organisation interesting and much related to some of the theory that I studied in school (context free grammars, state machines, compilers). I like getting involved in projects like this one, where I can learn new things while improving the speed and reliability by applying my experience (so a good starting point for me would be VM-for-transfer). Also, I met on IRC some really cool guys, so I think this project has a great team!

Which of the published tasks are you interested in? What do you plan to do?

VM-for-transfer project, along with any other optimisation tasks. I am planning to make VM-for-transfer at least a bit better than the XML tree-walking code and then focus on other bottlenecks in the project.

About me (short summary)[edit]

My name is Andrei Sfrent, I am studying for a Master's Degree in Machine Learning at the Imperial College and I am interested in working on the VM-for-transfer Apertium project in GSoC this summer.

I am proficient in C++ and I also have a good background in the software optimisation area (last year I participated in an optimisation contest organized by Intel and ended on the first place in Europe / forth place worldwide 1 2).

I took a compilers course one year ago, when I had to write a compiler that translated code to LLVM (see an example here which generated this LLVM code). This course took me through the basics of grammars, parsing, abstract syntax trees, code generation and optimisation. You can browse the source code here.

I previously had two internships with Google and I had the chance to work with Map Reduce / Knowledge Graph on YouTube data, enhancing my engineering and design skills.

You can also find out more about me from my resume.

About the project[edit]

My contribution[edit]

First of all, I think performance is key in a lot of software projects, including this one. Nobody likes to wait for things to happen. I also think that my contribution can make parts of the code significantly faster and I also enjoy doing so. I also believe that Apertium is great for helping people translate documents, software and maybe even books. Furthermore, by also targeting languages that receive less attention from other translation projects it has a direct impact on the people that speak those languages. In this context, efficiency matters - building real time translation systems on top of Apertium or translating large amounts of text, like books.

Roadmap and deliverables[edit]

This is my estimated schedule. For details, please see High_level_optimisations and Low_level_optimisations below.

- weeks 1-2 - prepare VM-for-transfer and Apertium for optimisation.

- build a small framework for creating tests from text files using as reference the XML treewalking code output; fthe framework should also allow for easy testing / profiling with valgrind.

- improve the test suite (multiple languages, small texts, large texts).

- fix failing tests for apertium-xfervm.

- rewrite parts of the code using C++11 so we get a more compact code, but equivalent with the original. This way, I get to see more code and get a better understanding of the relations between different parts. [already tried in the proof of concept]

- extra: make the project to compile with clang to LLVM and research LLVM code.

- extra: improve documentation.

Deliverables (weeks 1-2): testing framework for VM-for-transfer, a test suite of 20 tests, tests passing.

- weeks 3-4 - work on the main algorithms and data structures that are present in the transfer code.

- transform the trie that is used for matching strings in the interpreter into a Radix Tree (a compressed version of the trie).

- write a memory allocator for the Radix tree in order to improve data locality (more cache hits).

- extra: refactor the code in order to eliminate as much overhead as possible that comes from copying structures around (such as vectors, strings). [already tried in the proof of concept].

- weeks 5-6 - interpreter analysis and optimisations.

- analyse the interpreter design.

- fix stack datatype issues (current stack only holds strings and string-to-int is really slow, whenever an instruction actually needs to pop integers)

- analyse algorithms used in slow instructions (such as clip) and optimise the code in each one of them. This may involve different matching algorithms or preprocessing.

- decide which instructions should be translated directly to LLVM code (push, pop, jnz, jz, call, test) and which will stay as C++ code.

- extra: improve documentation on each instruction.

Deliverables (weeks 3-6): VM-for-transfer is as fast as the XML tree walking transfer code.

- weeks 7-8 - first phase of porting the generated code to LLVM.

- the idea is to get rid of the main loop of the interpreter and use lli to run the code. Work will be done in a separate branch.

- design an API for the compiler so it will be easy to add new high level instructions, as well as pure LLVM ones.

- start to implement translation to LLVM in the compiler. All instructions will be translated to LLVM calls to C++ code.

- profile the prototype and decide whether it makes sense to move on. I will plan this as a "fail fast" approach - the idea is to find out as soon as possible if it's worth the effort.

- weeks 9-12 - make the compiler translate all the instructions to LLVM.

- implement, test and debug on the new compiler.

- redesign the interpreter as a robust library of high level Apertium instructions.

- make the two work together.

- extra: profile other parts of the Apertium, find easy to fix bottlenecks and fix them. Make Apertium FAST.

Deliverables (weeks 7-12): LLVM compiler for Apertium transfer, VM-transfer is 2x faster than XML based code.

- Project completed. :-)

- Find something else to optimise and bring faster translation to the world!

High level optimisations[edit]

Algorithms and data structures[edit]

Look for better data structures and algorithms that will result in a lower complexity of the system. One thing that we could do to the current design is to compress the trie that stores rules (trie compression involves finding path in which the nodes have only one child and compress that path into a single node). The lookup table in each node will be string indexed, allowing the trie to have better search times because less nodes will be explored (although the same number of characters).

We could also introduce supplementary fast links in the trie (in the lookup tables present at each node) by making an analysis of the most common matches that are needed in practice.

Interpreter redesign[edit]

A profile of a slightly optimised code shows that a lot of time is spent in the interpreter code in order to convert strings to integers. This is because the interpreter stack has been designed to work with a a single data type (strings), so every time a VM instruction needs integral data types we need to convert the strings.

Instruction set[edit]

There are some instructions that take much longer time than others. By analysing the instruction set we should be able to further fragment these time consuming instructions into smaller ones that will perform faster and could be easier to optimise.

Port code to LLVM[edit]

Redesign the compiler to produce LLVM code which would enable us to take advantage of the optimisations built into LLVM. Most of the stack / branching / logical / math instructions will be translated directly to LLVM instructions, while complex instructions would be translated to calls to C++ code (compiled to LLVM as well).

By compiling to LLVM we get rid of most of the VM implementation (the parts that handle stack / branching / logical / math operations), we gain a better, simplified architecture and speed. Hopefully, a lot of speed. This can be done in conjunction with the other optimisations, which would then only apply to the complex instructions of the transfer VM (such as clip) and working with the matching data structures.

Low level optimisations[edit]

Memory allocator[edit]

We could design a memory allocator for the VM data structures that will keep related objects (like nodes in a tree) as close as possible one to the other in order to increase the cache hits).

Branch prediction[edit]

Some parts can be restructured in order to make use of the branch prediction at the CPU level. I worked a little on some parts of the code that had this issue and gained some speedup using this technique.

Constant pool[edit]

The current implementation of the virtual machine spends a lot of time in copying strings from one method to the other. This could be greatly improved by using a string pool.

Caching[edit]

Caching can really improve the behavior of the VM - methods that are called often with (more or less) the same parameters can maintain a cache of the results if they do not have any side effects or depend on the global state. In the proof of concept, caching itself made a difference of about 10%.

Reduce copying of the data structures[edit]

As you will see in the code profiling report below, a lot of time was spent in moving vectors around. This can be avoided by a stricter coding style (marking returned vectors with const& or, when not possible, just using plain references).

Proof of concept (coding challenge and one step further)[edit]

The tools I used to produce the proof of concept were:

- vim - the best editor there is

- valgrind - check for memory leaks

- callgrind (a valgrind tool) - profiling the code

- kcachegrind - GUI for callgrind reports

First I compiled and installed apertium on my machine (using the es-ro pair). I started with a fresh version of VM-for-transfer, by checking out the master branch of the repository. The test files I am using are part of the Parliament Proceedings Parallel Corpus 1996-2011.

In order to properly test the VM, I created two input files, pre-xfervm-10000 and pre-xfervm-1000. Both of them are created by running the first part of the pipeline (until just before the transfer), the only difference is the number of lines that were processed out of the original text (which is given by their suffix). The purpose of the pre-xfervm-10000 file is time evaluation, while the profiling will be done on the smaller one, pre-xfervm-1000, because valgrind takes a lot of time to produce the profiling report.

The fresh version (master branch) of the VM-for-transfer takes about 40s to do the transfer:

andrei@andrei-xps bin $ ./_tm real 0m40.275s user 0m40.183s sys 0m0.052s

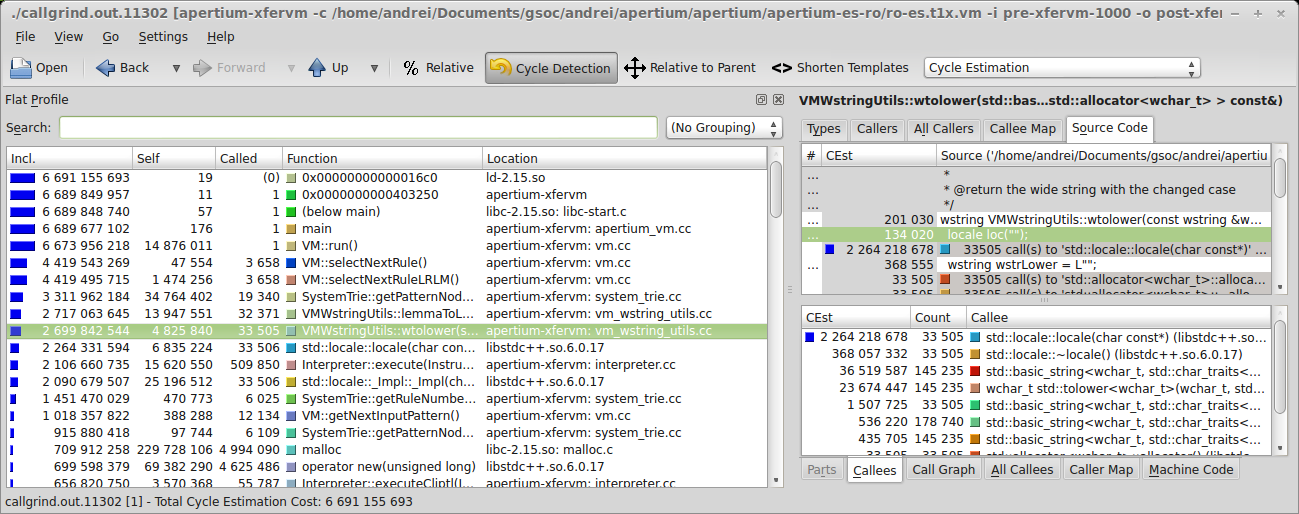

Let's take a look at the profile (remember, times are measured on the -10000 file, while the profile is done on the -1000 input file):

The odd thing here is the wtolower static method that seems to consume a lot of time. So most of the time spent in selecting a matching rule is actually spent making strings lowercase. This was because a locale variable that was always initialized in the respective function. In this branch I fixed the wtolower method. Now the code runs approx 60% faster:

andrei@andrei-xps bin $ ./_tm real 0m25.168s user 0m25.078s sys 0m0.072s

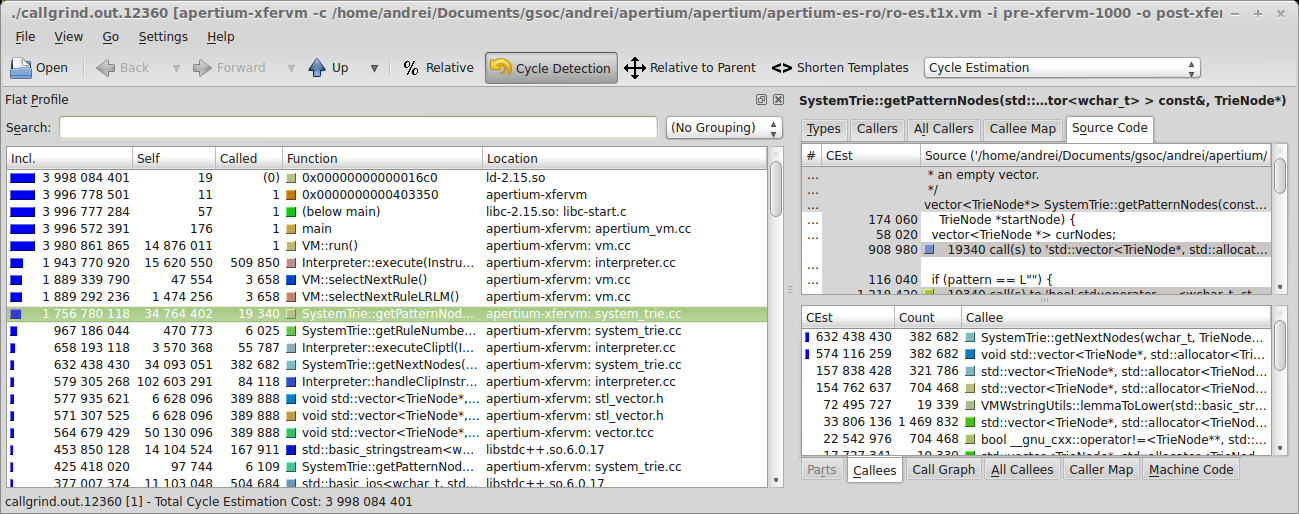

We can see that we got a huge improvement just by fixing one function. I ran the profiler again, looking for the next bottleneck:

As we can see, the SystemTrie::getPatternNodes method is still quite slow. Since the method returns all nodes that correspond to a certain pattern starting from a node, we could cache the results at node level. Another thing that makes this method work slower than it should is vector copying. I decided to do the caching and restructure the code a bit in order to use constant references instead of copying things around (see this commit). The new time we get is, again, smaller:

andrei@andrei-xps bin $ ./_tm real 0m15.677s user 0m15.589s sys 0m0.080s

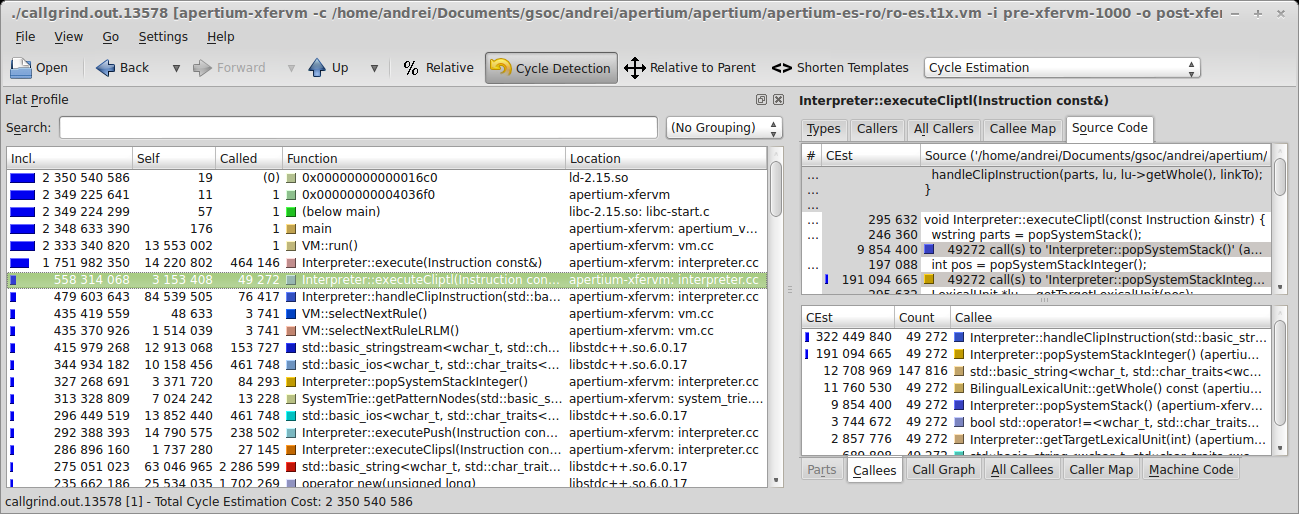

The next step I tried is to do some small, low level optimisations (like improving the code for branch prediction hits, see here). The result is almost unnoticeable, but these kind of optimisations could also improve the efficiency of the code:

andrei@andrei-xps bin $ ./_tm real 0m15.447s user 0m15.377s sys 0m0.064s

This is the first time when we can look at the profiler report and see that one of the slowest parts is the actual execution of the VM instructions. This report also gives us a hint on the interpreter design. It seems that a lot of time is being spent in converting strings to integers and vice versa. By looking at the code I discovered that the interpreter stack can only work with strings, so each time a integer is pushed or popped we need to apply these expensive conversions.