Difference between revisions of "Курсы машинного перевода для языков России/Session 0"

m (moved Машинный перевод для языков России/Session 0 to Курсы машинного перевода для языков России/Session 0: ... to get a better structure.) |

|||

| (9 intermediate revisions by one other user not shown) | |||

| Line 22: | Line 22: | ||

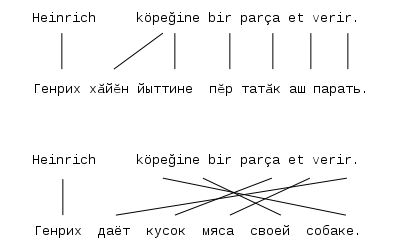

Direct, or word-for-word machine translation works by reading words in the source language one at a time, and looking them up in a bilingual word list of surface forms. Words may also be deleted, or left out, and maybe translated to one or more words. No grammatical analysis is done, so even simple errors, such as agreement in gender and number between a determiner and head noun will remain in the target language output. |

Direct, or word-for-word machine translation works by reading words in the source language one at a time, and looking them up in a bilingual word list of surface forms. Words may also be deleted, or left out, and maybe translated to one or more words. No grammatical analysis is done, so even simple errors, such as agreement in gender and number between a determiner and head noun will remain in the target language output. |

||

[[File:Session0 primer1.svg|center]] |

|||

:EXEMPLE 1 |

|||

:Heinrich köpeğine bir parça et verir. |

|||

:<< TXUVAIX AQUÍ >> |

|||

:Генрих сетö яй кусöк аслас понлы. <!-- Heinrich gives piece meat his dog.DAT --> |

|||

:Heinrich antoi lihapalan koiralleen. <!-- Heinrich gives meat.piece.ACC dog.HIS.TO --> |

|||

:Генрих даёт кусок мяса своей собаке. |

|||

===Transfer=== |

===Transfer=== |

||

Transfer-based machine translation works by first converting the source language to a language-dependent intermediate representation, and then rules are applied to this intermediate representation in order to change the structure of the source language to the structure of the target language. The translation is generated from this representation using both bilingual dictionaries and grammatical rules. |

Transfer-based machine translation works by first converting the source language to a language-dependent intermediate representation, and then rules are applied to this intermediate representation in order to change the structure of the source language to the structure of the target language. The translation is generated from this representation using both bilingual dictionaries and grammatical rules. |

||

[[File:Session0 primer2.svg|300px|right]] |

|||

There can be differences in the level of abstraction of the intermediate representation. We can distinguish two broad groups, shallow transfer, and deep transfer. In shallow-transfer MT the intermediate representation is usually either based on morphology or shallow syntax. In deep-transfer MT the intermediate representation usually includes some kind of parse tree or graph structure (see images on the right). |

There can be differences in the level of abstraction of the intermediate representation. We can distinguish two broad groups, shallow transfer, and deep transfer. In shallow-transfer MT the intermediate representation is usually either based on morphology or shallow syntax. In deep-transfer MT the intermediate representation usually includes some kind of parse tree or graph structure (see images on the right). |

||

| ⚫ | Transfer-based MT usually works as follows: The original text is first analysed and disambiguated morphologically (and in the case of deep transfer, syntactically) in order to obtain the source language intermediate representation. The transfer process then converts this final representation (still in the source language) to a representation of the same level of abstraction in the target language. From the target language representation, the target language is generated. |

||

:EXEMPLE 2 |

|||

| ⚫ | Transfer-based MT usually works as follows: The original text is first analysed and disambiguated morphologically (and in the case of deep transfer, syntactically) in order to obtain the source language intermediate representation. The transfer process then converts this final representation (still in the source language) to a representation of the same level of abstraction in the target language. From the target language representation, the target language is generated. |

||

===Interlingual=== |

===Interlingual=== |

||

| Line 60: | Line 54: | ||

This is the problem that in a given language there is usually more than one way to communicate the same meaning for any given meaning. |

This is the problem that in a given language there is usually more than one way to communicate the same meaning for any given meaning. |

||

:EXEMPLE 4 |

|||

:Эсир мӗнле пурӑнатӑр? |

:Эсир мӗнле пурӑнатӑр? |

||

:Мӗнле пурнӑҫсем? |

:Мӗнле пурнӑҫсем? |

||

| Line 67: | Line 60: | ||

All of these questions demand the same answer (how are you), but they may be more or less frequently used, or emphasise different things. |

All of these questions demand the same answer (how are you), but they may be more or less frequently used, or emphasise different things. |

||

In Apertium, for a given input sentence, one output sentence is produced. It is up to the designer of the translation system to choose which translation they want the system to produce. Often we recommend the most literal translation possible, as this reduces the necessity of transfer rules. |

In Apertium, for a given input sentence, one output sentence is produced. It is up to the designer of the translation system to choose which translation they want the system to produce. Often we recommend the most literal translation possible, as this reduces the necessity of transfer rules. |

||

| Line 76: | Line 70: | ||

:EXEMPLE 5 |

:EXEMPLE 5 |

||

:''Minä pidän uimisesta'' |

|||

| ⚫ | |||

| ⚫ | |||

===Description=== |

===Description=== |

||

| Line 128: | Line 124: | ||

| <code>apertium-tt-ba.ba.twol</code> || Phonological rules || Bashkir morphophonological rules || [[Машинный перевод для языков России/Session 1|1]] [[Машинный перевод для языков России/Session 2|2]] |

| <code>apertium-tt-ba.ba.twol</code> || Phonological rules || Bashkir morphophonological rules || [[Машинный перевод для языков России/Session 1|1]] [[Машинный перевод для языков России/Session 2|2]] |

||

|- |

|- |

||

| <code>apertium- |

| <code>apertium-tt-ba.tt.lexc</code> || Dictionary || Tatar morphotactic dictionary, used for analysis and generation || [[Машинный перевод для языков России/Session 1|1]] [[Машинный перевод для языков России/Session 2|2]] |

||

|- |

|- |

||

| <code>apertium-tt-ba.tt.twol</code> || Phonological rules || Tatar morphophonological rules || [[Машинный перевод для языков России/Session 1|1]] [[Машинный перевод для языков России/Session 2|2]] |

| <code>apertium-tt-ba.tt.twol</code> || Phonological rules || Tatar morphophonological rules || [[Машинный перевод для языков России/Session 1|1]] [[Машинный перевод для языков России/Session 2|2]] |

||

Latest revision as of 12:00, 31 January 2012

This session gives a short overview of the field of rule-based machine translation and introduces how the free/open-source rule-based machine translation platform Apertium is used.

There are two principal types of machine translation:

- Rule-based machine translation (RBMT), also called symbolic MT; Apertium falls into this category and this session focusses on the sub-types of RBMT

- Corpus-based machine translation; uses collections of previously translated sentences to propose translations of new sentences.

Corpus-based MT can be split into two main subgroups: statistical and example-based. In its basic form, statistical machine translation takes a collection of previously translated sentences (a parallel corpus) and calculates which tokens co-occur most frequently. Each pair of co-occurring tokens is assigned a probability. To translate a new sentence, the system looks up its words, combines their probabilities to score many candidate translations, and selects the candidate with the highest probability. The first statistical machine translation systems used co-occurrences of words, but newer systems can use sequences of words (sometimes called phrases) and hierarchical trees.
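The co-occurrence idea can be illustrated with a minimal Python sketch. The three-sentence German-English corpus and all function names are hypothetical, and the relative-frequency estimate used here is a deliberate simplification rather than the training procedure of any real statistical MT system.

```python
from collections import Counter, defaultdict

# Toy parallel corpus (hypothetical data, German -> English).
PARALLEL_CORPUS = [
    ("das Haus", "the house"),
    ("das Buch", "the book"),
    ("ein Buch", "a book"),
]

def cooccurrence_counts(corpus):
    """Count how often each source token appears alongside each target token."""
    counts = defaultdict(Counter)
    for source, target in corpus:
        for s in source.split():
            for t in target.split():
                counts[s][t] += 1
    return counts

def translation_probabilities(counts):
    """Turn raw counts into relative frequencies for each source token."""
    probs = {}
    for s, targets in counts.items():
        total = sum(targets.values())
        probs[s] = {t: c / total for t, c in targets.items()}
    return probs

def best_translation(probs, word):
    """Pick the target token with the highest estimated probability."""
    candidates = probs[word]
    return max(candidates, key=candidates.get)

counts = cooccurrence_counts(PARALLEL_CORPUS)
probs = translation_probabilities(counts)
print(best_translation(probs, "das"))   # the
print(best_translation(probs, "Buch"))  # book
```

With so little data, words such as "Haus" and "ein" remain tied between two candidates, which hints at why real systems need large corpora.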

By contrast, example-based machine translation can be thought of as translation by analogy. It still uses a parallel corpus, but instead of assigning probabilities to words, it tries to learn by example. For example, given the sentence pairs (es) A la chica le gustan los gatos → (de) Das Mädchen mag Katzen and (es) A la chica le gustan los elefantes → (de) Das Mädchen mag Elefanten, it might produce the translation template A la chica le gustan X → Das Mädchen mag X. When translating a new sentence, the matching parts are looked up and substituted.
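Translation by analogy can be sketched in a few lines of Python. This is a naive illustration that only generalises over a differing final chunk, and the function names and the small lexicon are hypothetical. Because it keeps every shared source token, the template it learns retains "los" on the Spanish side, so it is slightly more specific than the template given in the text.

```python
def common_prefix(tokens_a, tokens_b):
    """Return the longest shared prefix of two token lists."""
    i = 0
    while i < min(len(tokens_a), len(tokens_b)) and tokens_a[i] == tokens_b[i]:
        i += 1
    return tokens_a[:i]

def generalise(pair_a, pair_b):
    """Build a (source prefix, target prefix) template from two sentence pairs."""
    src_prefix = common_prefix(pair_a[0].split(), pair_b[0].split())
    tgt_prefix = common_prefix(pair_a[1].split(), pair_b[1].split())
    return src_prefix, tgt_prefix

def translate(sentence, template, lexicon):
    """Apply the template and translate the variable part word by word."""
    src_prefix, tgt_prefix = template
    tokens = sentence.split()
    if tokens[:len(src_prefix)] != src_prefix:
        return None  # the sentence does not match the learned example
    filler = tokens[len(src_prefix):]
    if not filler or not all(word in lexicon for word in filler):
        return None
    return " ".join(tgt_prefix + [lexicon[word] for word in filler])

# Sentence pairs from the text; the lexicon is an assumed resource.
template = generalise(
    ("A la chica le gustan los gatos", "Das Mädchen mag Katzen"),
    ("A la chica le gustan los elefantes", "Das Mädchen mag Elefanten"),
)
LEXICON = {"gatos": "Katzen", "elefantes": "Elefanten", "perros": "Hunde"}

print(translate("A la chica le gustan los perros", template, LEXICON))
```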

Automatically applying a large translation memory to a text may also be considered a form of corpus-based machine translation. In practice, the lines between statistical and example-based MT are blurred. Both rule-based and corpus-based methods have advantages and disadvantages. Corpus-based methods may produce translations which sound more fluent, but the meaning may be less faithfully reproduced. Rule-based systems tend to produce translations which are less fluent, but which preserve more of the source language meaning.

Rule-based and corpus-based systems can also be combined in various ways as hybrid systems. For example, one might make a hybrid system that uses an example-based system to find equivalences, and then uses a rule-based system as a backoff when no pattern is found.

Types of machine translation systems

Direct

Direct, or word-for-word, machine translation works by reading words in the source language one at a time and looking them up in a bilingual word list of surface forms. Words may also be deleted, or may be translated to one or more words. No grammatical analysis is done, so even simple errors, such as a lack of agreement in gender and number between a determiner and its head noun, will remain in the target language output.
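A minimal Python sketch of the direct approach follows. The English-to-Spanish word list is hypothetical, and marking unknown words with an asterisk is just a convention chosen for this sketch. Note how the missing grammatical analysis produces exactly the kind of agreement error described above.

```python
# Hypothetical bilingual word list of surface forms (English -> Spanish).
# An empty string deletes the word; a value may also contain several words.
WORD_LIST = {
    "the": "el",
    "house": "casa",
    "is": "es",
    "big": "grande",
    "it": "",               # deleted in the output
    "cannot": "no puede",   # one word translated to two
}

def direct_translate(sentence):
    """Translate word by word, with no grammatical analysis at all."""
    output = []
    for word in sentence.lower().split():
        # Unknown words are passed through, marked with an asterisk.
        target = WORD_LIST.get(word, "*" + word)
        if target:
            output.append(target)
    return " ".join(output)

print(direct_translate("The house is big"))
print(direct_translate("It is big"))
```

The first call yields "el casa es grande": the determiner stays masculine although "casa" is feminine, so the correct "la casa es grande" is never produced.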

Transfer

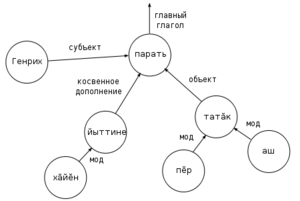

Transfer-based machine translation works by first converting the source language to a language-dependent intermediate representation. Rules are then applied to this intermediate representation in order to change the structure of the source language to the structure of the target language. The translation is generated from this representation using both bilingual dictionaries and grammatical rules.

There can be differences in the level of abstraction of the intermediate representation. We can distinguish two broad groups, shallow transfer, and deep transfer. In shallow-transfer MT the intermediate representation is usually either based on morphology or shallow syntax. In deep-transfer MT the intermediate representation usually includes some kind of parse tree or graph structure (see images on the right).

Transfer-based MT usually works as follows: The original text is first analysed and disambiguated morphologically (and in the case of deep transfer, syntactically) in order to obtain the source language intermediate representation. The transfer process then converts this final representation (still in the source language) to a representation of the same level of abstraction in the target language. From the target language representation, the target language is generated.
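The analyse, transfer and generate stages can be sketched as a toy shallow-transfer pipeline in Python. All dictionaries, tag names and function names below are hypothetical and do not reflect Apertium's actual data formats. The disambiguation step is omitted because the toy analyser is unambiguous.

```python
# Source-language analyser: surface form -> (lemma, tags). Toy data.
ANALYSER = {
    "the": ("the", {"pos": "det"}),
    "house": ("house", {"pos": "n", "num": "sg"}),
    "houses": ("house", {"pos": "n", "num": "pl"}),
}

# Bilingual dictionary: source lemma -> (target lemma, extra target tags).
BILINGUAL = {"the": ("el", {}), "house": ("casa", {"gen": "f"})}

# Target-language generator: (lemma, gender, number) -> surface form.
GENERATOR = {
    ("el", "m", "sg"): "el", ("el", "f", "sg"): "la",
    ("el", "m", "pl"): "los", ("el", "f", "pl"): "las",
    ("casa", "f", "sg"): "casa", ("casa", "f", "pl"): "casas",
}

def analyse(text):
    """Convert surface forms into lemma-plus-tags units (source side)."""
    units = []
    for word in text.lower().split():
        lemma, tags = ANALYSER[word]
        units.append({"lemma": lemma, **tags})
    return units

def transfer(units):
    """Map units to the target language, then apply one structural rule."""
    target = []
    for unit in units:
        lemma, extra = BILINGUAL[unit["lemma"]]
        target.append({**unit, **extra, "lemma": lemma})
    # Rule: a determiner agrees in gender and number with the following noun.
    for det, noun in zip(target, target[1:]):
        if det["pos"] == "det" and noun["pos"] == "n":
            det["gen"], det["num"] = noun["gen"], noun["num"]
    return target

def generate(units):
    """Produce target-language surface forms from the target representation."""
    return " ".join(GENERATOR[(u["lemma"], u["gen"], u["num"])] for u in units)

def translate(text):
    return generate(transfer(analyse(text)))

print(translate("the houses"))  # las casas
```

Because agreement is handled on the intermediate representation, the determiner comes out as "las" rather than the uninflected "el" that a word-for-word lookup would give.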

Interlingual

In transfer-based machine translation, rules are written on a pair-by-pair basis, making them specific to a language pair. In the interlingua approach, the intermediate representation is entirely language independent. There are a number of benefits to this approach, but also disadvantages. The main benefit is that in order to add a new language to an existing MT system, it is only necessary to write an analyser and a generator for the new language, and not transfer rules between the new language and all the existing languages. The drawback is that it is very hard to define an interlingua which can truly represent all nuances of all natural languages, and in practice interlingua systems are only used for limited translation domains.
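The scaling argument can be made concrete with a little arithmetic, sketched here in Python. The sketch assumes one transfer rule set per translation direction (that is, per ordered language pair); the function names are hypothetical.

```python
def transfer_rule_sets(n_languages):
    """Directed transfer rule sets needed to connect every language pair."""
    return n_languages * (n_languages - 1)

def new_rule_sets_for_added_language(existing_languages):
    """Extra rule sets when one language joins a transfer-based system."""
    return 2 * existing_languages

# Modules a new language needs under the interlingua approach:
# one analyser and one generator, however many languages already exist.
INTERLINGUA_MODULES_PER_LANGUAGE = 2

print(transfer_rule_sets(5))                # 20
print(new_rule_sets_for_added_language(5))  # 10
```

Under this assumption, adding a sixth language to a five-language transfer system means ten new rule sets, whereas the interlingua approach needs only the analyser and generator for the new language.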

Problems in machine translation

Analysis

This is also called the problem of ambiguity. The problem is that many sentences in natural language can have more than one interpretation, and these interpretations may be translated differently in different languages. Consider the following example:

- EXAMPLE 3

- An example of syntactic ambiguity in Russian or Chuvash is needed here (the simpler, the better).

- Вот друг Саша, которого я вчера встретил.

Synthesis

The problem here is that a given language usually offers more than one way to communicate any given meaning.

- Эсир мӗнле пурӑнатӑр?

- Мӗнле пурнӑҫсем?

- Мӗнле халсем?

- Мӗнле еҫсем?

All of these questions ask the same thing ("How are you?"), but they may be more or less frequently used, or emphasise different things.

In Apertium, for a given input sentence, one output sentence is produced. It is up to the designer of the translation system to choose which translation they want the system to produce. We often recommend the most literal translation possible, as this reduces the need for transfer rules.

Transfer

Languages have different ways of expressing the same meaning. These ways are often incompatible between languages. Consider the following examples expressing the same content:

- EXAMPLE 5

- Minä pidän uimisesta

In Apertium, rules are applied which convert source language structure to target language structure using sequences of lexical forms as an intermediate representation. For further information see: Session 5: Structural transfer basics.

Description

The final problem is that of description. In order to build a machine translation system it is necessary for people with knowledge of both languages to sit down and codify that knowledge in a form explicit and declarative enough for the machine to be able to process it.

Translation is often an unconscious process: we translate without reflecting on the rules that we use. The machine has no such unconscious knowledge and must be told exactly what operations to perform. If these operations rely on information that the machine does not have, or cannot have, then machine translation will not be possible.

But for many sentences, this information is not necessary:

- EXAMPLE 6

Practice

Installation

For guidance on installing Apertium, HFST and Constraint Grammar, see the handout.

Usage

To use Apertium, first open up a terminal. Now cd into the directory of the language pair you want to test.

$ cd apertium-aa-bb

You can test it with the following command:

$ echo "Text that you want to translate" | apertium -d . aa-bb

For example, from Turkish to Kyrgyz:

$ echo "En güzel kız evime geldi." | apertium -d . tr-ky

Эң жакшынакай кыз үйүмө келди.

Directory layout
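The same pipeline can be driven from a script. The following Python sketch wraps the command shown above using the standard `subprocess` module; the function names are hypothetical, and it assumes that `apertium` is on the `PATH` and that the language pair has been compiled in the given directory.

```python
import subprocess

def build_command(pair, directory="."):
    """Build the argument list for: apertium -d <directory> <pair>."""
    return ["apertium", "-d", directory, pair]

def translate(text, pair, directory="."):
    """Send text to apertium on standard input and return its output.

    Assumes apertium is installed and the pair is compiled in `directory`.
    """
    result = subprocess.run(
        build_command(pair, directory),
        input=text,
        capture_output=True,
        text=True,
        check=True,  # raise an error if apertium exits with a failure code
    )
    return result.stdout.strip()
```

Called from inside the language pair directory, `translate("En güzel kız evime geldi.", "tr-ky")` would run the same translation as the shell example.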

Below is a table which gives a description of the main data files that can be found in a typical language pair, and links to the sessions where they are described.

| File | Type | Description | Session(s) |
|---|---|---|---|
| `apertium-tt-ba.ba.lexc` | Dictionary | Bashkir morphotactic dictionary, used for analysis and generation | 1, 2 |
| `apertium-tt-ba.ba.twol` | Phonological rules | Bashkir morphophonological rules | 1, 2 |
| `apertium-tt-ba.tt.lexc` | Dictionary | Tatar morphotactic dictionary, used for analysis and generation | 1, 2 |
| `apertium-tt-ba.tt.twol` | Phonological rules | Tatar morphophonological rules | 1, 2 |
| `apertium-tt-ba.tt.rlx` | Tagging rules | Tatar constraint grammar, used for morphological disambiguation | 3 |
| `apertium-tt-ba.ba.rlx` | Tagging rules | Bashkir constraint grammar, used for morphological disambiguation | 3 |
| `apertium-tt-ba.tt-ba.dix` | Dictionary | Tatar-Bashkir bilingual dictionary, used for lexical transfer | 4 |
| `apertium-tt-ba.tt-ba.lrx` | Lexical selection rules | Tatar→Bashkir lexical selection rules | 4 |
| `apertium-tt-ba.ba-tt.lrx` | Lexical selection rules | Bashkir→Tatar lexical selection rules | 4 |
| `apertium-tt-ba.tt-ba.t1x` | Transfer rules | Tatar→Bashkir first-level rule file, for structural transfer | 5, 6 |
| `apertium-tt-ba.ba-tt.t1x` | Transfer rules | Bashkir→Tatar first-level rule file, for structural transfer | 5, 6 |