Documentation of Matxin 1.0

General architecture

The objectives are to be open source, to be interoperable with other systems, and to stay in tune with the development of Apertium and FreeLing. To this end, FreeLing is used for the analysis of Spanish (as it gives a deeper analysis than Apertium does), and the transducers from Apertium are used in transfer and generation.

The design is based on the classic transfer architecture of machine translation, with three basic components: analysis of Spanish, transfer from Spanish to Basque, and generation of Basque. It builds on previous work by the IXA group in building a prototype of Matxin and on the design of Apertium. Two modules are added on top of the basic architecture, de-formatting and re-formatting, whose aim is to preserve the format of the texts being translated and to allow surf-and-translate.

According to the initial design, no semantic disambiguation is performed; however, a number of named entities, collocations and other multiword terms are included in the lexica, which makes this less important.

As the design was object-oriented, three main objects were defined: sentence, chunk and node. A chunk corresponds roughly to a phrase (always as delimited by the analyser), and a node corresponds to a word, bearing in mind that a multiword unit is treated as a single node.

There follows a short description of each stage.

De-formatter and re-formatter

Analyser

The dependency analyser has been developed by the UPC and has been added to the existing modules of FreeLing (tokeniser, morphological analysis, disambiguation and chunking).

The analyser is called Txala, and it annotates the dependency relations between nodes within a chunk and between chunks in a sentence. This information appears in the output format (see section 3) only indirectly: rather than being encoded in specific attributes, it is expressed implicitly by the nesting of the tags (for example, a node element nested within another means that the inner node depends on the outer one).

As well as adding this functionality, the output of the analyser has been adapted to the interchange format which is described in section 3.

Information from the analysis

The result of the analysis is made up of three elements or objects (as previously described):

- Nodes: These mark words or multiword units and carry the following information: lexical form, lemma, part-of-speech, and morphological inflection information.

- Chunks: These give the information for a (pseudo-)phrase: its type, syntactic information and the dependencies between its nodes.

- Sentence: This gives the type of sentence and the dependencies between its chunks.

Example

For the phrase,

- "porque habré tenido que comer patatas"

The output would be made up of the following chunks:

- subordinate_conjunction: porque[cs]

- verb_chain: haber[vaif1s]+tener[vmpp0sm]+que[s]+comer[vmn]

- noun_chain: patatas[ncfp]
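
The chunk notation used in this example (lemma[tag] items joined by "+") can be taken apart mechanically. The following is a minimal Python sketch, assuming that notation is followed exactly; the helper name parse_chain is hypothetical and is not part of Matxin, and the notation itself is only the one used for illustration here, not the interchange format.

```python
import re

# Hypothetical helper, not part of Matxin: matches one "lemma[tag]" item.
ITEM = re.compile(r"([^\[\]+]+)\[([^\]]*)\]")


def parse_chain(chain: str) -> list[tuple[str, str]]:
    """Split a chain such as 'haber[vaif1s]+tener[vmpp0sm]' into (lemma, tag) pairs."""
    return [(m.group(1), m.group(2)) for m in ITEM.finditer(chain)]


verb_chain = parse_chain("haber[vaif1s]+tener[vmpp0sm]+que[s]+comer[vmn]")
noun_chain = parse_chain("patatas[ncfp]")

for lemma, tag in verb_chain:
    print(lemma, tag)
```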

Transfer

In the transfer stage, the same objects and interchange format are maintained. The transfer stages are as follows:

- Lexical transfer

- Structural transfer in the sentence

- Structural transfer within the chunk

Lexical transfer

Firstly, the lexical transfer is done using part of a bilingual dictionary provided by Elhuyar, which is compiled into a lexical transducer in the Apertium format.

Structural transfer within the sentence

Owing to the different syntactic structure of the phrases in each language, some information is transferred between chunks, and chunks can be created or removed.

In the previous example, during this stage, the information for person and number of the object (third person plural) and the type of subordination (causal) are introduced into the verb chain from the other chunks.
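
The movement of information described above can be pictured with a small Python sketch. It is only an illustration under stated assumptions: chunks are modelled as plain dictionaries, and every key and function name here (features, role, person_number, subordination, inter_chunk_transfer) is hypothetical rather than taken from the Matxin code or grammars.

```python
from copy import deepcopy

# Hypothetical, simplified chunks for "porque habré tenido que comer patatas".
EXAMPLE = [
    {"type": "subordinate_conjunction", "lemma": "porque", "subordination": "causal"},
    {"type": "verb_chain", "features": {"subj": "1s"}},
    {"type": "noun_chain", "lemma": "patata", "role": "obj", "person_number": "3p"},
]


def inter_chunk_transfer(chunks):
    """Copy object agreement and subordination type into the verb chain.

    The conjunction chunk is removed once its information has been passed on.
    The input list is left untouched.
    """
    chunks = deepcopy(chunks)
    verb = next(c for c in chunks if c["type"] == "verb_chain")
    kept = []
    for chunk in chunks:
        if chunk["type"] == "subordinate_conjunction":
            verb["features"]["subordination"] = chunk["subordination"]
            continue  # this chunk does not survive the transfer
        if chunk.get("role") == "obj":
            verb["features"]["obj"] = chunk["person_number"]
        kept.append(chunk)
    return kept


result = inter_chunk_transfer(EXAMPLE)
print(result[0]["features"])
```

In this sketch the verb chain ends up carrying the subject, object and subordination information, and only the verb chain and the noun chain remain.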

Structural transfer within the chunk

This is a complex process inside verb chains and easier in noun chains. A finite-state grammar (see section 4) has been developed for verb transfer.

Initially, the grammar was designed to be compiled either with the Apertium dictionary tools or with the free-software FSA package. However, this turned out to be untenable, and the grammar was instead converted into a set of regular expressions that are read and processed by a standard program, which also deals with the transfer of noun chains.

Example

For the previously mentioned phrase:

- "porque habré tenido que comer patatas"

The output from the analysis module was:

- subordinate_conjunction: porque[cs]

- verb_chain: haber[vaif1s]+tener[vmpp0sm]+que[s]+comer[vmn]

- noun_chain: patatas[ncfp]

and the output from the transfer will be:

- verb_chain: jan(main) [partPerf] / behar(per) [partPerf] / izan(dum) [partFut] / edun(aux) [indPres][subj1s][obj3p]+lako[causal]

- noun_chain: patata[noun]+[abs][pl]

Generation

This also keeps the same objects and formats. The stages are as follows:

- Syntactic generation

- Morphological generation

Syntactic generation

The main job of syntactic generation is to re-order the words in a chunk as well as the chunks in a phrase.

The order inside the chunk is effected through a small grammar which gives the element order inside Basque phrases and is expressed by a set of regular expressions.

The order of the chunks in the phrase is decided by a rule-based recursive process.

Morphological generation

Once the word order inside each chunk has been decided, generation proceeds from the last word in the chunk, using either its own morphological information or that inherited from the transfer phase. This is because in Basque the morphological inflectional information (case, number and other attributes) is normally assigned to the phrase as a whole and is added as a suffix to the last word in the phrase. In verb chains, additional morphological generation is needed in other parts of the chunk as well as on the last word.

This generation is performed using a morphological dictionary generated by IXA from the EDBL database, which is compiled into a lexical transducer using the programs from Apertium and following their specifications and formats.

Example

For the previously given phrase, the stages are:

- "porque habré tenido que comer patatas"

The output from analysis is:

- subordinate_conjunction: porque[cs]

- verb_chain: haber[vaif1s]+tener[vmpp0sm]+que[s]+comer[vmn]

- noun_chain: patatas[ncfp]

The output from the transfer stage is:

- verb_chain: jan(main) [partPerf] / behar(per) [partPerf] / izan(dum) [partFut] / edun(aux) [indPres][subj1s][obj3p]+lako[causal]

- noun_chain: patata[noun]+[abs][pl]

After the generation phase, the final result will be:

- "patatak jan behar izango ditudalako"[1]

Although the details of the modules and the linguistic data are presented in section 4, it should be underlined that the design is modular: it is organised into the basic modules of analysis, transfer and generation, with a clear separation of data and algorithms. Within the data, the dictionaries and the grammars are also clearly separated.

Intercommunication between modules

An XML format has been designed for communication between the various stages of the translation process. All of these formats are specified within a single DTD. The format is designed to be sufficiently powerful for the translation process, but also light enough to allow fairly fast translation.

This format will facilitate interoperability (anyone can change any of the modules while keeping the rest the same) and the addition of new languages (although in this case the transfer phase would need to be adjusted).

Although post-editing of the results is not one of the stated objectives of the project, the format takes it into account (by means of a ref attribute), which will facilitate the use of these tools in future projects.

The format is described in the following DTD, which can be used to validate the syntax of the interchange formats.

As can be seen, two attributes, ord and alloc, are used to recover the format, and ref is used for post-editing. The rest of the attributes correspond to the previously mentioned linguistic information.

An XSLT stylesheet has been prepared in order to see the output of each of the modules in a graphical format.

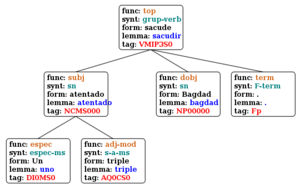

Three examples of the application of this format through each of the basic phases of translation (analysis, transfer and generation) will now be described taking as an example the translation of the phrase "Un triple atentado sacude Bagdad".

Analysis format

The analysis is represented in the interchange format as follows:

<?xml version='1.0' encoding='iso-8859-1'?>

<?xml-stylesheet type='text/xsl' href='profit.xsl'?>

<corpus>

<SENTENCE ord='1'>

<CHUNK ord='2' type='grup-verb' si='top'>

<NODE ord='4' alloc='19' form='sacude' lem='sacudir' mi='VMIP3S0'> </NODE>

<CHUNK ord='1' type='sn' si='subj'>

<NODE ord='3' alloc='10' form='atentado' lem='atentado' mi='NCMS000'>

<NODE ord='1' alloc='0' form='Un' lem='uno' mi='DI0MS0'> </NODE>

<NODE ord='2' alloc='3' form='triple' lem='triple' mi='AQ0CS0'> </NODE>

</NODE>

</CHUNK>

<CHUNK ord='3' type='sn' si='obj'>

<NODE ord='5' alloc='26' form='Bagdad' lem='Bagdad' mi='NP00000'> </NODE>

</CHUNK>

<CHUNK ord='4' type='F-term' si='modnomatch'>

<NODE ord='6' alloc='32' form='.' lem='.' mi='Fp'> </NODE>

</CHUNK>

</CHUNK>

</SENTENCE>

</corpus>

The dependency hierarchy described earlier is easiest to see when the listing is indented; the programs, however, obtain it from the nesting of the CHUNK and NODE tags. As can be seen, the format is simple but very powerful.
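
As an illustration of how the nesting encodes dependencies, here is a minimal Python sketch using the standard xml.etree.ElementTree module. It works on a trimmed copy of the listing above (the punctuation chunk and the XML declaration are left out). The function names are hypothetical, and representing a dependency between chunks by the forms of their head nodes is a simplifying assumption, not something the format prescribes.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the analysis listing (punctuation chunk omitted).
ANALYSIS = """
<corpus>
<SENTENCE ord='1'>
<CHUNK ord='2' type='grup-verb' si='top'>
<NODE ord='4' alloc='19' form='sacude' lem='sacudir' mi='VMIP3S0'> </NODE>
<CHUNK ord='1' type='sn' si='subj'>
<NODE ord='3' alloc='10' form='atentado' lem='atentado' mi='NCMS000'>
<NODE ord='1' alloc='0' form='Un' lem='uno' mi='DI0MS0'> </NODE>
<NODE ord='2' alloc='3' form='triple' lem='triple' mi='AQ0CS0'> </NODE>
</NODE>
</CHUNK>
<CHUNK ord='3' type='sn' si='obj'>
<NODE ord='5' alloc='26' form='Bagdad' lem='Bagdad' mi='NP00000'> </NODE>
</CHUNK>
</CHUNK>
</SENTENCE>
</corpus>
"""


def chunk_head(chunk):
    """Return the form of the chunk's top-level NODE (taken here as its head)."""
    return chunk.find("NODE").get("form")


def dependencies(root):
    """Collect (dependent, head) pairs implied by element nesting."""
    pairs = []
    for parent in root.iter():
        for child in parent:
            if parent.tag == "NODE" and child.tag == "NODE":
                pairs.append((child.get("form"), parent.get("form")))
            elif parent.tag == "CHUNK" and child.tag == "CHUNK":
                pairs.append((chunk_head(child), chunk_head(parent)))
    return pairs


deps = dependencies(ET.fromstring(ANALYSIS.strip()))
for dependent, head in deps:
    print(f"{dependent} -> {head}")
```

No dependency attribute is read anywhere in this sketch: the pairs come purely from which element contains which.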

The same information, as rendered by the above-mentioned stylesheet, is shown in the figure for the phrase "Un triple atentado sacude Bagdad".

Transfer format

The format remains the same, but the information is translated. The attribute ref is added in order to preserve the order of the source sentence; post-editing will need this information to be carried through the various phases. On the other hand, the information held in the attribute ord is dropped, as the new order will be calculated in the generation stage.

It is also possible to see that various elements have been removed although their information has been inherited by other elements.

<?xml version='1.0' encoding='iso-8859-1'?>

<?xml-stylesheet type='text/xsl' href='profit.xsl'?>

<corpus>

<SENTENCE ord='1' ref='1'>

<CHUNK ord='2' ref='2' type='adi-kat' si='top' headpos='[ADI][SIN]' headlem='_astindu_'

trans='DU' objMi='[NUMS]' cas='[ABS]' length='2'>

<NODE form='astintzen' ord='0' ref='4' alloc='19' lem='astindu' pos='[NAG]' mi='[ADI][SIN]+[AMM][ADOIN]+[ASP][EZBU]'>

<NODE form='du' ord='1' ref='4' alloc='19' lem='edun' pos='[ADL]' mi='[ADL][A1][NR_HU][NK_HU]'/>

</NODE>

<CHUNK ord='0' ref='1' type='is' si='subj' mi='[NUMS]' headpos='[IZE][ARR]'

headlem='atentatu' cas='[ERG]' length='3'>

<NODE form='atentatu' ord='0' ref='3' alloc='10' lem='atentatu' pos='[IZE][ARR]'

mi='[NUMS]'>

<NODE form='batek' ord='2' ref='1' alloc='0' lem='bat' pos='[DET][DZH]'> </NODE>

<NODE form='hirukoitz' ord='1' ref='2' alloc='3' lem='hirukoitz' pos='[IZE][ARR]'/>

</NODE>

</CHUNK>

<CHUNK ord='1' ref='3' type='is' si='obj' mi='[NUMS]' headpos='[IZE][LIB]' headlem='Bagdad'

cas='[ABS]' length='1'>

<NODE form='Bagdad' ord='0' ref='5' alloc='26' lem='Bagdad' pos='[IZE][LIB]' mi='[NUMS]'> </NODE>

</CHUNK>

<CHUNK ord='3' ref='4' type='p-buka' si='modnomatch' headpos='Fp' headlem='.' cas='[ZERO]'

length='1'>

<NODE form='.' ord='0' ref='6' alloc='32' lem='.' pos='Fp'> </NODE>

</CHUNK>

</CHUNK>

</SENTENCE>

</corpus>

The result is the phrase: "Atentatu hirukoitz batek Bagdad astintzen du"[2]
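
The listing above carries ord values on both chunks and nodes. Reading them as target-order positions (chunks ordered by their ord, then nodes ordered by their ord within each chunk) reproduces the word order of the result. The Python sketch below shows this on a trimmed copy of the listing that keeps only the form, ord and type attributes. This reading of ord is an assumption drawn from this one example, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the listing: only form, ord and type are kept.
TRANSFER = """
<SENTENCE ord='1' ref='1'>
<CHUNK ord='2' type='adi-kat'>
<NODE form='astintzen' ord='0'>
<NODE form='du' ord='1'/>
</NODE>
<CHUNK ord='0' type='is'>
<NODE form='atentatu' ord='0'>
<NODE form='batek' ord='2'/>
<NODE form='hirukoitz' ord='1'/>
</NODE>
</CHUNK>
<CHUNK ord='1' type='is'>
<NODE form='Bagdad' ord='0'/>
</CHUNK>
<CHUNK ord='3' type='p-buka'>
<NODE form='.' ord='0'/>
</CHUNK>
</CHUNK>
</SENTENCE>
"""


def chunk_nodes(chunk):
    """Yield the NODE elements of this chunk, excluding those of nested chunks."""
    for top in chunk.findall("NODE"):
        yield from top.iter("NODE")


def linearise(sentence):
    """Order chunks by ord, then the nodes inside each chunk by ord."""
    chunks = sorted(sentence.iter("CHUNK"), key=lambda c: int(c.get("ord")))
    words = []
    for chunk in chunks:
        nodes = sorted(chunk_nodes(chunk), key=lambda n: int(n.get("ord")))
        words.extend(node.get("form") for node in nodes)
    return " ".join(words)


sentence = ET.fromstring(TRANSFER.strip())
print(linearise(sentence))  # atentatu hirukoitz batek Bagdad astintzen du .
```

Capitalisation and the spacing before the full stop are not handled here.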

Detailed architecture

Note that the analysis stage re-uses the FreeLing package, so the relevant documentation is that of the FreeLing project: http://garraf.epsevg.upc.es/freeling/. Auxiliary functions from the Apertium package are also used for the generation and lexical transfer stages and for de-formatting and re-formatting texts. The documentation for these can be found at www.apertium.org and wiki.apertium.org.

The detailed architecture of Matxin can be found in figure 1.

The application which has been developed is stored in an SVN repository which is mirrored for public access at matxin.sourceforge.net. The elements in the figure can be found in four subdirectories:

- trunk/src -- The source code of the programs, which corresponds almost one to one with the names of the processes in figure 1.

- trunk/data/dict -- The dictionaries used in the transfer stage and in the generation stage.

- trunk/data/gram -- The grammars for transfer and generation.

- trunk/bin -- Executable programs.

As can be seen in the figure, on the one hand the three phases of analysis, transfer and generation are distinguished, and on the other hand the modules corresponding to the programs, the dictionaries and the grammars are distinguished. This helps to achieve an open and modular architecture which allows new languages to be added without requiring changes to the programs. It also means the system can be improved without modifying the programs: it is enough to improve and expand the dictionaries and grammars, with no need to understand the code. Since the system is free software, it is of course also possible to modify the code.

We will now detail the elements of the various phases:

- Transfer

- LT: Lexical transfer

- Bilingual dictionary (compiled) -- (eseu.bin)

- Dictionary of chunk types -- (eseu_chunk_type.txt)

- Dictionary of semantic information -- (eu_sem.txt)

- ST_intra: Syntactic transfer within the chunk

- Grammar to exchange information between nodes (intrachunk_move.dat)

- ST_inter: Syntactic transfer between chunks

- Verb subcategorisation dictionary (eu_verb_subcat.txt)

- Preposition dictionary (eseu_prep.txt)

- Grammar to exchange information between chunks (interchunk_move.dat)

- ST_verb: Syntactic transfer of the verb

- Grammar of verb transfer (compiled) (eseu_verb_transfer.dat)

- Generation

- SG_intra: Conversion and ordering within the chunk

- Dictionary of conversion of syntactic information (eu_changes_sint.txt)

- Grammar of ordering within the chunk (eu_intrachunk_order.dat)

- SG_inter: Ordering between chunks

- Grammar for ordering between chunks (eu_interchunk_order.dat)

- MG: Morphological generation

- Dictionary for converting morphological information (compiled) (eu_changes_morph.bin)

- Morphological generation dictionary (compiled) (eu_morph_gen.bin)

- Morphological generation dictionary for any lemma (compiled) (eu_morph_nolex.bin)

- Morphological generation dictionary for measures (eu_measures_gen.bin)

- Morphological preprocessing grammar (eu_morph_preproc.dat)

Now the linguistic resources which have been employed will be described and after that the structure of the programs.

Format of linguistic data

With the objective of encouraging good software engineering as well as facilitating the modification of the system by linguists, the linguistic information is distributed in two types of resources (dictionaries and grammars) and these resources have been given the most abstract and standard format possible.

As has been previously described, the basic linguistic resources, with the exception of those designed specifically for FreeLing, are as follows:

- Dictionaries:

- Transfer: The bilingual dictionary es-eu, the syntactic tag dictionary es-eu, the semantic dictionary eu, the preposition dictionary es-eu and the verb subcategorisation dictionary eu.

- Generation: The syntactic change dictionary, the morphological change dictionary and the morphological dictionary eu.

- Grammars:

- Transfer: grammar for the transfer of verbal chains es-eu and structural transfer grammars es-eu

- Generation: morphological preprocessing grammar eu, re-ordering grammar for both interchunk and intrachunk.

In the search for standardisation, the bilingual and morphological dictionaries are specified in the XML format described by Apertium which has been made compatible with this system.

We have tried to make the grammars finite-state, but in the case of the interchunk movement a recursive grammar has been opted for.

A special effort has been made to optimise the verb chain transfer grammar, as the transformations are deep and may slow down the system. To standardise this grammar, the language of the xfst package (with some restrictions) has been chosen: it is well documented and very powerful, although it has the drawback of not being free software. For this reason, a compiler has been developed which transforms this grammar into a set of regular expressions that are processed in the transfer module.

The rest of the linguistic resources are grammars which take care of different objectives and which have a specific format. At the moment they have a format which is not based on XML but one which has been aimed at finding a compromise between something which is comprehensible for linguists and which is easily processed by the programs. In the future formats and compilers will be designed which will make the grammars more independent of the programs.

In any case the linguistic data is separated from the programs so that it can allow third parties to modify the behaviour of the translator without needing to change the source code.

Spanish→Basque bilingual dictionary

This follows the Apertium specification. It has been obtained from the Elhuyar bilingual dictionary and contains the Basque equivalents for each of the Spanish entries present in the FreeLing dictionary, although only a fraction of these equivalents is distributed on the SourceForge mirror site. The dictionary creation process is described in depth in reference [6].

Given that there is no semantic disambiguation, only the first entry for each word has been entered (except with the prepositions which have been kept), but in order to improve the situation many multiword units have been entered, from both the Elhuyar dictionary and through an automatic process of searching for collocations.

Annex 2 presents the format (which is described in chapter 2 of reference [1]) together with a small part of the bilingual dictionary.

Syntax tag dictionary

This dictionary contains the equivalences between the syntactic tags produced by the FreeLing analyser and the tags used in the transfer and generation of Basque. It is a very simple dictionary that allows these transformations to be kept out of the code.

- Format

es_chunk_type eu_chunk_type #comment

Example of the content of this dictionary

sn is #sintagma nominal izen-sintagma

s-adj adjs #sintagma adjetivo adjektibo-sintagma

sp-de post-izls #sint. preposicional con la prep. "de" izenlagun-sintagma

grup-sp post-sint #sint. preposicional (excepto "de") postposizio-sintagma

Fc p-koma #signo de puntuación "coma" puntuazioa: koma

F-no-c p-ez-koma #signos de puntuación(excep. "coma") koma es diren punt-ikurrak

número zki #cualquier número cardinal edozein zenbaki kardinal

grup-verb adi-kat #grupo verbal aditz-katea

sadv adbs #sintagma adverbial adberbio-sintagma

neg ez #negación ezeztapena

coord emen #conjunción coordinada emendiozko juntagailua
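
A dictionary in this format is straightforward to load. The following Python sketch assumes one entry per line, with the two tags separated by whitespace and everything after "#" treated as a comment; the function name parse_chunk_types is hypothetical and this is not the loader used by Matxin.

```python
def parse_chunk_types(text: str) -> dict[str, str]:
    """Map each Spanish chunk type to its Basque counterpart.

    Assumes 'es_chunk_type eu_chunk_type #comment', one entry per line.
    Lines that do not contain exactly two fields before the comment are skipped.
    """
    mapping = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()
        if len(fields) == 2:
            es_type, eu_type = fields
            mapping[es_type] = eu_type
    return mapping


# A few entries copied from the example content above.
SAMPLE = """\
sn is #sintagma nominal izen-sintagma
grup-sp post-sint #sint. preposicional (excepto "de") postposizio-sintagma
grup-verb adi-kat #grupo verbal aditz-katea
"""

chunk_types = parse_chunk_types(SAMPLE)
print(chunk_types["grup-verb"])  # adi-kat
```

These mappings match the chunk types seen in the interchange examples, where the grup-verb and sn chunks of the analysis appear as adi-kat and is after transfer.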